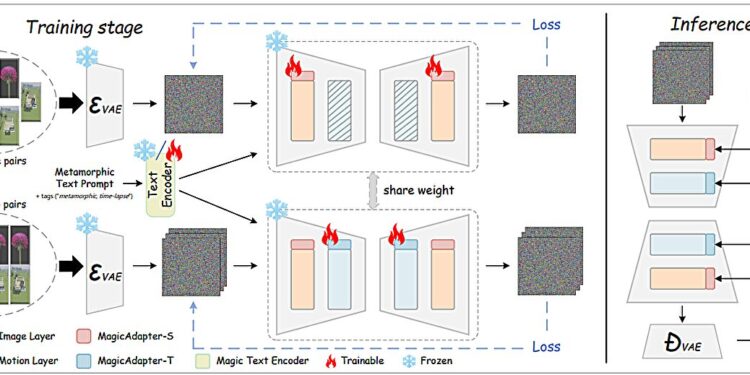

Overview of the proposed approach. Credit: arXiv (2024). DOI: 10.48550/arxiv.2404.05014

While text-to-video AI models such as OpenAI's Sora are rapidly metamorphosing before our eyes, they have struggled to produce metamorphic videos. Simulating a tree sprouting or a flower blooming is harder for AI systems than generating other types of video because it requires knowledge of the physical world and can vary widely.

But now these models have taken an evolutionary step.

Computer scientists from the University of Rochester, Peking University, the University of California, Santa Cruz, and the National University of Singapore have developed a new text-to-video AI model that learns real-world physical knowledge from time-lapse videos. The team describes their model, MagicTime, in a paper published in IEEE Transactions on Pattern Analysis and Machine Intelligence.

“Artificial intelligence has been developed to try to understand the real world and to simulate the activities and events that take place in it,” said Jinfa Huang, a PhD student supervised by Professor Jiebo Luo of Rochester's Department of Computer Science, both of whom are among the paper's authors. “MagicTime is a step toward AI that can better simulate the physical, chemical, biological, or social properties of the world around us.”

Previous models generated videos that typically showed limited motion and poor variation. To train AI models to mimic metamorphic processes more effectively, the researchers built a high-quality dataset of more than 2,000 time-lapse videos with detailed captions.

Currently, the open-source U-Net version of MagicTime generates two-second, 512 × 512-pixel clips at 8 frames per second, and an accompanying diffusion-transformer architecture extends this to 10-second clips. The model can simulate not only biological metamorphosis but also buildings under construction or bread baking in the oven.

But while the generated videos are visually interesting and the demo can be fun to play with, the researchers see the work as an important step toward more sophisticated models that could become valuable tools for scientists.

“Our hope is that someday, for example, biologists could use generative video to speed up the preliminary exploration of ideas,” said Huang. “While physical experiments remain indispensable for final verification, accurate simulations can shorten iteration cycles and reduce the number of live trials needed.”

More information:

Shenghai Yuan et al, MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators, IEEE Transactions on Pattern Analysis and Machine Intelligence (2025). DOI: 10.1109/TPAMI.2025.3558507. On arXiv: DOI: 10.48550/arxiv.2404.05014

Provided by the University of Rochester

Citation: Text-to-video AI blossoms with new metamorphic video capabilities (2025, May 5) retrieved 5 May 2025 from

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.