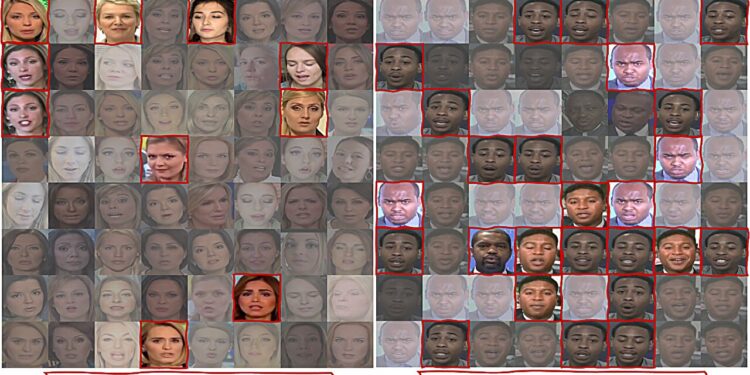

Deepfake detection algorithms often perform differently by race and gender, including a higher false positive rate among black men than white women. New algorithms developed at the University at Buffalo aim to reduce these gaps. Credit: Siwei Lyu

The image spoke for itself. Siwei Lyu, a computer scientist and deepfake expert at the University at Buffalo, created a photo collage from the hundreds of faces that his detection algorithms had incorrectly classified as fake — and the new composition clearly had a predominantly darker skin tone.

“The accuracy of a detection algorithm should be statistically independent of factors such as race,” says Lyu, “but it is obvious that many existing algorithms, including ours, inherit bias.”

Lyu, Ph.D., co-director of UB’s Center for Information Integrity, and his team have developed what they believe are the first-ever deepfake detection algorithms specifically designed to be less biased.

Their two machine learning methods – one that makes algorithms aware of demographic data and another that leaves them blind to that data – have reduced accuracy disparities between races and genders, while in some cases improving overall accuracy.

The research, published on the arXiv preprint server, was presented at the Winter Conference on Applications of Computer Vision (WACV), held January 4-8.

Lyu, the study’s lead author, collaborated with his former student, Shu Hu, Ph.D., now an assistant professor of computer and information technology at Indiana University-Purdue University Indianapolis, as well as with George Chen, Ph.D., assistant professor of information systems at Carnegie Mellon University. Other contributors include Yan Ju, a Ph.D. student in Lyu’s Media Forensic Lab at UB, and postdoctoral researcher Shan Jia.

Ju, the study’s first author, says detection tools often face less scrutiny than the artificial intelligence tools they keep in check, but that doesn’t mean they shouldn’t be held accountable, too.

“Deepfakes have disrupted society so much that the research community was eager to find a solution,” she says, “but even though these algorithms were created for a good cause, we must always be aware of their collateral consequences.”

Demographic-aware or demographic-agnostic

Recent studies have found large disparities in the error rates of deepfake detection algorithms (up to a 10.7% difference in one study) between different races. In particular, it has been shown that some algorithms are better at judging the authenticity of lighter-skinned subjects than darker-skinned ones.

This can result in some groups being more likely to have their real image flagged as fake or, perhaps even more damaging, a doctored image of them accepted as real.

The problem is not necessarily with the algorithms themselves, but with the data they were trained on. Middle-aged white men are often overrepresented in these photo and video datasets, so the algorithms are better at analyzing them than underrepresented groups, says Lyu, a SUNY Empire Innovation Professor in the UB Department of Computer Science and Engineering, within the School of Engineering and Applied Sciences.

“Let’s say one demographic group has 10,000 samples in the dataset and the other only has 100. The algorithm will sacrifice accuracy on the smaller group in order to minimize errors on the larger group,” he adds. “So this reduces overall errors, but at the expense of the smaller group.”
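The arithmetic behind Lyu's example can be sketched in a few lines. The group sizes (10,000 and 100) come from the quote; the error rates and the two "models" below are hypothetical numbers chosen only to illustrate the effect, not results from the study.

```python
def overall_error(groups):
    """Sample-weighted error rate over a list of (n_samples, error_rate) pairs."""
    total = sum(n for n, _ in groups)
    return sum(n * err for n, err in groups) / total


# Hypothetical model A: equally accurate on both groups.
model_a = [(10_000, 0.05), (100, 0.05)]

# Hypothetical model B: slightly better on the large group,
# far worse on the small one.
model_b = [(10_000, 0.04), (100, 0.30)]

err_a = overall_error(model_a)
err_b = overall_error(model_b)

print(f"model A overall error: {err_a:.4f}")
print(f"model B overall error: {err_b:.4f}")
```

Under these assumed numbers, model B has the lower overall error even though it misclassifies 30% of the smaller group, which is why a training objective that only rewards the overall average can favor it.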

While other studies have attempted to make databases more demographically balanced — a process that takes time — Lyu says his team’s study is the first attempt to actually improve the fairness of algorithms themselves.

To explain their method, Lyu uses an analogy of a teacher being evaluated by students’ test scores.

“If a teacher has 80 students who do well and 20 students who do poorly, they will still get a pretty good average,” he says. “So instead, we want to give a weighted average to the students around the middle, forcing the teacher to focus more on everyone instead of the dominant group.”

First, their demographic-aware method supplied algorithms with datasets that labeled subjects’ gender (male or female) and race (white, Black, Asian or other) and instructed them to minimize errors on the less-represented groups.

“We basically tell the algorithms that we care about the overall performance, but we also want to ensure that the performance of each group meets certain thresholds, or at least is only so far below the overall performance,” Lyu explains.
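One generic way to express "overall performance plus a per-group threshold" is a penalized objective. The sketch below is an illustration only: the function name, the `margin` and `penalty` parameters, and the hinge-style penalty are assumptions, not the formulation used in the study.

```python
from collections import defaultdict


def group_aware_objective(losses, groups, margin=0.05, penalty=1.0):
    """Overall mean loss plus a penalty for each group whose mean loss
    exceeds the overall mean by more than `margin` (hypothetical rule)."""
    overall = sum(losses) / len(losses)

    by_group = defaultdict(list)
    for loss, group in zip(losses, groups):
        by_group[group].append(loss)
    group_means = {g: sum(v) / len(v) for g, v in by_group.items()}

    # Only groups that fall too far behind the overall loss are penalized.
    excess = sum(max(0.0, m - overall - margin) for m in group_means.values())
    return overall + penalty * excess, group_means


# Toy per-sample losses: group "a" is large and easy, group "b" small and hard.
losses = [0.1] * 8 + [0.9] * 2
groups = ["a"] * 8 + ["b"] * 2

objective, group_means = group_aware_objective(losses, groups)
print(objective, group_means)
```

In this toy case the plain average loss is about 0.26, but the penalized objective is about 0.85, so an optimizer working on it has a strong incentive to improve group "b" rather than squeeze further gains out of group "a".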

However, datasets are generally not labeled based on race and gender. So the team’s demographic-agnostic method classifies deepfake videos not based on subject demographics, but based on features of the video that are not immediately visible to the human eye.

“Maybe a group of videos in the dataset matches a particular demographic or maybe some other characteristic of the video, but we don’t need demographic information to identify them,” Lyu explains. “This way we don’t need to select which groups should be emphasized. Everything is automated based on the groups that make up that middle slice of data.”
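The article does not spell out the demographic-agnostic loss, so the following is a generic sketch of one label-free way to shift emphasis away from the dominant, easy-to-classify bulk of the data: average only the worst-performing fraction of samples. The function name and the `alpha` parameter are assumptions made for illustration.

```python
def worst_fraction_mean(losses, alpha=0.2):
    """Mean of the largest `alpha` fraction of per-sample losses.

    No demographic labels are used; whichever samples the model currently
    handles worst are the ones that drive the objective.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    # Keep roughly alpha * n samples, and always at least one.
    k = max(1, int(alpha * len(losses)))
    worst = sorted(losses, reverse=True)[:k]
    return sum(worst) / k


# Same toy losses as a plain average would see: mostly easy, a few hard.
losses = [0.1] * 8 + [0.9] * 2

plain_mean = sum(losses) / len(losses)
focused = worst_fraction_mean(losses, alpha=0.2)
print(plain_mean, focused)
```

With `alpha=1.0` this reduces to the ordinary average; smaller values concentrate the objective on the hardest samples, whatever demographic or other characteristic they happen to share.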

Improving fairness and accuracy

The team tested their methods using the popular FaceForensics++ dataset and the state-of-the-art Xception detection algorithm. The methods improved all of the algorithm’s fairness metrics, such as equal false positive rates across races, with the demographic-aware method performing best.
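A fairness metric such as "equal false positive rates across races" can be checked with a short calculation. The sketch below assumes label 1 means fake and 0 means real, so a false positive is a real image flagged as fake; the data and group names are made up for illustration.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups):
    """Per-group false positive rate: share of real items (0) predicted fake (1)."""
    false_pos = defaultdict(int)
    real = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            real[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / real[g] for g in real}


def fpr_gap(rates):
    """Largest difference in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Toy data: each group has four real images and one fake.
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [0, 0, 0, 1, 1, 0, 1, 1, 0, 1]
groups = ["a"] * 5 + ["b"] * 5

rates = false_positive_rates(y_true, y_pred, groups)
gap = fpr_gap(rates)
print(rates, gap)
```

A gap of zero would mean real images from every group are wrongly flagged at the same rate; here group "b" is flagged twice as often as group "a".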

More importantly, Lyu says, their methods actually increased the algorithm’s overall detection accuracy, from 91.49% to 94.17%.

However, when the Xception algorithm was used with different datasets, and the FaceForensics++ dataset with different algorithms, the methods still improved most fairness metrics but slightly reduced overall detection accuracy.

“There may be a small trade-off between performance and fairness, but we can guarantee that performance degradation is limited,” says Lyu. “Of course, the fundamental solution to the bias problem is to improve the quality of data sets, but for now we need to build fairness into the algorithms themselves.”

More information:

Yan Ju et al, Improving fairness in deepfake detection, arXiv (2023). DOI: 10.48550/arxiv.2306.16635


Provided by University at Buffalo

Citation: Team develops new deepfake detector designed to be less biased (January 16, 2024)
