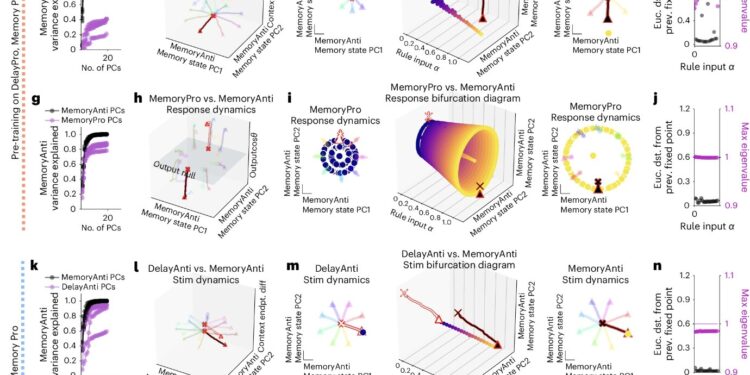

Dynamical motifs were reused for fast learning of new tasks with familiar computational elements. Credit: Nature Neuroscience (2024). DOI: 10.1038/s41593-024-01668-6

Cognitive flexibility, the ability to switch rapidly between different thoughts and mental concepts, is a highly advantageous human capability. It supports multitasking, the rapid acquisition of new skills, and adaptation to new situations.

Although artificial intelligence (AI) systems have advanced considerably in recent decades, they do not yet show the same flexibility as humans in learning new skills and switching between tasks. A better understanding of how biological neural circuits support cognitive flexibility, particularly multitasking, could inform future efforts to develop more flexible AI.

Recently, computer scientists and neuroscientists have been studying neural computations using artificial neural networks. Most of these networks, however, have been trained to tackle one specific task at a time rather than multiple tasks.

In 2019, a research group from New York University, Columbia University, and Stanford University trained a single neural network to perform 20 related tasks.

In a new paper published in Nature Neuroscience, a team at Stanford set out to investigate what allows this neural network to perform modular computations, and thus to tackle several different tasks.

“Flexible computation is a hallmark of intelligent behavior,” Laura N. Driscoll, Krishna Shenoy, and David Sussillo wrote in their paper. “However, little is known about how neural networks contextually reconfigure themselves for different computations. In the present work, we identify an algorithmic neural substrate for modular computation through the study of multitask artificial recurrent neural networks.”

The main goal of Driscoll, Shenoy, and Sussillo's recent study was to investigate the mechanisms underlying computations in recurrently connected artificial neural networks. Their efforts allowed the researchers to identify a computational substrate of these networks that enables modular computations, a substrate they describe as "dynamical motifs."

“Dynamical systems analyses revealed learned computational strategies that reflected the modular structure of the subtasks across the training tasks,” Driscoll, Shenoy, and Sussillo wrote. “Dynamical motifs, which are recurring patterns of neural activity that implement specific computations through dynamics, such as attractors, decision boundaries, and rotations, were reused across tasks. For example, tasks requiring memory of a continuous circular variable reused the same ring attractor.”
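To make the ring attractor idea concrete, here is a minimal sketch in Python. It is not the trained network from the study: it is a hypothetical two-dimensional toy system, dh/dt = h(1 - |h|^2), whose fixed points form a circle, so the angle of the state can store a circular variable. The task names `memory_pro` and `memory_anti`, the function names, and all parameter values are assumptions made for illustration. The two toy tasks share the same memory dynamics and differ only in how the remembered angle is read out.

```python
import math


def hold_on_ring(x, y, steps=200, dt=0.1):
    """Euler-integrate dh/dt = h * (1 - |h|^2).

    Both coordinates are scaled by the same positive gain at every step,
    so the radius relaxes toward 1 while the angle is left unchanged.
    """
    for _ in range(steps):
        gain = 1.0 + dt * (1.0 - (x * x + y * y))
        x, y = gain * x, gain * y
    return x, y


def encode(angle, strength=0.3):
    """Brief stimulus: push the state away from the origin along `angle`."""
    return strength * math.cos(angle), strength * math.sin(angle)


def decode(x, y):
    """Read the remembered angle (in radians, within [0, 2*pi)) from the state."""
    return math.atan2(y, x) % (2 * math.pi)


def run_task(task, stimulus_angle):
    """Two hypothetical tasks that reuse the same ring for memory."""
    x, y = hold_on_ring(*encode(stimulus_angle))
    remembered = decode(x, y)
    if task == "memory_pro":   # respond toward the remembered direction
        return remembered
    if task == "memory_anti":  # respond opposite to the remembered direction
        return (remembered + math.pi) % (2 * math.pi)
    raise ValueError(f"unknown task: {task}")


if __name__ == "__main__":
    for task in ("memory_pro", "memory_anti"):
        print(task, round(run_task(task, 1.0), 6))
```

In this toy, only the readout changes between tasks while the memory mechanism is shared, which is the sense in which a single motif can serve several tasks.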

The researchers conducted a series of analyses showing that, in recurrent neural networks, dynamical motifs are implemented by clusters of units when the units' activation function is restricted to be positive. They also found that lesioning these clusters impairs the networks' ability to perform the corresponding modular computations.
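The lesion logic can be illustrated with a small hand-wired recurrent network. This is a sketch under strong assumptions, not the authors' model: the weights `W` and `B`, the cluster names, and the two toy tasks are all hypothetical, and nothing here is trained. Units use a ReLU activation, so their activity is never negative. One cluster holds a cue over time and the other relays a current input, so silencing one cluster should impair only the task that depends on it.

```python
def relu(values):
    """Positive-only activation: negative pre-activations are clipped to 0."""
    return [max(0.0, v) for v in values]


# Hypothetical hand-wired recurrent weights (rows: target unit, columns: source unit).
# Units 0-1 excite themselves with weight 1 and so hold their activity over time.
# Units 2-3 have no recurrence and only reflect the current input.
W = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
# Input weights (rows: unit, columns: input channel; 0 = cue, 1 = go signal).
B = [
    [0.5, 0.0],
    [0.5, 0.0],
    [0.0, 0.5],
    [0.0, 0.5],
]
CLUSTERS = {"memory": [0, 1], "relay": [2, 3]}


def step(h, u, lesioned=()):
    """One update h <- relu(W h + B u), with lesioned units clamped to 0."""
    pre = [
        sum(W[i][j] * h[j] for j in range(4)) + sum(B[i][k] * u[k] for k in range(2))
        for i in range(4)
    ]
    h_new = relu(pre)
    for i in lesioned:
        h_new[i] = 0.0
    return h_new


def run(inputs, lesioned=()):
    """Run the network from rest over a sequence of input vectors."""
    h = [0.0] * 4
    for u in inputs:
        h = step(h, u, lesioned)
    return h


def memory_task(lesion=None):
    """Present a cue of size 0.8, wait five steps, read out the memory cluster."""
    inputs = [[0.8, 0.0]] + [[0.0, 0.0]] * 5
    h = run(inputs, CLUSTERS.get(lesion, ()))
    return h[0] + h[1]


def relay_task(lesion=None):
    """Wait five steps, present a go signal of size 0.6, read out the relay cluster."""
    inputs = [[0.0, 0.0]] * 5 + [[0.0, 0.6]]
    h = run(inputs, CLUSTERS.get(lesion, ()))
    return h[2] + h[3]


if __name__ == "__main__":
    for lesion in (None, "memory", "relay"):
        print(lesion, memory_task(lesion), relay_task(lesion))
```

Lesioning the hypothetical `memory` cluster removes the stored cue while the relay readout is unchanged, and lesioning `relay` does the reverse. This is only a cartoon of the modular deficits the article describes.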

“The motifs were reconfigured for rapid transfer learning after an initial training phase,” Driscoll, Shenoy, and Sussillo wrote. “This work establishes dynamical motifs as a fundamental unit of compositional computation, intermediate between the neuron and the network. As whole-brain studies record the activity of multiple specialized systems simultaneously, the dynamical motif framework will guide questions about specialization and generalization.”

Overall, the team's study identifies a substrate of recurrent neural networks that contributes significantly to their ability to tackle multiple tasks efficiently. In the future, the results could inform research in both neuroscience and computer science, potentially leading to a better understanding of the neural processes that underlie cognitive flexibility and to new strategies for emulating these processes in artificial neural networks.

More information:

Laura N. Driscoll et al, Flexible multitask computation in recurrent networks utilizes shared dynamical motifs, Nature Neuroscience (2024). DOI: 10.1038/s41593-024-01668-6

© 2024 Science X Network

Citation: Flexible multitask computation in recurrent neural networks relies on dynamical motifs, study finds (2024, August 16) retrieved August 17, 2024 from
