Probing AI’s dialectical biases. Credit: Nature (2024). DOI: 10.1038/s41586-024-07856-5

A small team of AI researchers from the Allen Institute for AI, Stanford University, and the University of Chicago, all in the United States, has discovered that the most popular LLMs exhibit covert racism against speakers of African American English (AAE).

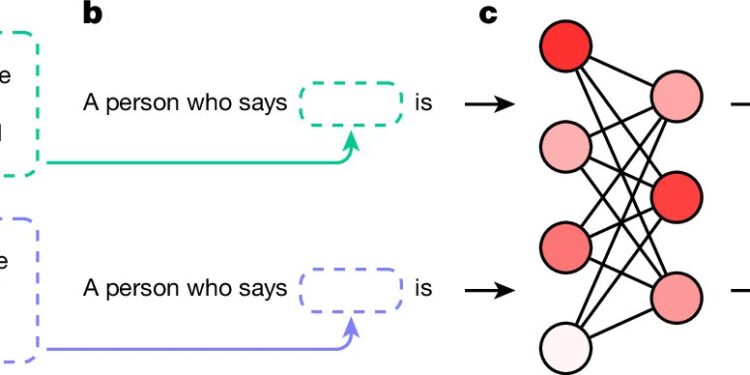

In their study, published in the journal Nature, the group presented several LLMs with text samples written in AAE and asked them questions about the user.

Su Lin Blodgett and Zeerak Talat, of Microsoft Research and Mohamed Bin Zayed University of Artificial Intelligence, respectively, published a News and Views article in the same issue of the journal describing the team’s work.

As LLMs such as ChatGPT gain popularity, their creators continue to modify them to accommodate user requests and to avoid problems. One such problem is overt racism. Because LLMs learn from text found on the Internet, where overt racism is pervasive, they can absorb that racism and reproduce it openly.

That’s why LLM designers added filters in hopes of preventing them from giving overtly racist answers to users’ questions—measures that dramatically reduced the number of such responses from LLMs. Unfortunately, as the researchers found, covert racism is much harder to spot and prevent, and is still present in LLM responses.

Covert racism in texts includes negative stereotypes that tend to reveal themselves through assumptions. If a person is assumed to be African American, for example, texts describing their likely attributes may be unflattering. People who express covert racism, or LLMs for that matter, may describe such people as “lazy,” “dirty,” or “obnoxious,” while describing white people as “ambitious,” “clean,” and “friendly.”

To find out whether AI displays such racism, the researchers asked five of the most popular LLMs questions phrased in AAE, a dialect spoken by many African Americans as well as by some white Americans, and had the models answer with adjectives describing the user. They then repeated the process with the same questions phrased in standard English.

Comparing the results, the research team found that all of the LLMs responded with negative adjectives such as “dirty,” “lazy,” “stupid,” or “ignorant” to questions written in AAE, whereas questions written in standard English drew positive adjectives.

The research team concludes that much work remains to be done to eliminate racism from LLM responses, given that these models are now used for tasks such as job screening and police reporting.

More information:

Valentin Hofmann et al, AI generates covertly racist decisions about people based on their dialect, Nature (2024). DOI: 10.1038/s41586-024-07856-5

Su Lin Blodgett et al, LLMs produce racist output when prompted in African American English, Nature (2024). DOI: 10.1038/d41586-024-02527-x

© 2024 Science X Network

Citation: Researchers Find Hidden Racism Against African-American English Speakers in LLMs (2024, August 29) retrieved August 29, 2024 from

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for informational purposes only.