Credit: Simon Fraser University

Despite significant progress in developing AI systems capable of understanding the physical world as humans do, researchers are struggling to model a certain aspect of our visual system: the perception of light.

“Determining the influence of light on a given photograph is a bit like trying to separate the ingredients of an already-baked cake,” says Chris Careaga, a Ph.D. student in SFU’s Computational Photography Lab. The task requires undoing the complex interactions between light and surfaces in a scene. This problem is called intrinsic decomposition and has been studied for almost half a century.

In a new paper published in the journal ACM Transactions on Graphics, researchers at Simon Fraser University’s Computational Photography Lab develop an AI approach to intrinsic decomposition that works across a wide range of images. Their method automatically separates an image into two layers: one with only lighting effects and one with the true colors of objects in the scene.
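The two-layer split described above is commonly modeled as a per-pixel product: the observed image equals the object color (often called albedo) multiplied by the lighting (often called shading). The sketch below illustrates only that relationship on a two-pixel toy image. The helper names `compose` and `albedo_from_shading` are hypothetical, the single-channel shading is a simplifying assumption, and nothing here reproduces the neural networks from the paper.

```python
# Minimal sketch of the usual intrinsic image model: image = albedo * shading.
# Assumptions: RGB albedo, one scalar shading value per pixel, images stored
# as nested lists. All function names are hypothetical.

def compose(albedo, shading):
    """Multiply each pixel's RGB albedo by that pixel's scalar shading."""
    return [
        [tuple(c * s for c in rgb) for rgb, s in zip(a_row, s_row)]
        for a_row, s_row in zip(albedo, shading)
    ]

def albedo_from_shading(image, shading, eps=1e-6):
    """Recover albedo by dividing the image by a known or estimated shading.

    eps guards against division by zero in fully dark pixels.
    """
    return [
        [tuple(c / max(s, eps) for c in rgb) for rgb, s in zip(i_row, s_row)]
        for i_row, s_row in zip(image, shading)
    ]

# One row, two pixels: a red surface in full light, a blue surface in shadow.
albedo = [[(0.8, 0.2, 0.2), (0.2, 0.2, 0.8)]]
shading = [[1.0, 0.5]]

image = compose(albedo, shading)                 # what a camera would record
recovered = albedo_from_shading(image, shading)  # undo the lighting
```

Once one layer is known, the other follows by division. The hard part, and the part the article is about, is estimating a plausible shading layer from a single photograph in the first place.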

“The main innovation behind our work is to create a system of neural networks individually tasked with solving simpler problems. They work together to understand the lighting in a photograph,” adds Careaga.

Although intrinsic decomposition has been studied for decades, SFU’s new invention is the first in the field to accomplish this task for any HD image a person might take with their camera.

“By changing lighting and colors separately, a whole range of CGI and VFX-only applications become possible for regular image editing,” says Dr. Yağız Aksoy, who heads the Computational Photography Lab at SFU.

“This physical understanding of light makes it a valuable and accessible tool for content creators, photo editors and post-production artists, as well as new technologies such as augmented reality and spatial computing.”
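To make "changing lighting and colors separately" concrete, here is a toy sketch that assumes the two layers are already available and that the image is their per-pixel product. It brightens the lighting without touching object color, then repaints the object without touching the lighting. The function names, gain, and tint values are all hypothetical, and this is an illustration of the idea rather than the lab's editing tool.

```python
# Toy illustration of editing lighting and color independently, assuming
# image = albedo * shading. All names and values are hypothetical.

def recompose(albedo, shading):
    """Rebuild an image from RGB albedo and per-pixel scalar shading."""
    return [
        [tuple(c * s for c in rgb) for rgb, s in zip(a_row, s_row)]
        for a_row, s_row in zip(albedo, shading)
    ]

def scale_lighting(shading, gain):
    """Brighten or dim the lighting layer; object colors are untouched."""
    return [[s * gain for s in row] for row in shading]

def tint_albedo(albedo, tint):
    """Recolor the object layer per channel; the lighting is untouched."""
    return [
        [tuple(c * t for c, t in zip(rgb, tint)) for rgb in row]
        for row in albedo
    ]

# A uniformly gray object, lit on the left and shadowed on the right.
albedo = [[(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)]]
shading = [[1.0, 0.25]]

# Edit 1: double the light, keep the colors.
brighter = recompose(albedo, scale_lighting(shading, 2.0))

# Edit 2: repaint the object reddish, keep the original shadow.
repainted = recompose(tint_albedo(albedo, (1.0, 0.5, 0.5)), shading)
```

In the repainted result the shadowed pixel stays darker than the lit one, which is the property that makes such edits look consistent with the scene.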

The group has since extended its intrinsic decomposition approach, applying it to the problem of image compositing. “When you insert an object or person from one image into another, it’s usually obvious that it’s been edited because the lighting and colors don’t match,” says Careaga.

“Using our intrinsic decomposition technique, we can change the lighting of the inserted object to make it more realistic in the new scene.” In addition to publishing a paper on this subject, presented at SIGGRAPH Asia last December, the group has also developed a computer interface allowing users to interactively edit the lighting of these “composite” images. S. Mahdi H. Miangoleh, a Ph.D. student in Aksoy’s lab, also contributed to this work.

Aksoy and his team plan to extend their methods to video for use in film post-production, and further develop AI’s capabilities in interactive lighting editing. They emphasize a creativity-driven approach to AI in film production, aiming to empower independent and low-budget productions.

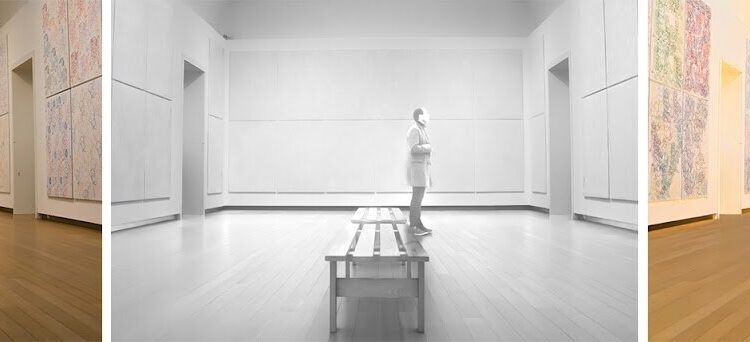

To better understand the challenges of these production contexts, the group developed a computational photography studio on the Simon Fraser University campus, where they conduct research in an active production environment.

The work described above represents some of the group’s first steps toward providing AI-based editing capabilities to British Columbia’s rich film industry.

The emphasis on intrinsic decomposition allows even low-budget productions to easily adjust lighting, without the need for costly reshoots. These innovations support local filmmakers, maintaining British Columbia’s position as a global filmmaking hub, and will serve as the foundation for many other AI-based applications that will come from SFU’s Computational Photography Lab.

More information:

Chris Careaga et al, Intrinsic Image Decomposition via Ordinal Shading, ACM Transactions on Graphics (2023). DOI: 10.1145/3630750

GitHub repository: github.com/compphoto/Intrinsic

Provided by Simon Fraser University

Citation: Researchers develop AI capable of understanding light in photographs (February 21, 2024) retrieved February 21, 2024 from
