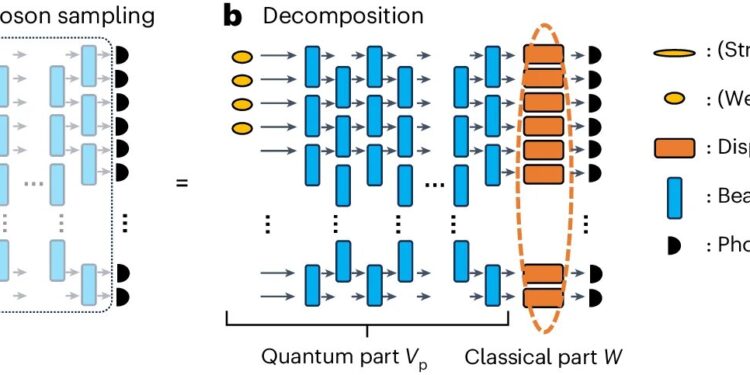

Decomposition of a lossy GBS circuit. Credit: Nature Physics (2024). DOI: 10.1038/s41567-024-02535-8

In an exciting development for quantum computing, researchers from the University of Chicago’s Department of Computer Science, the Pritzker School of Molecular Engineering, and Argonne National Laboratory have introduced a classical algorithm that simulates Gaussian boson sampling (GBS) experiments.

This breakthrough not only clarifies the complexities of current quantum systems, but also represents a significant advance in our understanding of how quantum computing and classical computing can work together. The research appears in Nature Physics.

The challenge of Gaussian boson sampling

Gaussian boson sampling has emerged as a promising approach to demonstrate quantum advantage, that is, the ability of quantum computers to perform tasks that classical computers cannot perform efficiently. The path to this breakthrough was marked by a series of innovative experiments that tested the limits of quantum systems.

Previous studies have shown that classical computers struggle to simulate GBS under ideal conditions. However, assistant professor and author Bill Fefferman pointed out that the noise and photon loss present in real experiments create additional challenges that require careful analysis.

Experiments by teams at the University of Science and Technology of China and at Xanadu, a Canadian company specializing in quantum systems, have shown that quantum devices can produce results consistent with GBS predictions. The presence of noise often obscures these results, however, raising questions about the claimed quantum advantage. These experiments have served as the basis for the current research, pushing scientists to refine their approaches to GBS and better understand its limitations.

Understanding noise in quantum experiments

“While theoretical foundations have established that quantum systems can outperform classical systems, the noise present in real experiments introduces complexities that require rigorous analysis,” Fefferman explained. “Understanding how noise affects performance is critical as we strive to implement practical applications of quantum computing.”

The new algorithm addresses these complexities by exploiting the high photon loss rates common in current GBS experiments. The researchers used a classical tensor network approach that capitalizes on how quantum states behave in these noisy environments, making the simulation more efficient, more accurate, and manageable with available computational resources.
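One way to see why high photon loss helps a classical simulator is to look at a single squeezed mode. The sketch below is only an illustration and not the researchers' implementation. It assumes a single-mode squeezed vacuum with squeezing parameter `r` passing through a pure-loss channel with transmissivity `eta`, in units where the vacuum quadrature variance is 1. Under those assumptions, the lossy state can be rewritten as a less-squeezed pure state plus classical Gaussian noise. The function name and its parameters are hypothetical.

```python
import math


def lossy_squeezed_decomposition(r, eta):
    """Split a lossy single-mode squeezed state into quantum and classical parts.

    Assumptions (illustrative, not taken from the paper's code):
      - input is squeezed vacuum with quadrature variances e^{-2r} and e^{2r}
      - loss maps each variance V to eta * V + (1 - eta)
      - vacuum variance is 1
    Returns (effective_squeezing, classical_noise_variance, quantum_mean_photons).
    """
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")

    # Quadrature variances after the loss channel.
    v_squeezed = eta * math.exp(-2 * r) + (1 - eta)
    v_antisqueezed = eta * math.exp(2 * r) + (1 - eta)

    # Pure squeezed state sharing the same squeezed variance: e^{-2s} = v_squeezed.
    s = -0.5 * math.log(v_squeezed)

    # The leftover anti-squeezed variance acts as classical Gaussian noise.
    classical_noise = v_antisqueezed - 1.0 / v_squeezed

    # Mean photon number carried by the remaining quantum part.
    quantum_photons = math.sinh(s) ** 2
    return s, classical_noise, quantum_photons


if __name__ == "__main__":
    for eta in (1.0, 0.7, 0.3):
        s, noise, n = lossy_squeezed_decomposition(r=1.5, eta=eta)
        print(f"eta={eta:.1f}  effective squeezing={s:.3f}  "
              f"classical noise={noise:.3f}  quantum photons={n:.3f}")
```

In this toy model the effective squeezing can never exceed `-0.5 * ln(1 - eta)`, however large `r` is, so at low transmissivity most of the output is classical noise that is cheap to sample, and only a small quantum remainder needs a more expensive method such as a tensor network.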

Revolutionary results

Remarkably, the researchers found that their classical simulation performed better than some state-of-the-art GBS experiments in various benchmark tests.

“What we’re seeing is not a failure of quantum computing, but rather an opportunity to refine our understanding of its capabilities,” Fefferman said. “This allows us to improve our algorithms and push the boundaries of what we can achieve.”

The algorithm outperformed experiments in accurately capturing the ideal distribution of GBS output states, raising questions about the quantum advantage claimed by existing experiments. This finding paves the way for improving the design of future quantum experiments, suggesting that improving photon transmission rates and increasing the number of squeezed states could significantly increase their efficiency.

Implications for future technologies

The implications of these findings extend beyond the field of quantum computing. As quantum technologies continue to evolve, they have the potential to revolutionize fields such as cryptography, materials science, and drug discovery. For example, quantum computing could lead to advances in secure communication methods, enabling more robust protection of sensitive data.

In materials science, quantum simulations can help discover new materials with unique properties, paving the way for advances in technology, energy storage, and manufacturing. By advancing our understanding of these systems, researchers are laying the foundation for practical applications that could change the way we approach complex problems across a variety of industries.

The quest for quantum advantage is not just an academic endeavor; it has real-world implications for industries that rely on complex computations. As quantum technologies mature, they have the potential to play a critical role in optimizing supply chains, improving artificial intelligence algorithms, and enhancing climate modeling.

Collaboration between quantum and classical computing is crucial to achieving these advances, as it allows researchers to exploit the strengths of both paradigms.

A cumulative research effort

Fefferman worked closely with Professor Liang Jiang of the Pritzker School of Molecular Engineering and former postdoctoral fellow Changhun Oh, now an assistant professor at the Korea Advanced Institute of Science and Technology, on previous work that led to this research.

In 2021, they investigated the computational power of noisy intermediate-scale quantum (NISQ) devices through lossy boson sampling. The paper found that photon loss affects classical simulation costs as a function of the number of input photons, which could lead to exponential savings in classical time complexity.

Their second paper then focused on the impact of noise in experiments designed to demonstrate quantum supremacy, showing that even with significant noise, quantum devices can still produce results that are difficult for classical computers to match. In their third paper, they explored Gaussian boson sampling (GBS) by proposing a new architecture that improves programmability and resilience against photon loss, making large-scale experiments more feasible.

They then introduced a classical algorithm in their fourth paper that generates results closely aligned with ideal boson sampling, improving benchmarking techniques and highlighting the importance of carefully selecting experiment sizes to preserve the quantum signal amidst noise.

Finally, in their latest study, they developed quantum-inspired classical algorithms to solve graph theory problems such as finding the densest k-subgraph and the maximum-weight clique, as well as a quantum chemistry problem called molecular vibronic spectra generation. Their results suggest that the claimed advantages of quantum methods may not be as great as previously thought, as their classical sampler performs similarly to the Gaussian boson sampler.

The development of the classical simulation algorithm not only improves our understanding of Gaussian boson sampling experiments, but also highlights the importance of continuing research in quantum and classical computing. The ability to simulate GBS more efficiently serves as a bridge to more powerful quantum technologies, helping to overcome the complexities of modern challenges.

More information:

Changhun Oh et al, Classical algorithm for simulating experimental Gaussian boson sampling, Nature Physics (2024). DOI: 10.1038/s41567-024-02535-8

Provided by the University of Chicago

Citation: New classical algorithm improves understanding of future of quantum computing (2024, September 11) retrieved September 11, 2024 from
