Credit: Trends in Cognitive Sciences (2024). DOI: 10.1016/j.tics.2024.09.005

Imagine a child visiting a farm and seeing sheep and goats for the first time. A parent tells them which is which, helping the child learn to distinguish between the two. But what happens when the child no longer has this guidance on a return visit? Will they still be able to tell the animals apart?

Neuroscientist Franziska Bröker studies how humans and machines learn without supervision, like a child on their own, and has uncovered a conundrum: unsupervised learning can either help or hinder progress, depending on certain conditions. The article is published in Trends in Cognitive Sciences.

In the world of machine learning, algorithms thrive on unlabeled data. They analyze large volumes of information without explicit labels and still manage to learn useful patterns. This success raises the question: if machines can learn so well this way, why do humans struggle in similar situations?

According to recent studies, the answer may lie in how we make predictions and reinforce them in the absence of feedback. In other words, results depend on how well our inner understanding of a task matches what it actually requires.

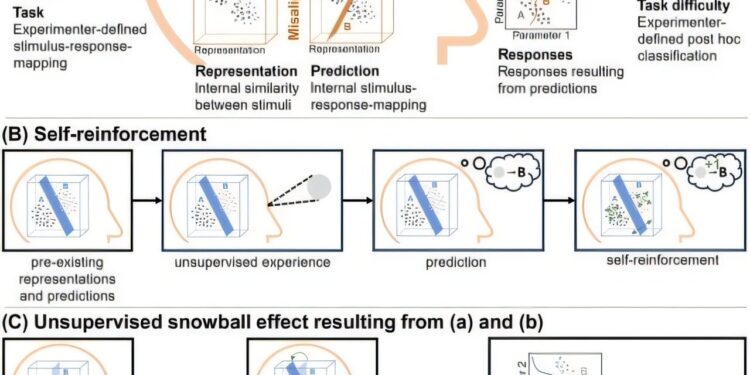

Research shows that humans, like machines, use predictions to make sense of new information. For example, if someone believes that wooliness is the main difference between sheep and goats, they might incorrectly classify a woolly goat as a sheep. When no one is there to correct this error, their incorrect prediction is reinforced, making it even more difficult to learn the correct difference. This “self-reinforcing” process can lead to a snowball effect: if their initial hypothesis is correct, learning improves, but if it is wrong, they can get stuck in a loop of false beliefs.
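The snowball effect can be illustrated with a small simulation. The sketch below is not the model from the paper: it is a toy Hebbian-style learner built on assumptions made up for illustration. Stimuli have two features, only the first of which defines the true category, and the learner strengthens whatever it just guessed without ever seeing the correct answer. All names (`make_stimuli`, `self_reinforce`, `accuracy`) and parameter values are hypothetical.

```python
import numpy as np

def make_stimuli(n, rng, noise=0.3):
    """Sample n stimuli with two noisy binary features.

    Feature 0 defines the true category. Feature 1 varies just as
    clearly but is unrelated to the category (an assumed stand-in
    for a misleading cue such as wooliness).
    """
    centers = rng.choice([-1.0, 1.0], size=(n, 2))
    x = centers + noise * rng.normal(size=(n, 2))
    labels = centers[:, 0]
    return x, labels

def self_reinforce(w_init, x, lr=0.05):
    """Update weights using the learner's own guesses as if they were feedback."""
    w = np.array(w_init, dtype=float)
    for xi in x:
        guess = 1.0 if w @ xi >= 0 else -1.0  # the learner's own prediction
        w += lr * guess * xi                  # Hebbian-style step toward that prediction
    return w

def accuracy(w, x, labels):
    """Fraction of stimuli whose predicted sign matches the true category."""
    preds = np.where(x @ w >= 0, 1.0, -1.0)
    return float(np.mean(preds == labels))

rng = np.random.default_rng(0)
x_train, _ = make_stimuli(2000, rng)      # true labels are never shown to the learner
x_test, y_test = make_stimuli(2000, rng)

# One learner starts out relying mostly on the relevant feature,
# the other mostly on the irrelevant one.
aligned = self_reinforce([1.0, 0.2], x_train)
misaligned = self_reinforce([0.2, 1.0], x_train)

acc_aligned = accuracy(aligned, x_test, y_test)
acc_misaligned = accuracy(misaligned, x_test, y_test)
print(f"aligned start:    {acc_aligned:.2f}")
print(f"misaligned start: {acc_misaligned:.2f}")
```

Under these assumptions, the learner that starts out relying on the relevant feature should end up classifying almost perfectly, while the learner that starts out relying on the irrelevant feature should remain near chance, because each guess only strengthens the belief that produced it.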

This phenomenon does not only apply to animal identification. From learning a musical instrument to mastering a new language, the same dynamic is observed. Without guidance and feedback, people often reinforce incorrect methods, making their mistakes more difficult to correct later.

Research suggests that unsupervised learning works best when a person’s initial understanding is already somewhat aligned with the task. For more difficult tasks, such as learning complex linguistic rules or difficult motor skills, feedback is essential to avoid these pitfalls.

Ultimately, the mixed results of unsupervised learning tell a larger story: It’s not a question of whether learning without feedback works, but when and how. As humans and machines continue to learn in more complex environments, understanding these nuances could lead to better teaching methods, more effective training tools, and perhaps even smarter algorithms that are better able to correct themselves, as we do.

Expertise and unsupervised learning

While laboratory studies reveal diverse outcomes of unsupervised learning, understanding its implications in real-world learning scenarios requires examining the acquisition of expertise, which arises from extensive learning with varying degrees of supervision.

For example, radiologists receive structured feedback early in their careers, but gradually lose access to explicit supervisory guidance. If unsupervised learning alone could foster expertise, we would expect steady improvement, but the evidence suggests otherwise.

Critics argue that experience is not necessarily an indicator of expertise, as it may merely reflect seniority without substantial improvement in skills. Biases, such as confirmation bias, can further distort unsupervised learning by favoring information that fits preconceptions, thereby hindering progress.

Instead, regular feedback on decisions seems necessary for continued improvement. This is consistent with the representation-task alignment framework, which posits that initial feedback helps learners construct accurate mental representations before they can effectively self-regulate their learning.

For example, in learning motor skills, removing feedback early in the learning process can lead to lower performance, while removing it later, when the learner’s predictions are more accurate, helps maintain, or even improve, performance. This highlights that expertise requires not only experience, but also timely supervision during critical phases of learning.

Self-reinforcement in unsupervised learning

Unsupervised learning often relies on self-reinforcing mechanisms, in which learners use their own predictions instead of external validation. This form of learning is well explored in perceptual and category learning, where Hebbian models demonstrate how unsupervised learning can improve or degrade performance depending on the alignment of the learner’s representations with the task.

These models successfully accounted for semi-supervised categorization, such as children’s acquisition of linguistic labels, suggesting that self-reinforcement can shape learning trajectories.

However, the absence of feedback, particularly in the acquisition of expertise, can perpetuate erroneous predictions, as illustrated by stereotypes. Without external correction, individuals can reinforce their own incorrect predictions, a phenomenon modeled by the constructivist coding hypothesis.

This can lead to persistent errors because actions are treated as validated even when no feedback is provided, highlighting the role of selective feedback in moderating unsupervised learning.

Internal feedback and neural mechanisms

Self-reinforcement requires internal learning signals that operate independently of external supervision. While the neural bases of learning through external feedback, such as rewards and punishments, are well understood, the mechanisms that govern self-generated feedback are less clear.

New research suggests that brain areas active when processing external feedback are also engaged during inferred feedback, for example when a learner reinforces their own choices. Confidence in one’s decisions, even in the absence of external feedback, appears to be a key driver of self-reinforcement, and subjective rewards can shape learning trajectories by reinforcing past decisions.

These internal feedback mechanisms can lead learners into “learning traps,” where they stop exploring alternative strategies and focus only on exploiting their past choices. Neuroimaging studies reveal that preferences are updated only for remembered choices, confirming the role of internal feedback in guiding unsupervised learning.

Additionally, neural replay – a process by which the brain reactivates past experiences during rest – has been associated with self-reinforcement, highlighting its role in refining mental representations without external guidance.

Finding the right balance

The literature on expertise, as well as controlled studies of unsupervised learning, supports the idea that self-reinforcement can improve or hinder performance depending on the alignment between the learner’s mental representations and the task to be accomplished. Although unsupervised learning has potential, it is not a silver bullet. Rather, its effectiveness depends on the complex interplay between existing knowledge, internal cues, and task structure.

Future research should further explore the relationship between unsupervised self-reinforcement and external supervision cues, particularly in real-world learning contexts. This involves studying how these mechanisms interact in human learning, which may involve a unified learning system rather than the separate, task-specific algorithms often used in artificial intelligence.

Integrating insights from neuroscience, psychology, and machine learning will help develop more comprehensive models of human learning, leading to instructional designs that better support lifelong learning and avoid overconfidence in erroneous conclusions.

Ultimately, understanding the dynamics of unsupervised learning, including its potential pitfalls, will improve educational approaches and support the development of expertise in various fields. By balancing self-reinforcement and critical external feedback, we can optimize learning systems to foster deep, enduring expertise while avoiding the pitfalls of unsupervised overconfidence.

More information:

Franziska Bröker et al., Demystifying unsupervised learning: how it helps and hurts, Trends in Cognitive Sciences (2024). DOI: 10.1016/j.tics.2024.09.005

Provided by the Max Planck Society

Citation: Learning without feedback: Neuroscientist helps uncover influence of unsupervised learning on humans and machines (October 18, 2024) retrieved October 18, 2024 from
