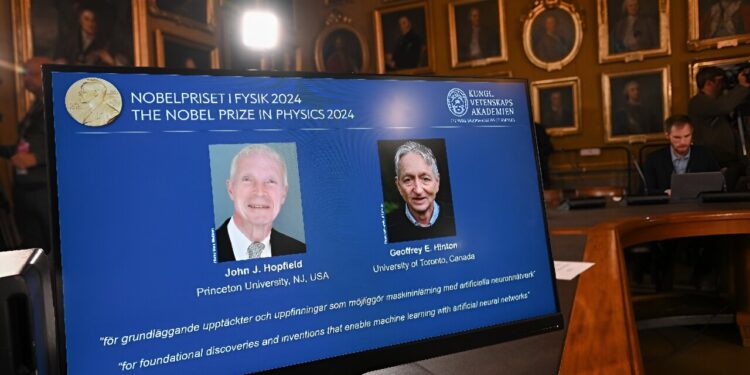

British-Canadian Geoffrey Hinton, known as the “godfather of AI”, and American John Hopfield were awarded the Nobel Prize in Physics in 2024.

The Nobel Prize in Physics was awarded Tuesday to two scientists for their discoveries that laid the foundation for the artificial intelligence used by hugely popular tools such as ChatGPT.

British-Canadian Geoffrey Hinton, known as the “godfather of AI”, and American physicist John Hopfield received the prize “for foundational discoveries and inventions that enable machine learning with artificial neural networks”, the Nobel jury said.

But what are those, and what does it all mean? Here are some answers.

What are neural networks and machine learning?

Mark van der Wilk, an expert in machine learning at the University of Oxford, told AFP that an artificial neural network is a mathematical construct “vaguely inspired” by the human brain.

Our brain has a network of cells called neurons, which respond to external stimuli, such as things our eyes have seen or our ears have heard, by sending signals to each other.

When we learn things, some connections between neurons become stronger, while others weaken.

Unlike traditional computing, which works more like reading a recipe, artificial neural networks roughly mimic this process.

Biological neurons are replaced with simple calculations, sometimes called “nodes,” and the incoming stimuli they learn from are replaced with training data.

The idea is that this could allow the network to learn over time, hence the term machine learning.
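As a rough illustration of that idea, here is a minimal sketch of a single artificial node that learns the logical AND function from four training examples. The function names, the learning rate and the choice of AND as the task are assumptions made for this example, not something described by the researchers quoted here.

```python
# Illustrative sketch only: one artificial "node" learning logical AND.
# predict, train, the rate of 0.5 and the AND task are assumptions for this example.

def predict(weights, bias, inputs):
    # The node is a simple calculation: multiply, add, then apply a threshold.
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(data, epochs=20, rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)
            # Connections are strengthened or weakened depending on the error.
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias

# Training data: pairs of inputs and the answer the node should give.
training_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(training_data)
outputs = [predict(weights, bias, inputs) for inputs, _ in training_data]
print(outputs)  # [0, 0, 0, 1]
```

The node starts out knowing nothing (all weights are zero) and only reaches the right answers after several passes over the training data.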

Artificial neural networks are powerful tools for AI.

What did Hopfield discover?

But before machines could learn, another human characteristic was needed: memory.

Have you ever struggled to remember a word? Take “goose”. You might run through similar words – goon, good, ghoul – before landing on goose.

“If you’re given a pattern that isn’t exactly what you need to remember, you need to fill in the blanks,” van der Wilk said.

“That’s how you remember a particular memory.”

This is the idea of the “Hopfield network” – also called “associative memory” – that the physicist developed in the early 1980s.

Hopfield’s contribution means that when an artificial neural network is given something that is slightly wrong, it can run through previously stored patterns to find the closest match.
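A toy version of this kind of associative memory can be sketched in a few lines. The example below assumes patterns made of +1 and -1 values, a simple Hebbian-style storage rule and two made-up patterns. It is a simplified illustration, not a reproduction of Hopfield's original work.

```python
# Illustrative sketch of a Hopfield-style associative memory.
# The stored patterns and the function names are assumptions for this example.

def store(patterns):
    # Each pair of units gets a stronger link when they agree in a stored pattern.
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights

def recall(weights, state, sweeps=5):
    # Update the units one at a time until the state settles on a stored pattern.
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            total = sum(w * s for w, s in zip(weights[i], state))
            state[i] = 1 if total >= 0 else -1
    return state

stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = store(stored)

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with its first value flipped
recovered = recall(weights, noisy)
print(recovered == stored[0])  # True
```

The network is never told which stored pattern the damaged input came from. It simply settles into the closest one.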

This proved a major step forward for AI.

What about Hinton?

In 1985, Hinton revealed his own contribution to the field – or at least one of them – called the Boltzmann machine.

Named after the 19th-century physicist Ludwig Boltzmann, the concept introduced an element of randomness.

This randomness is ultimately why today’s AI-powered image generators can produce endless variations for the same prompt.
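The element of chance can be illustrated with a single unit that switches on with a probability rather than by a fixed rule. The sketch below assumes the logistic switching rule commonly used to describe Boltzmann machines. The function names, the input value of 0.5 and the random seed are choices made for this example, and one unit is of course far from a full Boltzmann machine.

```python
import math
import random

# Illustrative sketch: one stochastic unit of the kind used in a Boltzmann machine.
# turn_on_probability and sample_unit are hypothetical names for this example.

def turn_on_probability(total_input, temperature=1.0):
    # Logistic rule: the larger the input, the more likely the unit switches on.
    return 1.0 / (1.0 + math.exp(-total_input / temperature))

def sample_unit(total_input, rng, temperature=1.0):
    # The same input can give 0 or 1; only the odds are fixed.
    return 1 if rng.random() < turn_on_probability(total_input, temperature) else 0

rng = random.Random(0)  # seeded so the run can be repeated
samples = [sample_unit(0.5, rng) for _ in range(1000)]
fraction_on = sum(samples) / len(samples)
print(fraction_on)  # close to turn_on_probability(0.5), about 0.62
```

Feeding the unit the same input a thousand times gives a mix of zeros and ones, which is the basic reason a system built on such units can return different results for an identical request.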

Hinton also showed that the more layers a network has, “the more complex its behavior can be.”

This made it possible to “effectively learn a desired behavior,” Francis Bach, a French researcher in machine learning, told AFP.
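A classic textbook illustration of why layers matter is the “exclusive or” (XOR) function, which a single threshold node cannot compute but two layers of nodes can. In the sketch below the weights are hand-picked for the example rather than learned, and the function names are assumptions.

```python
# Illustrative sketch: two layers of simple nodes computing XOR.
# The weights are hand-picked assumptions for this example, not learned values.

def node(inputs, weights, bias):
    # Multiply, add, then apply a threshold.
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def two_layer_xor(a, b):
    # First layer: one node fires for "a or b", the other for "a and b".
    fires_or = node((a, b), (1, 1), -0.5)
    fires_and = node((a, b), (1, 1), -1.5)
    # Second layer: fire when "or" is on but "and" is off.
    return node((fires_or, fires_and), (1, -1), -0.5)

results = [two_layer_xor(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(results)  # [0, 1, 1, 0]
```

Every step here is a multiplication, an addition or a comparison. The extra layer is what lets the combined network do something no single node can.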

What is it for?

Despite these ideas being in place, many scientists lost interest in the field in the 1990s.

Machine learning required extremely powerful computers that could handle large amounts of information. It takes millions of images of dogs for these algorithms to be able to distinguish a dog from a cat.

Physicist John Hopfield enabled neural networks to “store and reconstruct images and other types of patterns in data”.

So it wasn’t until the 2010s that a wave of breakthroughs “revolutionized everything about image processing and natural language processing,” Bach said.

From reading medical scans to steering self-driving cars to forecasting the weather and creating deepfakes, the uses of AI are now too numerous to count.

But is it really physics?

Hinton had already won the Turing Award, considered the Nobel of computing.

But several experts said his was a well-deserved Nobel win in physics, the field that set science on the road that would lead to AI.

French researcher Damien Querlioz stressed that these algorithms were originally “inspired by physics, by transposing the notion of energy into the field of computing”.

Van der Wilk said the first Nobel Prize “for the methodological development of AI” recognized the contribution of the physics community, as well as that of the laureates.

And while ChatGPT can sometimes make AI seem truly creative, it’s important to remember the “machine” part of machine learning.

“There is no magic here,” van der Wilk emphasized.

“At the end of the day, in AI, it’s all multiplication and addition.”

© 2024 AFP

Citation: Neural networks, machine learning? The Science of Nobel Prize-Winning AI Explained (October 8, 2024) retrieved October 8, 2024 from