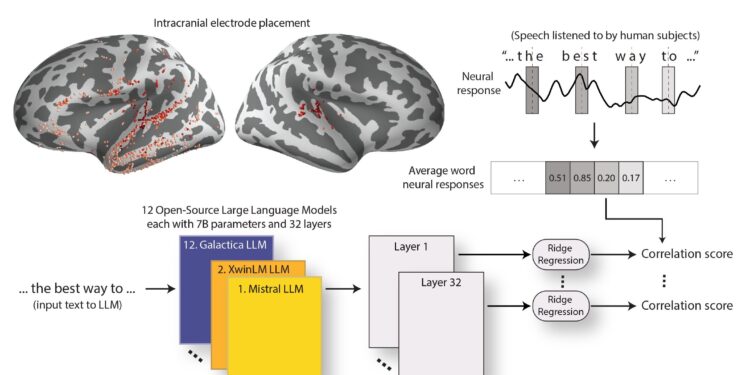

Methodology used to predict brain responses to speech from LLM embeddings in order to assess how similar various LLMs are to the brain. Credit: Gavin Mischler (Figure adapted from Mischler et al., Nature Machine Intelligence, 2024).

Large language models (LLMs), the best known of which is ChatGPT, have become increasingly effective at processing and generating human language in recent years. However, the extent to which these models mimic the neural processes that support language processing in the human brain remains to be elucidated.

Researchers at Columbia University and the Feinstein Institutes for Medical Research at Northwell Health recently conducted a study examining the similarities between LLM representations and neural responses. Their findings, published in Nature Machine Intelligence, suggest that as LLMs become more advanced, they not only perform better but also become more brain-like.

“Our original inspiration for this paper came from the recent explosion in the LLM landscape and neuro-AI research,” Gavin Mischler, first author of the paper, told Tech Xplore.

“A few papers in recent years have shown that GPT-2 word embeddings bear some similarity to word responses recorded from the human brain, but in the rapidly evolving field of AI, GPT-2 is now considered ancient and no longer very powerful.

“Since the release of ChatGPT, many other powerful models have emerged, but little research has been done to explore whether these newer, bigger, better models still exhibit the same brain similarities.”

The main goal of the recent study by Mischler and colleagues was to determine whether the latest LLMs also show these similarities to the human brain. Answering this question could improve our understanding of both artificial intelligence (AI) and the brain, particularly of how each analyzes and produces language.

The researchers looked at 12 different open-source models developed over the past several years, which have nearly identical architectures and similar numbers of parameters. In parallel, they recorded neural responses from neurosurgical patients as the patients listened to speech, using electrodes implanted in their brains as part of their treatment.

“We also gave the text of the same speech to the LLMs and extracted their embeddings, which are essentially the internal representations that the different layers of an LLM use to encode and process the text,” Mischler explained.

“To estimate the similarity between these models and the brain, we tried to predict recorded neural responses to words from word embeddings. The ability to predict brain responses from word embeddings gives us an idea of the similarity between the two.”
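The prediction step Mischler describes is commonly framed as a linear "encoding model": a regression that maps each word's embedding to the neural response recorded for that word, scored by how well it predicts held-out responses. The sketch below assumes ridge regression and a Pearson-correlation score, which are common choices in this literature; the article does not specify the study's exact regression setup. The function names (`fit_ridge`, `encoding_score`) are hypothetical, and the data are synthetic stand-ins, not embeddings or recordings from the study.

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(X, y, alpha):
    """Closed-form ridge weights w = (X^T X + alpha*I)^-1 X^T y.

    No intercept term: assumes roughly zero-mean features and responses,
    which holds for the synthetic data below.
    """
    d = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) + (alpha if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    return solve(xtx, xty)


def predict(X, w):
    return [sum(xi * wi for xi, wi in zip(row, w)) for row in X]


def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def encoding_score(X, y, alpha=1.0, train_frac=0.8):
    """Fit on the first train_frac of words, correlate predictions on the rest."""
    n_train = int(len(X) * train_frac)
    w = fit_ridge(X[:n_train], y[:n_train], alpha)
    return pearson(predict(X[n_train:], w), y[n_train:])


# Synthetic stand-in: 200 "words", 8-dimensional "embeddings", and one
# "electrode" whose response is a noisy linear function of the embedding.
rng = random.Random(0)
n_words, dim = 200, 8
true_w = [rng.gauss(0, 1) for _ in range(dim)]
X = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_words)]
y = [sum(a * b for a, b in zip(row, true_w)) + rng.gauss(0, 0.1) for row in X]
print(f"held-out r = {encoding_score(X, y, alpha=1.0):.2f}")
```

A higher held-out correlation means the embeddings carry more of the information reflected in the recorded responses. In practice, `alpha` would usually be chosen by cross-validation rather than fixed, and the score would be computed per electrode and per LLM layer.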

After collecting their data, the researchers used computational tools to determine how closely the LLMs and the brain were aligned. Specifically, they examined which layers of each LLM corresponded most closely to brain regions involved in language processing, where neural responses to speech are known to gradually “build” linguistic representations by analyzing the acoustic, phonetic, and possibly more abstract components of speech.

“First, we found that as LLMs become more powerful (for example, as they get better at answering questions the way ChatGPT does), their embeddings become more similar to the brain’s neural responses to language,” said Mischler.

“More surprisingly, as LLM performance increases, so does their alignment with the brain’s hierarchy. This means that the amount and type of information extracted in successive brain regions during language processing aligns better with the information extracted by the successive layers of the best-performing LLMs than with that extracted by the layers of low-performing LLMs.”
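One way to make "alignment with the brain's hierarchy" concrete, assumed here for illustration and not necessarily the paper's exact procedure, is to find, for each brain region, the LLM layer whose embeddings best predict that region's responses, and then check whether those peak layers rise as one moves up the processing hierarchy. The sketch below measures this with a Spearman rank correlation. The helper names (`peak_layer`, `hierarchy_alignment`) are hypothetical, and the score matrix is toy data, not results from the study.

```python
import math


def pearson(a, b):
    """Pearson correlation; returns NaN if either input is constant."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return float("nan")
    return cov / math.sqrt(va * vb)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(average_ranks(a), average_ranks(b))


def peak_layer(layer_scores):
    """Index of the layer with the highest prediction score."""
    return max(range(len(layer_scores)), key=lambda i: layer_scores[i])


def hierarchy_alignment(region_scores):
    """region_scores[r][l]: prediction score of layer l for region r,
    with regions listed from earliest to latest in the assumed hierarchy."""
    peaks = [peak_layer(scores) for scores in region_scores]
    return spearman(list(range(len(region_scores))), peaks)


# Toy scores for 3 regions x 4 layers: each later region peaks at a deeper layer.
toy_scores = [
    [0.30, 0.20, 0.10, 0.05],
    [0.10, 0.30, 0.20, 0.10],
    [0.05, 0.15, 0.25, 0.20],
]
print(hierarchy_alignment(toy_scores))  # prints 1.0
```

Under this assumed measure, a value near 1 means later brain regions are best predicted by deeper layers, and the finding Mischler describes would correspond to that value being higher for better-performing LLMs.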

The results gathered by this team of researchers suggest that the best performing LLMs more accurately reflect the brain responses associated with language processing. Additionally, their better performance appears to be due to the greater efficiency of their earlier layers.

“These findings have various implications, one of which is that the modern approach to LLM architectures and training drives these models toward the same principles employed by the human brain, which is incredibly specialized in language processing,” said Mischler.

“Whether because there are certain fundamental principles that underlie the most efficient way to understand language, or simply by chance, it appears that natural and artificial systems are converging on a similar method of processing language.”

This recent work by Mischler and colleagues could pave the way for further studies comparing LLM representations with the neural responses associated with language processing. Collectively, these research efforts could inform the development of future LLMs, helping to ensure that they better align with human mental processes.

“I think the brain is so interesting because we don’t yet fully understand how it does what it does, and its language processing ability is uniquely human,” Mischler added. “At the same time, LLMs remain, in some ways, a black box, even though they are capable of doing amazing things. So we want to try to use LLMs to understand the brain and vice versa.

“We now have new hypotheses about the importance of early layers in high-performing LLMs. And by extrapolating the trend of better LLMs showing better brain matching, these results may suggest ways to make LLMs more powerful by explicitly making them more brain-like.”

More information:

Gavin Mischler et al., Contextual feature extraction hierarchies converge in large language models and the brain, Nature Machine Intelligence (2024). DOI: 10.1038/s42256-024-00925-4.

© 2024 Science X Network

Citation: LLMs become more brain-like as they progress, researchers discover (December 18, 2024) retrieved December 19, 2024 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for informational purposes only.