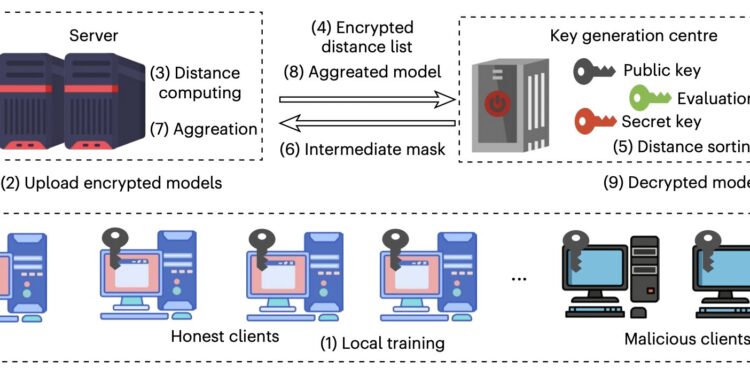

Overview of Lancelot. The key generation center generates cryptographic keys: a secret key (sk) for decrypting ciphertexts, a public key (pk) for encrypting data, and an evaluation key (evk) for homomorphic operations (e.g., multiplication or rotation of ciphertexts). pk is securely shared with clients and evk with the server. The key generation center manages key generation, robust processing of aggregation rules, and decryption of the aggregate model. The client entity encrypts its models and sends them to the server, which processes the models in encrypted form using evk. Credit: Jiang et al. (Nature Machine Intelligence, 2025).

Federated learning is a machine learning technique that allows multiple individuals, called “clients,” to train a model collaboratively, without sharing raw training data with each other. This “shared training” approach could be particularly beneficial for training machine learning models designed to accomplish tasks in financial and healthcare contexts, without accessing people’s personal data.

Despite its potential, federated learning has been shown in previous studies to be vulnerable to so-called poisoning attacks. In these attacks, malicious users submit corrupted data or model updates, which degrades the performance of the shared model.

One proposed approach to minimizing the effects of corrupted data or updates on a model’s performance is known as Byzantine-robust federated learning. This approach relies on mathematical aggregation strategies that ensure unreliable updates are ignored, but it does not prevent attackers from reconstructing sensitive information that neural networks retain about their training data.
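To make the idea of robust aggregation concrete, the following is a minimal plaintext sketch comparing a plain average with a coordinate-wise median, one well-known robust rule. It is only an illustration: the article does not say which aggregation rule Lancelot uses, and the function names and toy numbers here are assumptions.

```python
import numpy as np

def mean_aggregate(updates):
    """Plain averaging: a single extreme update can drag the result away."""
    return np.mean(updates, axis=0)

def median_aggregate(updates):
    """Coordinate-wise median: one illustrative robust rule (an assumption,
    not necessarily the rule used in Lancelot)."""
    return np.median(updates, axis=0)

# Four honest clients send similar updates; one malicious client sends garbage.
honest = np.array([[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]])
poisoned = np.array([[100.0, -100.0]])
updates = np.vstack([honest, poisoned])

mean_result = mean_aggregate(updates)      # pulled far from the honest updates
median_result = median_aggregate(updates)  # stays close to the honest updates
print("mean:  ", mean_result)
print("median:", median_result)
```

The median stays near the honest updates because a single outlier cannot move the middle value of each coordinate, whereas the mean shifts in proportion to the outlier's size.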

Researchers from the Chinese University of Hong Kong, the City University of Hong Kong and other institutes have recently developed an efficient Byzantine-robust federated learning system that also integrates advanced cryptographic techniques, thereby reducing the risk of both poisoning attacks and personal data breaches. This new system, called Lancelot, was presented in a paper published in Nature Machine Intelligence.

“We set out to solve a problem we have regularly seen in regulated domains: federated learning can resist malicious participants through robust aggregation, and fully homomorphic encryption can keep updates secret, but doing both at the same time has been too slow to use,” Siyang Jiang, first author of the paper, told Tech Xplore. “Our goal was to create a system that remains reliable even when some clients try to poison the model, that keeps every update encrypted from start to finish, and that is fast enough for everyday work.”

Lancelot, the system developed by Jiang and his colleagues, keeps the local updates made to a model encrypted, while selecting trustworthy client updates without revealing that selection to anyone else. The system also requires less computation, relying on two cryptographic optimizations and offloading the heaviest mathematical operations to graphics processing units (GPUs).

“In short, Lancelot addresses the privacy and security gaps in federated learning while significantly reducing training time,” Jiang explained. “Lancelot has three roles that work together. The clients train on their own data and send only encrypted model updates. The central server, which follows the rules (it is honest) but may be curious, works directly on the encrypted data to measure the similarity of the updates and combine them.”

In the team’s system, the secret key used to decrypt data is held by the third role, a separate and trusted key generation center. This center decrypts only the information needed to rank clients by their trustworthiness, then returns an encrypted “mask” (i.e., a hidden list of the clients whose updates should be included in training). This ultimately allows the server to aggregate reliable updates for model training without knowing which clients were chosen.

“The core idea is this encrypted mask-based sorting: instead of doing slow comparisons on the encrypted data, the trusted center does the sorting and returns only the hidden selection,” Jiang explained.
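The division of labor described above can be sketched in plaintext as follows. This is a rough illustration only: nothing here is encrypted, the distance-based scoring rule is an assumed stand-in for whatever similarity measure the system actually uses, and all function names are hypothetical. In the real system the distances and the mask would be ciphertexts, and only the key generation center could read the distances.

```python
import numpy as np

def server_pairwise_distances(updates):
    """Server role: squared distances between client updates.
    (Plaintext stand-in for a computation done on encrypted updates.)"""
    diffs = updates[:, None, :] - updates[None, :, :]
    return np.sum(diffs ** 2, axis=-1)

def key_center_make_mask(distances, num_selected):
    """Key generation center role: rank clients and return a 0/1 mask.
    Scoring by total distance to all other clients is an assumption."""
    scores = distances.sum(axis=1)
    chosen = np.argsort(scores)[:num_selected]
    mask = np.zeros(len(scores))
    mask[chosen] = 1.0
    return mask

def server_masked_aggregate(updates, mask, num_selected):
    """Server role: multiply by the mask and average. With an encrypted
    mask, the server would not learn which entries are 1."""
    return (mask[:, None] * updates).sum(axis=0) / num_selected

updates = np.array([[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0],
                    [100.0, -100.0]])  # last client is malicious
distances = server_pairwise_distances(updates)
mask = key_center_make_mask(distances, num_selected=3)
aggregate = server_masked_aggregate(updates, mask, num_selected=3)
print("mask:", mask)
print("aggregate:", aggregate)
```

The point of the design is that sorting happens once, in the clear, at the trusted party, while the server only ever performs multiplications and additions, which are the operations homomorphic encryption handles comparatively cheaply.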

“To make the system fast, we use two simple but powerful cryptographic techniques. First, we adopt lazy relinearization to reduce the number of relinearizations and thus decrease the computational load. Second, dynamic hoisting groups and parallelizes repeated operations so that they run more efficiently. We also shift heavy encrypted operations, such as polynomial multiplications, to graphics processing units for large-scale parallelism.”
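The saving from lazy relinearization can be illustrated with a toy cost model. In schemes such as CKKS, multiplying two ciphertexts produces a larger ciphertext that is normally shrunk back with a relinearization step; because enlarged ciphertexts can still be added together, a sum of products needs only one relinearization at the end. The classes below are hypothetical and do no real cryptography: they only track ciphertext size and count relinearizations.

```python
from dataclasses import dataclass

@dataclass
class ToyCiphertext:
    value: float   # plaintext stand-in for the encrypted value
    size: int = 2  # number of polynomial components (2 when fresh)

class ToyEvaluator:
    """Hypothetical evaluator that only counts relinearizations."""
    def __init__(self):
        self.relin_count = 0

    def multiply(self, a, b):
        if a.size != 2 or b.size != 2:
            raise ValueError("toy model multiplies size-2 ciphertexts only")
        return ToyCiphertext(a.value * b.value, size=3)

    def add(self, a, b):
        return ToyCiphertext(a.value + b.value, size=max(a.size, b.size))

    def relinearize(self, a):
        self.relin_count += 1
        return ToyCiphertext(a.value, size=2)

def eager_inner_product(ev, xs, ys):
    """Relinearize after every multiplication."""
    acc = None
    for x, y in zip(xs, ys):
        term = ev.relinearize(ev.multiply(x, y))
        acc = term if acc is None else ev.add(acc, term)
    return acc

def lazy_inner_product(ev, xs, ys):
    """Add the size-3 products first, then relinearize once."""
    acc = None
    for x, y in zip(xs, ys):
        term = ev.multiply(x, y)
        acc = term if acc is None else ev.add(acc, term)
    return ev.relinearize(acc)

xs = [ToyCiphertext(v) for v in (1.0, 2.0, 3.0)]
ys = [ToyCiphertext(v) for v in (4.0, 5.0, 6.0)]
eager_ev, lazy_ev = ToyEvaluator(), ToyEvaluator()
eager = eager_inner_product(eager_ev, xs, ys)
lazy = lazy_inner_product(lazy_ev, xs, ys)
print(eager.value, eager_ev.relin_count)
print(lazy.value, lazy_ev.relin_count)
```

Both versions compute the same inner product, but the lazy version performs one relinearization instead of one per term. The second technique mentioned in the quote, and the GPU offloading, are not modeled here.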

The design proposed by these researchers ensures that every update submitted by clients remains confidential throughout the federated learning process. The team found that it protects the system from malicious or faulty clients, while significantly reducing the time required to train models.

“Our work provides the first practical system that truly combines Byzantine-robust federated learning (BRFL) with fully homomorphic encryption,” Jiang said. “Instead of performing lots of slow comparisons on encrypted data, we use encrypted mask-based sorting: a trusted party sorts the clients’ updates and returns only an encrypted selection list, so the server can combine the right updates without ever seeing who was chosen. Two simple ideas make this effective in practice: lazy relinearization defers an expensive cryptographic step until the end, and dynamic hoisting groups and parallelizes repeated operations. By running the heavy encrypted math on graphics processing units, these changes reduce processing time and move data through memory much faster.”

In the future, the Byzantine-robust federated learning system developed by this research team could be used to train models for various applications. Most notably, it could support the development of AI tools that improve the efficiency of operations in hospitals, banks, and other organizations that store sensitive information. Jiang and his colleagues are currently working to improve Lancelot, which is still in its pilot version, so that it can be scaled up and deployed in real-world settings.

“In parallel, we are exploring threshold and multi-key CKKS to strengthen the trust model without exploding bandwidth or latency, while keeping Byzantine-robust federated learning practical at scale,” Jiang added. “We are also deepening the combination with differential privacy and adding asynchronous and clustered aggregation, so that the system gracefully handles highly heterogeneous clients and unstable networks.”

Written by Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Robert Egan.

More information:

Siyang Jiang et al, Towards computationally efficient Byzantine-robust federated learning with fully homomorphic encryption, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01107-6.

© 2025 Science X Network

Citation: Lancelot federated learning system combines encryption and robust aggregation to resist poisoning attacks (October 14, 2025), retrieved October 15, 2025 from
