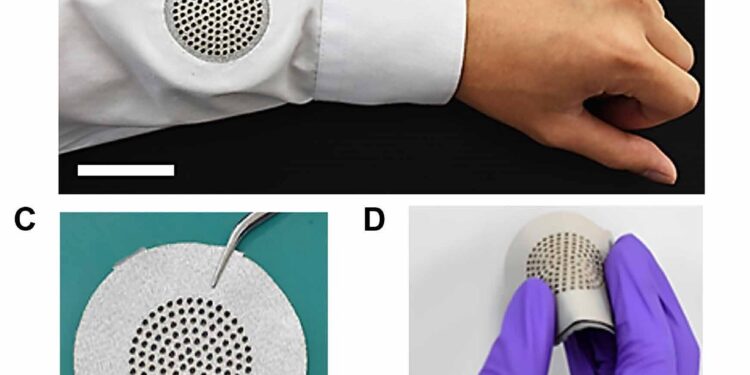

DL-compatible A-Textile. (B) Photographic image showing the AT textile-integrated garment for speech perception. Scale bar, 4 cm. (C) Photographic image showing the developed AT textile (dimensions, 3.3 cm by 3.3 cm). Scale bar, 2 cm. (D) Photographic image showing the flexibility of the AT textile. Scale bar, 2 cm. Credit: Science Advances (2025). DOI: 10.1126/sciadv.adx3348

There may soon be a new way to interact with your favorite AI chatbots: through the clothes you wear. An international team of researchers has developed a voice-sensing fabric called A-Textile. This flexible patch of smart material turns everyday clothing into a kind of microphone, letting you speak commands directly to what you're wearing and communicate with AI systems such as ChatGPT or smart home devices.

Wearable devices that sense and interact with the world around us have long been the stuff of science fiction. However, the sensors currently in use are often bulky, rigid and uncomfortable. They also lack sensitivity: they struggle to pick up soft or normal-volume speech, which makes it hard for the AI to understand commands.

The researchers tackled this problem by exploiting triboelectricity, the effect behind static electricity. A-Textile is a multi-layered fabric: when its layers move, they rub against each other and build up a tiny electrostatic charge. When you speak, sound waves make the charged layers vibrate slightly, generating an electrical signal that represents your voice. To strengthen this signal, the team embedded flower-shaped nanoparticles in the fabric that help capture the charge and prevent it from dissipating. This keeps the signal clear enough for the AI to recognize.
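The article does not give the device physics, but a common textbook description of a contact-separation triboelectric generator is a parallel-plate model in which the open-circuit voltage is proportional to the surface charge density and the gap between the layers, V_oc = σ·x/ε0. The Python sketch below assumes that idealized model (which may not match A-Textile's actual architecture) and hypothetical parameter values. It lets a pure tone wobble the gap and shows why retaining charge matters: halving σ, as a crude stand-in for charge leaking away without the nanoparticles, halves the voltage swing that encodes the voice.

```python
import math

EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def open_circuit_voltage(sigma: float, gap: float) -> float:
    """Idealized contact-separation model: V_oc = sigma * gap / epsilon_0.

    sigma: triboelectric surface charge density (C/m^2)
    gap:   separation between the two charged layers (m)
    """
    return sigma * gap / EPSILON_0


def voice_waveform(sigma, rest_gap, amplitude, freq_hz, sample_rate, duration_s):
    """Sample V_oc while a pure tone makes the gap oscillate around rest_gap."""
    n_samples = int(sample_rate * duration_s)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        gap = rest_gap + amplitude * math.sin(2 * math.pi * freq_hz * t)
        samples.append(open_circuit_voltage(sigma, gap))
    return samples


def peak_to_peak(samples):
    """Voltage swing carried by the sound vibration."""
    return max(samples) - min(samples)


# Hypothetical values chosen only for illustration, not taken from the paper.
strong = voice_waveform(sigma=2e-5, rest_gap=1e-6, amplitude=1e-7,
                        freq_hz=200, sample_rate=8000, duration_s=0.05)
leaky = voice_waveform(sigma=1e-5, rest_gap=1e-6, amplitude=1e-7,
                       freq_hz=200, sample_rate=8000, duration_s=0.05)
print(f"{peak_to_peak(strong):.3f} V vs {peak_to_peak(leaky):.3f} V")  # 0.452 V vs 0.226 V
```

Because the model is linear in σ, any mechanism that keeps more charge on the layers scales the whole voice signal up proportionally; real devices add effects (charge decay, parasitic capacitance, load resistance) that this sketch ignores.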

DL-compatible A-Textile used to access a generative AI chatbot. Credit: Science Advances (2025). DOI: 10.1126/sciadv.adx3348

The signal is then sent wirelessly to a device, such as a smartphone or computer, running a deep learning model developed by the team that recognizes the commands in the signals the fabric generates. One of the best aspects of this technology is that it doesn't require redesigning clothing from scratch. An A-Textile patch can simply be sewn or attached to an ordinary garment, for example on a shirt collar or sleeve.
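The article does not describe the model's architecture or output format. As a minimal sketch, assuming the model emits one raw score (logit) per command from a fixed vocabulary, the final step from recognition to action might look like the Python below. The command names, the 0.8 confidence threshold and the handler functions are all hypothetical; the deep learning model itself is not shown, and hard-coded scores stand in for its output.

```python
import math
from typing import Callable, Dict, List, Optional

# Hypothetical command vocabulary; the label set used in the paper is not given here.
COMMANDS = ["lamp_on", "lamp_off", "ask_chatbot"]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw model scores into probabilities (max-shifted for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def recognize(logits: List[float], threshold: float = 0.8) -> Optional[str]:
    """Return the most likely command, or None if the model is not confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return COMMANDS[best] if probs[best] >= threshold else None


def dispatch(command: Optional[str], handlers: Dict[str, Callable[[], str]]) -> str:
    """Route a recognized command to its action; low-confidence input is ignored."""
    if command is None:
        return "ignored"
    return handlers[command]()


# Hypothetical actions standing in for a smart-lamp API or a chatbot request.
handlers = {
    "lamp_on": lambda: "lamp switched on",
    "lamp_off": lambda: "lamp switched off",
    "ask_chatbot": lambda: "forwarding request to chatbot",
}

# Scores stand in for the output of the team's deep learning model.
print(dispatch(recognize([4.0, 0.5, 0.2]), handlers))  # lamp switched on
print(dispatch(recognize([1.0, 0.9, 0.8]), handlers))  # ignored
```

Rejecting low-confidence predictions is one common way to avoid triggering an action on background noise or ambiguous speech; whether the team's system uses such a threshold is not stated in the article.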

“This work develops voice-AI clothing by combining advanced materials, device design, and DL (deep learning) to transform everyday clothing into intuitive voice-AI interfaces,” the researchers wrote in a paper on their technology published in the journal Science Advances.

Photographic image of the A-Textile showing a weight of 7.59 g. Credit: Science Advances (2025). DOI: 10.1126/sciadv.adx3348

Putting A-Textile to the Test

In testing, the A-Textile prototype generated an output of up to 21 volts and recognized commands with 97.5% accuracy, even in noisy environments. The system could also interact directly with ChatGPT and control smart home appliances, such as turning a lamp on and off, using only voice commands.

But these applications may be just the beginning, according to the team. “It is believed that the integration of imperceptible voice AI systems into clothing will provide easy access to AI while offering previously unexplored application prospects in various fields such as healthcare, fitness monitoring and personalized assistance.”

Written for you by our author Paul Arnold, edited by Lisa Lock, and fact-checked and edited by Robert Egan, this article is the result of painstaking human work.

More information:

Beibei Shao et al., Deep learning-based triboelectric acoustic textile for voice perception and intuitive generative AI voice access on clothing, Science Advances (2025). DOI: 10.1126/sciadv.adx3348

© 2025 Science X Network

Citation: How a fabric patch uses static electricity in your clothes to let you chat with AI and control smart devices (2025, October 14) retrieved October 15, 2025 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for informational purposes only.