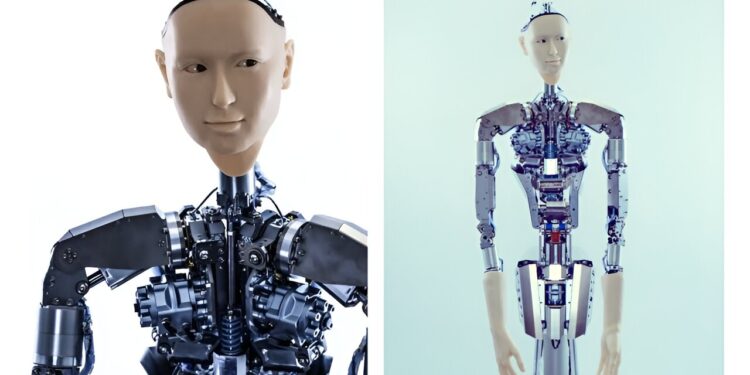

Alter3 body. The body has 43 axes controlled by pneumatic actuators. It is equipped with a camera inside each eye. The control system sends commands through a serial port to control the body. The refresh rate is 100-150ms. Credit: arXiv (2023). DOI: 10.48550/arxiv.2312.06571

A team of researchers from the University of Tokyo has built a bridge between large language models and robots that promises more human-like gestures while breaking away from traditional hardware-dependent controls.

Alter3 is the latest version of a humanoid robot first deployed in 2016. Researchers are now using GPT-4 to guide the robot through various simulations, like taking a selfie, throwing a ball, eating popcorn, and playing air guitar.

Previously, such actions would have required specific coding for each activity, but the integration of GPT-4 introduces new, expanded capabilities to robots that learn from natural language instruction.

AI-powered robots “primarily aim to facilitate basic communication between life and robots within a computer, using LLMs to interpret and simulate realistic responses,” researchers said in a recent study.

“Direct control is (now) achievable by mapping linguistic expressions of human actions onto the robot body via program code,” they said. They called this advancement a “paradigm shift.”

Alter3, which is capable of complex upper body movements, including detailed facial expressions, has 43 axes simulating human musculoskeletal movements. It sits on a pedestal and cannot walk (although it can imitate walking).

Coding the coordination of so many joints was a mammoth undertaking involving highly repetitive work.

“Thanks to LLM, we are now free from iterative work,” say the authors.

Now they can simply provide verbal instructions describing the desired movements and prompt the LLM to generate Python code that runs the android engine.
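The prompting step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the prompt wording, the helper names `build_motion_prompt` and `request_motion_code`, the `set_axis` function mentioned in the prompt, and the stand-in `fake_llm` are all hypothetical. Only the 43-axis count and the idea of asking for Python code come from the article.

```python
from typing import Callable


def build_motion_prompt(description: str, num_axes: int = 43) -> str:
    """Assemble a prompt asking an LLM for Python code that moves the robot.

    The wording and the set_axis() helper named here are assumptions
    made for illustration; the article does not give the real prompt.
    """
    return (
        f"You control a humanoid robot with {num_axes} axes.\n"
        "Write Python code that calls set_axis(axis, value) to perform "
        "the following movement:\n"
        f"{description}\n"
    )


def request_motion_code(description: str, llm: Callable[[str], str]) -> str:
    """Send the prompt to any text-in, text-out callable and return its reply."""
    return llm(build_motion_prompt(description))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model so the sketch runs offline."""
    return "set_axis(1, 255)  # placeholder reply"


if __name__ == "__main__":
    print(request_motion_code("Take a selfie with your phone.", fake_llm))
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client library; a real setup would replace `fake_llm` with a call to GPT-4.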

Alter3 stores activities in memory and researchers can refine and adjust its actions, leading to faster, smoother and more precise movements over time.

The authors provide an example of the natural language instructions given to Alter3 to take a selfie:

Create a big, happy smile and widen your eyes to show your enthusiasm.

Quickly turn your upper body slightly to the left, adopting a dynamic posture.

Raise your right hand high, simulating a telephone.

Bend your right elbow to bring the phone closer to your face.

Tilt your head slightly to the right, giving a playful vibe.
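To make the idea concrete, the selfie instructions above might translate into code along the following lines. This is only a sketch under stated assumptions: the axis numbers, the 0 to 255 value range, and the `set_axis` helper are hypothetical, since the article does not describe Alter3's actual axis map or command format. Here the commands are recorded in a list rather than sent over the serial port.

```python
from typing import List, Tuple

NUM_AXES = 43  # axis count given in the article

# Hypothetical axis numbers, chosen only for illustration.
AXIS_SMILE = 1
AXIS_EYES_OPEN = 2
AXIS_TORSO_YAW = 10
AXIS_RIGHT_SHOULDER = 20
AXIS_RIGHT_ELBOW = 21
AXIS_HEAD_ROLL = 5

# Recorded (axis, value) pairs; real hardware would receive these over serial.
commands: List[Tuple[int, int]] = []


def set_axis(axis: int, value: int) -> None:
    """Record one axis command after checking the assumed ranges."""
    if not 1 <= axis <= NUM_AXES:
        raise ValueError(f"axis {axis} is outside 1..{NUM_AXES}")
    if not 0 <= value <= 255:  # assumed value range
        raise ValueError(f"value {value} is outside 0..255")
    commands.append((axis, value))


def take_selfie() -> None:
    """Issue one command per instruction from the article's selfie example."""
    set_axis(AXIS_SMILE, 255)           # big, happy smile
    set_axis(AXIS_EYES_OPEN, 255)       # widen the eyes
    set_axis(AXIS_TORSO_YAW, 100)       # turn upper body slightly left
    set_axis(AXIS_RIGHT_SHOULDER, 230)  # raise right hand high
    set_axis(AXIS_RIGHT_ELBOW, 180)     # bend elbow toward the face
    set_axis(AXIS_HEAD_ROLL, 150)       # tilt head slightly right


if __name__ == "__main__":
    take_selfie()
    for axis, value in commands:
        print(f"axis {axis:2d} -> {value}")
```

Keeping every movement as a plain `(axis, value)` pair also makes it easy to store a finished gesture and adjust individual values later.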

The use of LLMs in robotics research “redefines the boundaries of human-robot collaboration, paving the way for more intelligent, adaptable and customizable robotic entities,” the researchers said.

They injected some humor into Alter3’s activities. In one scenario, the robot pretends to consume a bag of popcorn only to learn that it belongs to the person sitting next to it. Facial expressions and exaggerated arm gestures express surprise and embarrassment.

Equipped with a camera in each eye, Alter3 can “see” humans. Researchers discovered that Alter3 can fine-tune its behavior by observing human responses. They compared this learning to neonatal imitation, which behaviorists observe in newborns.

The zero-shot learning capability of GPT-4-connected robots “has the potential to redefine the boundaries of human-robot collaboration, paving the way for more intelligent, adaptable and customizable robotic entities,” the researchers said.

The paper “From Text to Motion: Grounding GPT-4 in a Humanoid Robot ‘Alter3’,” authored by Takahide Yoshida, Atsushi Masumori and Takashi Ikegami, is available on the preprint server arXiv.

More information:

Takahide Yoshida et al, From Text to Motion: Grounding GPT-4 in a Humanoid Robot “Alter3”, arXiv (2023). DOI: 10.48550/arxiv.2312.06571

Project page: tnoinkwms.github.io/ALTER-LLM/


© 2023 Science X Network

Citation: GPT-4-driven robot takes selfies and “eats” popcorn (December 19, 2023) retrieved December 19, 2023 from

This document is subject to copyright. Apart from fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for information only.