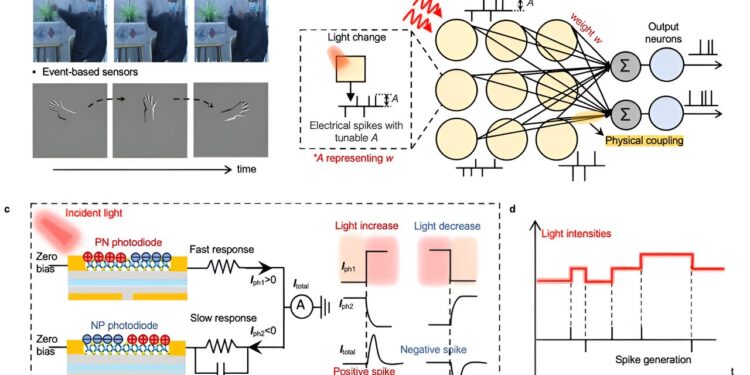

State-of-the-art neural network in the event-driven sensor designed by the researchers. A) Comparison between frame-based and event-based vision sensors. B) The state-of-the-art in-sensor neural network designed by combining the advantages of event-driven characteristics and in-sensor computing. C) Pixel circuit designed to realize programmable event-driven characteristics. D) The relationship between the input light intensity and the output spikes in the designed pixel. Credit: Zhou et al.

Neuromorphic vision sensors are sensing devices that respond automatically to changes in their environment, such as changes in the brightness of their surroundings. These sensors mimic the functioning of the human nervous system, artificially reproducing the ability of sensory neurons to respond preferentially to changes in the sensed environment.

Typically, these sensors capture only the dynamic motion in a scene, which is then transmitted to a computing unit that analyzes it and attempts to recognize it. Such system designs, in which the sensors and the computing units that process their data are physically separated, can introduce latency in processing the sensor data and increase power consumption.

Researchers from Hong Kong Polytechnic University, Huazhong University of Science and Technology, and Hong Kong University of Science and Technology recently developed novel event-driven vision sensors that capture dynamic motion and can also convert it into programmable spike signals. These sensors, presented in a paper published in Nature Electronics, eliminate the need to transfer data from sensors to computing units, enabling greater energy efficiency and faster analysis of the captured motion.

“The near-sensor and in-sensor computing architecture effectively reduces data transfer latency and power consumption by directly performing computational tasks near or inside sensory terminals,” Yang Chai, co-author of the paper, told Tech Xplore. “Our research group is dedicated to studying novel custom devices for near-sensor and in-sensor computing. However, we found that existing work focuses on conventional frame-based sensors, which generate a lot of redundant data.”

Recent advances in artificial neural networks (ANNs) have opened new opportunities for the development of neuromorphic sensing devices and image recognition systems. As part of their recent study, Chai and his colleagues set out to explore the potential of combining event-based sensors with spiking neural networks (SNNs), ANNs that mimic the firing patterns of neurons.

“The combination of event-based sensors and a spiking neural network (SNN) for motion analysis can effectively reduce redundant data and efficiently recognize motion,” Chai said. “Thus, we propose a hardware architecture with dual-photodiode pixels with the functions of event-based sensors and synapses that can realize in-sensor SNN.”

In-sensor spiking neural network (SNN) simulation for motion recognition presented in the team’s paper. A) Illustration of the event-driven SNN pixel network and the corresponding circuit diagram of a single pixel and an output neuron. B) The output photocurrent Itotal after the pixel detection process. C) The photoresponsivity distribution of each subpixel array after training. D) Output spikes generated by the output neurons when left-hand waving, right-hand waving, and arm rotation are performed sequentially. Credit: Zhou et al.

The new computational event-driven vision sensors developed by Chai and his colleagues are capable of both detecting events and performing computations. In essence, each pixel generates programmable spikes in response to local changes in the light intensity it records.

“The event-driven feature is obtained by using two branches with opposite photoresponse and different photoresponse times that generate the event-driven spike signals,” Chai explained. “The synaptic feature is achieved by photodiodes with different photoresponsivities that allow precise modulation of the amplitude of the spike signals, emulating different synaptic weights in an SNN.”
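The mechanism Chai describes can be illustrated with a minimal numerical sketch. It is not the authors' circuit model: it simply assumes that each branch behaves like a first-order low-pass filter, that the two branches have opposite sign, and that a single scalar `responsivity` scales the output. The function name `pixel_response` and the parameters `tau_fast`, `tau_slow`, and `dt` are hypothetical and chosen only for illustration.

```python
def pixel_response(intensity, responsivity=1.0, tau_fast=1.0, tau_slow=10.0, dt=1.0):
    """Toy model of a two-branch event-driven pixel (illustrative assumption).

    The fast and slow branches both track the light intensity, but with
    different response times. They contribute with opposite sign, so they
    cancel under constant light and leave a transient spike after a change.
    """
    fast = slow = intensity[0]  # assume both branches start settled
    output = []
    for light in intensity:
        # First-order relaxation of each branch toward the current light level.
        fast += (light - fast) * min(1.0, dt / tau_fast)
        slow += (light - slow) * min(1.0, dt / tau_slow)
        # Opposite-sign branches; responsivity sets the spike amplitude.
        output.append(responsivity * (fast - slow))
    return output


# Light steps from 1.0 to 2.0 at sample 5 and then stays constant.
light = [1.0] * 5 + [2.0] * 30
weak = pixel_response(light, responsivity=0.5)
strong = pixel_response(light, responsivity=1.0)
```

In this toy model a static scene produces no output, a brightness step produces a spike that decays as the slow branch catches up, and changing `responsivity` rescales the spike amplitude, which is the role the quote assigns to synaptic weights.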

The researchers evaluated their sensors in a series of initial tests and found that they effectively mimic the processes by which neurons in the brain adapt to changes in visual scenes. Notably, these sensors reduce the amount of data collected, while also eliminating the need to transfer this data to an external computing unit.

“Our work proposes a method to detect and process the scene by capturing the local change of light intensity at the pixel level, thereby realizing an in-sensor SNN instead of a conventional ANN,” Chai said. “Such a design combines the advantages of event-based sensors and in-sensor computing, suitable for dynamic real-time information processing, such as autonomous driving and intelligent robots.”
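To make the idea of an SNN computed inside the sensor more concrete, the sketch below sums weighted pixel events into a total current per output neuron and passes it to a leaky integrate-and-fire neuron. This is an illustrative assumption rather than the network from the paper: the leaky integrate-and-fire rule, the function name `run_in_sensor_snn`, the parameters `threshold` and `leak`, and the example weights are all hypothetical.

```python
def run_in_sensor_snn(events, weights, threshold=1.0, leak=0.9):
    """Toy in-sensor SNN (illustrative assumption, not the published design).

    events:  list of time steps, each a list of per-pixel events (+1, -1 or 0)
    weights: one row per output neuron, one weight per pixel; the weights
             stand in for the programmable pixel responsivities
    Returns, for every time step, a list of 0/1 spikes per output neuron.
    """
    potentials = [0.0] * len(weights)
    spikes = []
    for frame in events:
        step = []
        for j, row in enumerate(weights):
            # Summed weighted pixel output feeding output neuron j.
            i_total = sum(w * e for w, e in zip(row, frame))
            # Leaky integration of the summed current.
            potentials[j] = leak * potentials[j] + i_total
            if potentials[j] >= threshold:
                step.append(1)
                potentials[j] = 0.0  # reset after firing
            else:
                step.append(0)
        spikes.append(step)
    return spikes


# Four pixels, two output neurons: neuron 0 listens to the left pixel pair,
# neuron 1 to the right pair (made-up weights for the example).
weights = [[0.6, 0.6, 0.0, 0.0],
           [0.0, 0.0, 0.6, 0.6]]
left_motion = run_in_sensor_snn([[1, 1, 0, 0]], weights)
static_scene = run_in_sensor_snn([[0, 0, 0, 0]] * 3, weights)
```

Here a static scene generates no events and therefore no output spikes, while motion over the left pixel pair drives only the first output neuron. Only the output spikes would need to leave the sensor, which is the data reduction the researchers emphasize.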

In the future, the computational event-driven vision sensors developed by Chai and his colleagues could be refined and tested in additional experiments to better assess their value for real-world applications. This recent work could also inspire other research groups, potentially paving the way for new sensing technologies that combine event-driven sensors and SNNs.

“In the future, our group will focus on the network-level realization and on the technology for integrating a network of computing sensors with CMOS circuits to demonstrate a complete in-sensor computing system,” Chai added. “In addition, we will try to develop a benchmark to define device metric requirements for different applications and evaluate the performance of the in-sensor computing system quantitatively.”

More information:

Yue Zhou et al, Event-driven computer vision sensors for sensor-integrated spiking neural networks, Nature Electronics (2023). DOI: 10.1038/s41928-023-01055-2

© 2023 Science X Network

Citation: Event-driven computer vision sensors that convert motion into spike signals (December 20, 2023) retrieved December 20, 2023 from

This document is subject to copyright. Apart from fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for information only.