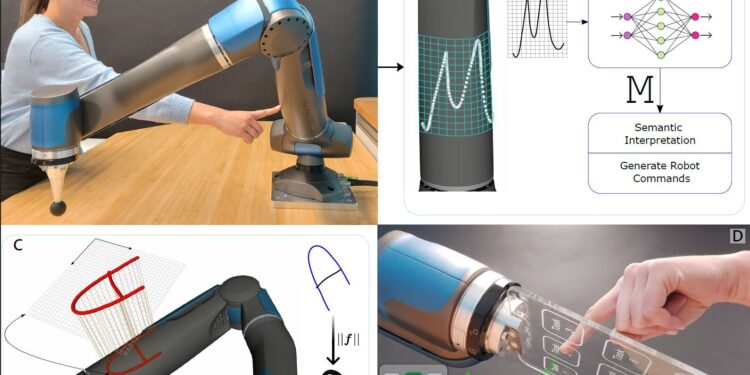

The intrinsic sense of touch. Writing or drawing on the robot's structure by touch (A) is automatically interpreted using convolutional neural networks (B). The physical interaction is reconstructed accurately through nonlinear dimensionality reduction using machine learning techniques (C). This intrinsic sense of touch enables various interaction modalities without requiring dedicated tactile sensors (D). Credit: Maged Iskandar

A team of roboticists from the Institute of Robotics and Mechatronics at the German Aerospace Center (DLR) has found that combining conventional internal force-torque sensors with machine learning algorithms can give robots a new way to sense touch.

In their study, published in the journal Science Robotics, the group describes an entirely new approach to giving robots a sense of touch, one that doesn't involve artificial skin.

For living things, touch works in two directions. When you touch something, you feel its texture, temperature and other characteristics. But you can also be touched, as when someone or something comes into contact with part of your body. In this new study, the research team found a way to mimic this second kind of touch in a robot by combining internal force-torque sensors with a machine learning algorithm.

Recognizing that much of what we sense as touch arrives as torque (pressure on the fingers, for example, is felt as tension in the wrist), the researchers placed ultra-sensitive force-torque sensors in the joints of a robotic arm. These sensors register pressure applied to the arm from several directions at once.
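The physics behind this idea can be illustrated with a minimal sketch: a force pressed on a link of a robotic arm shows up as torques in the joints between the contact point and the base, following the standard relation τ = Jᵀ(q)·F, where J is the Jacobian of the contact point. The planar two-link arm, link lengths and function names below are hypothetical illustrations, not the DLR robot's model or the study's code.

```python
import math

def contact_torques(q1, q2, l1, s, fx, fy):
    """Joint torques (tau1, tau2) caused by an external force (fx, fy)
    applied at distance s along link 2 of a hypothetical planar two-link arm.
    Implements tau = J(q)^T F for the contact point."""
    c1, s1 = math.cos(q1), math.sin(q1)
    c12, s12 = math.cos(q1 + q2), math.sin(q1 + q2)
    # Contact point: x = l1*cos(q1) + s*cos(q1+q2), y = l1*sin(q1) + s*sin(q1+q2)
    # Jacobian entries: partial derivatives of (x, y) with respect to q1 and q2
    j11 = -l1 * s1 - s * s12   # dx/dq1
    j21 = l1 * c1 + s * c12    # dy/dq1
    j12 = -s * s12             # dx/dq2
    j22 = s * c12              # dy/dq2
    # Transpose of the Jacobian times the force vector
    tau1 = j11 * fx + j21 * fy
    tau2 = j12 * fx + j22 * fy
    return tau1, tau2

def locate_perpendicular_push(l1, tau1, tau2):
    """Special case (assumption): straight arm (q2 = 0) and a force perpendicular
    to it. Then tau1 = (l1 + s) * F and tau2 = s * F, so tau1 - tau2 = l1 * F
    and the contact distance along link 2 is s = l1 * tau2 / (tau1 - tau2)."""
    return l1 * tau2 / (tau1 - tau2)

# A 2 N upward push 0.5 m along link 2 of a straight, horizontal arm
t1, t2 = contact_torques(0.0, 0.0, 1.0, 0.5, 0.0, 2.0)
print(t1, t2)                                   # 3.0 1.0
print(locate_perpendicular_push(1.0, t1, t2))   # 0.5
```

The point of the example is that the joints "feel" a push they are not touching: the same 2 N force produces 3 N·m at the shoulder and 1 N·m at the elbow, and the ratio between the two readings already encodes where the push happened. Real contacts are rarely this tidy, which is where learned models come in.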

They then used machine learning to teach the robot to interpret the different patterns of strain picked up by these sensors, which allowed it to recognize different touch situations. For example, it could tell when someone was touching a specific area of its arm, so the robot no longer needed to be covered entirely in artificial skin.
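The learning step can be sketched in a deliberately simplified form. The study itself used convolutional neural networks and nonlinear dimensionality reduction; the toy below swaps in a plain nearest-centroid classifier over simulated torque signatures, only to show how a pattern of joint torques can identify which area of an arm was touched. The arm geometry, region names, force range and noise level are all hypothetical assumptions.

```python
import math
import random

L1 = 1.0  # hypothetical length of link 1 (m)

def signature(link, d, force):
    """Joint-torque vector (tau1, tau2) for a perpendicular push on a straight,
    horizontal two-link arm: on link 1 at distance d from joint 1, or on
    link 2 at distance d from joint 2 (simplified toy model)."""
    if link == 1:
        return (d * force, 0.0)            # only joint 1 lies between push and base
    return ((L1 + d) * force, d * force)   # both joints feel a push on link 2

def normalize(tau):
    # Unit-length feature: direction reflects where, magnitude reflects how hard
    n = math.hypot(tau[0], tau[1])
    return (tau[0] / n, tau[1] / n)

# Hypothetical touch regions ("virtual buttons"): (link, min distance, max distance)
REGIONS = {
    "upper_arm": (1, 0.3, 0.9),
    "forearm_near": (2, 0.05, 0.3),
    "forearm_far": (2, 0.6, 0.9),
}

def train(samples_per_region=50, seed=0):
    """Average the normalized torque signatures of simulated pushes per region."""
    rng = random.Random(seed)
    centroids = {}
    for name, (link, lo, hi) in REGIONS.items():
        feats = []
        for _ in range(samples_per_region):
            d = rng.uniform(lo, hi)
            force = rng.uniform(2.0, 10.0)  # push strength varies (N)
            tau = signature(link, d, force)
            # Add small Gaussian sensor noise to each joint reading
            tau = (tau[0] + rng.gauss(0, 0.02), tau[1] + rng.gauss(0, 0.02))
            feats.append(normalize(tau))
        centroids[name] = (sum(f[0] for f in feats) / len(feats),
                           sum(f[1] for f in feats) / len(feats))
    return centroids

def classify(centroids, tau):
    """Return the region whose centroid is closest to the measured torque pattern."""
    f = normalize(tau)
    return min(centroids, key=lambda k: math.dist(f, centroids[k]))

centroids = train()
print(classify(centroids, signature(2, 0.75, 5.0)))  # forearm_far
print(classify(centroids, signature(1, 0.5, 4.0)))   # upper_arm
```

Normalizing the torque vector is the key design choice here: in this toy model the direction of the torque pattern depends on where the arm is pressed, while its magnitude depends on how hard, so the classifier can ignore push strength. A learned model such as the one in the study can capture far more complex, nonlinear relationships than these straight-line centroids.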

Touch recognition. Digits written on the robot's surface are interpreted as machine-readable commands to control the robot intuitively (A). When the touch trajectory for the first digit is applied, it is recognized and the assigned task is executed; applying the third digit likewise triggers its corresponding task. In the same way, virtual function buttons can be placed anywhere on the structure to assign high-level tasks (B). Credit: Maged Iskandar

The researchers found that the machine learning made the arm sensitive enough to identify which of the numbers painted on it was being pressed or, in another case, to recognize numbers a person drew on it with a fingertip.

This approach could open new avenues for interaction with many types of robots, especially those used in industrial environments that work closely alongside human co-workers.

More information:

Maged Iskandar et al., Intrinsic sense of touch for intuitive physical interaction between humans and robots, Science Robotics (2024). DOI: 10.1126/scirobotics.adn4008

© 2024 Science X Network

Citation: Combining existing sensors with machine learning algorithms improves robots' intrinsic sense of touch (2024, September 12) retrieved September 12, 2024 from
