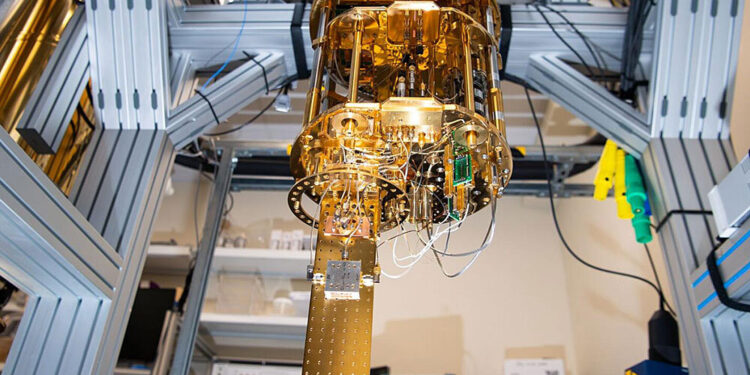

Dilution refrigerators keep superconducting qubits cold. Credit: Andrea Starr | Pacific Northwest National Laboratory

One of the most difficult problems in quantum computing is increasing the size of quantum computers. Researchers around the world are working to solve this “scale challenge.”

To bring quantum scaling closer to reality, researchers from 14 institutions collaborated through the Co-design Center for Quantum Advantage (C2QA), a Department of Energy (DOE) Office of Science National Quantum Information Science Research Center. Together, they built the ARQUIN framework, a pipeline that simulates large-scale distributed quantum computers as a series of distinct layers. Their results were published in ACM Transactions on Quantum Computing.

Connecting qubits

The research team, led by Michael DeMarco of Brookhaven National Laboratory and the Massachusetts Institute of Technology (MIT), began with a standard computing strategy of combining multiple computing “nodes” into a unified computing framework.

In theory, quantum computers could adopt this multi-node approach, but there is a problem. In superconducting quantum systems, qubits must remain incredibly cold. This is usually done with a cryogenic device called a dilution refrigerator. The difficulty is that a quantum computer chip is hard to scale to a large enough size inside a single refrigerator.

Even in larger refrigerators, superconducting electrical circuits within a single chip become difficult to maintain. To create a powerful multi-node quantum computer, researchers not only need to connect the nodes inside a dilution refrigerator, but also connect the nodes between multiple dilution refrigerators.

Assembling quantum ingredients

No single institution could carry out the full scope of research necessary for the ARQUIN framework. The ARQUIN team included researchers from Pacific Northwest National Laboratory (PNNL), Brookhaven, MIT, Yale University, Princeton University, Virginia Tech, IBM, and others.

“A lot of quantum research is done in isolation, with research groups looking at only one piece of the puzzle,” said Samuel Stein, a quantum computer scientist at PNNL. “It’s almost like putting ingredients together without knowing how they will work together in a recipe. When experiments are done on just one aspect of the quantum computer, you don’t see how the results can impact other parts of the system.”

Instead, the ARQUIN team divided the problem of building a multi-node quantum computer into different “layers,” with each institution working on a different layer based on its area of expertise.

“It’s a huge optimization problem,” said Mark Ritter, chairman of the Physical Sciences Board at IBM. “The team had to do a thorough assessment of the field to see where we were in terms of technology and algorithms, then run simulations to find out where the bottlenecks were and what could be improved.”

The ARQUIN framework focused on superconducting quantum devices connected by microwave-to-optical links. Each institution focused on a different ingredient in the quantum computing recipe. For example, while some researchers studied how to optimize microwave-to-optical transduction, others created algorithms that exploit the distributed architecture.

“Such cross-domain systems research is essential for charting roadmaps to useful quantum information processing applications and is only made possible by DOE’s National Quantum Initiatives,” said Professor Isaac Chuang of MIT.

For their part of the ARQUIN framework, PNNL researchers including Stein, Ang Li, and James (Jim) Ang designed and built the simulation pipeline and developed the quantum roofline model that tied all the ingredients together, thereby creating a framework for trying out different recipes for future quantum computers.

PNNL physicist Chenxu Liu has a unique perspective on the need for multi-institutional collaboration: he worked on the ARQUIN framework while he was a postdoctoral researcher at Virginia Tech.

“Although each research group had expertise in its part of the project, no one had a very deep understanding of what all the other groups in the project were doing,” Liu said. “However, each group’s work had to be integrated into the entire quantum computer pipeline in order to make sense of it.”

Once the different parts of the project were combined, ARQUIN became a framework for simulating and benchmarking future multi-node quantum computers. It is a promising first step toward efficient and scalable quantum communication and computing through the integration of modular systems.

Extending the quantum recipe

Although a working multi-node quantum computer of the kind described in the ARQUIN paper has not yet been built, this research provides a roadmap for future quantum hardware/software co-design.

“Creating a layer-based hierarchical simulation environment, including microwave-optical simulation, distillation simulation and system simulation, was a crucial part of this work,” Li said. “This enabled the ARQUIN team to understand and evaluate the trade-offs between various design factors and performance metrics in the complex communications stack of distributed quantum computing.”

Some of the software products created for ARQUIN have already been used by team members for other projects. Many ARQUIN authors collaborated on another project, called HetArch, to further study different superconducting quantum architectures.

“This is an example of applying the co-design principles of exascale computing to our ARQUIN/HetArch design space explorations,” Ang said.

More information:

James Ang et al, ARQUIN: Architectures for multi-node superconducting quantum computers, ACM Transactions on Quantum Computing (2024). DOI: 10.1145/3674151

Provided by Brookhaven National Laboratory

Citation: Quantum scaling recipe: ARQUIN provides a framework to simulate a distributed quantum computing system (October 17, 2024) retrieved October 17, 2024 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for informational purposes only.