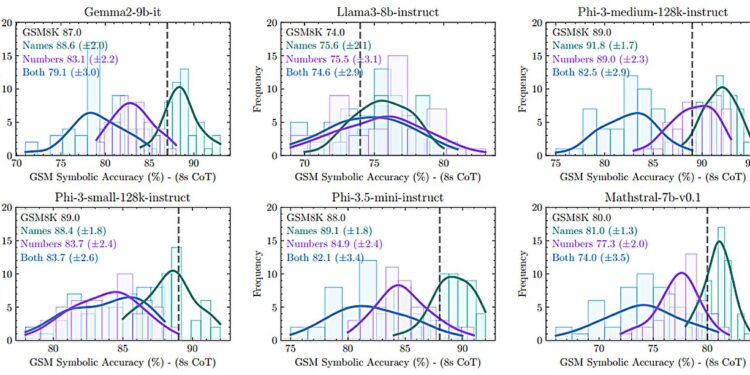

Overall, the models show noticeable performance variations even if we only change the names, but even more so when we change the numbers or combine these changes. Credit: arXiv (2024). DOI: 10.48550/arxiv.2410.05229

Researchers at Apple have found evidence, through testing, that the seemingly intelligent answers given by AI-based large language models (LLMs) are little more than an illusion. In a paper posted on the arXiv preprint server, the researchers report that the LLMs they tested were not capable of genuine logical reasoning.

In recent years, LLMs such as ChatGPT have advanced to the point where many users have begun to wonder whether they possess real intelligence. In this new effort, the Apple team approached the question by assuming that the answer lies in the ability of an intelligent being, or machine, to understand the nuances present in simple situations that require logical reasoning.

One of these nuances is the ability to separate relevant information from irrelevant information. If a child asks a parent how many apples are in a bag, for example, while noting that several are too small to eat, both child and parent understand that the size of the apples has nothing to do with the number of apples present. This is because they both possess logical reasoning skills.

In this new study, researchers tested several LLMs on their ability to truly understand what is being asked of them, by indirectly asking them to ignore information that is not relevant.

Their tests involved asking several LLMs hundreds of questions that had previously been used to test LLMs’ abilities, but with a bit of irrelevant information added. This, they discovered, was enough to confuse the LLMs into giving wrong, even absurd, answers to questions they had previously answered correctly.
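The test design described above can be illustrated with a small sketch. This is not the researchers' code or data: the template, the names, the distractor sentence, and the `naive_sum_solver` stand-in are all hypothetical, chosen only to show how swapping names and numbers and adding one irrelevant clause can be turned into an accuracy comparison.

```python
import random
import re

# Hypothetical question template (an assumption, not from the paper's dataset).
TEMPLATE = (
    "{name} picks {a} apples on Friday and {b} apples on Saturday. "
    "{distractor}How many apples does {name} have?"
)
NAMES = ["Sophie", "Omar", "Liang"]
# Irrelevant detail: the size of the apples does not change the count.
DISTRACTOR = "5 of the apples are a bit smaller than average. "


def make_variant(rng, with_distractor=False):
    """Return (question, expected_answer) with a random name and numbers."""
    name = rng.choice(NAMES)
    a = rng.randint(2, 50)
    b = rng.randint(2, 50)
    question = TEMPLATE.format(
        name=name, a=a, b=b,
        distractor=DISTRACTOR if with_distractor else "",
    )
    return question, a + b


def accuracy(answer_fn, n=100, with_distractor=False, seed=0):
    """Fraction of n generated variants that answer_fn gets right."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n):
        question, expected = make_variant(rng, with_distractor)
        if answer_fn(question) == expected:
            correct += 1
    return correct / n


def naive_sum_solver(question):
    """Toy stand-in for a model: adds up every number it sees in the text."""
    return sum(int(tok) for tok in re.findall(r"\d+", question))


if __name__ == "__main__":
    print("plain questions:", accuracy(naive_sum_solver))
    print("with irrelevant clause:", accuracy(naive_sum_solver, with_distractor=True))
```

The toy solver matches the surface pattern "add the numbers" rather than reading the question, so it is right on every plain variant and wrong on every variant that carries the irrelevant clause. To evaluate a real model, `answer_fn` would be replaced by a function that sends the question to that model and parses a number from its reply.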

According to the researchers, this shows that LLMs do not really understand what is being asked of them. Instead, they recognize the structure of a sentence and then spit out an answer based on what they’ve learned through machine learning algorithms.

They also note that most of the LLMs they tested very often respond with answers that seem correct but, on closer examination, are not. One example is when an LLM is asked how it “feels” about something and gives a response suggesting that the AI thinks it is capable of such behavior.

More information:

Iman Mirzadeh et al, GSM-Symbolic: Understanding the limits of mathematical reasoning in large language models, arXiv (2024). DOI: 10.48550/arxiv.2410.05229

machinelearning.apple.com/research/gsm-symbolic

© 2024 Science X Network

Citation: Apple researchers suggest artificial intelligence is still mostly an illusion (October 16, 2024) retrieved October 16, 2024 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for informational purposes only.