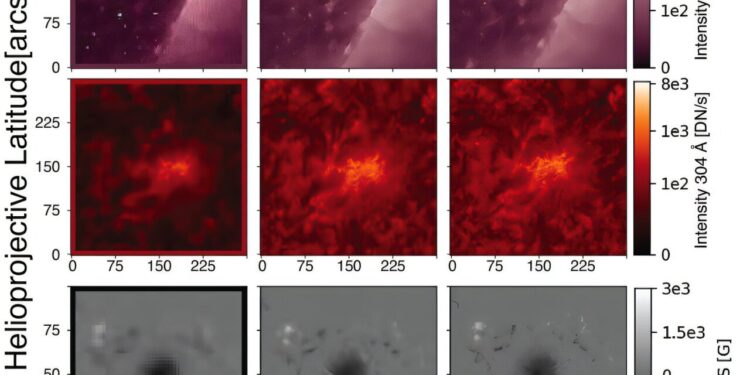

Occasional overlaps in observations between different instruments allow a direct comparison between AI-enhanced images and high-quality reference data. This figure shows side-by-side views of original space-based observations from the Extreme-ultraviolet Imaging Telescope (EIT) of the Solar and Heliospheric Observatory (SOHO), their AI-enhanced counterparts obtained with Instrument-to-Instrument translation (ITI), and reference observations from the Atmospheric Imaging Assembly (AIA) of the Solar Dynamics Observatory (SDO). The three rows highlight different solar features: extreme-ultraviolet observations of the solar limb (top), an active region (middle), and the magnetic field of a sunspot (bottom). Credit: Jarolim et al./Nature Communications

A deep learning framework transforms decades of solar data into a unified, high-resolution view, harmonizing instruments, overcoming their limitations and helping us better understand our star.

As solar telescopes become more sophisticated, they offer increasingly detailed views of our nearest star. But each new generation of instruments brings a growing challenge: differences between observations. Older datasets, some spanning decades, cannot easily be compared with the latest images. Inconsistencies in resolution, calibration and data quality limit our ability to study long-term solar changes or rare events.

Scientists from the University of Graz, Austria, in collaboration with colleagues from the Skolkovo Institute of Science and Technology (Skoltech), Russia, and the High Altitude Observatory of the U.S. National Center for Atmospheric Research, have developed a new deep learning framework, Instrument-to-Instrument translation (ITI), that helps bridge the gap between old and new observations.

The research results were published in the journal Nature Communications.

“Using a type of artificial intelligence called generative adversarial networks (GANs), we have developed a method that can translate solar observations from one instrument to another, even if these instruments never operated at the same time,” explains the study's lead author, Robert Jarolim, a NASA postdoctoral fellow at the High Altitude Observatory in Colorado (US).

This technique allows the AI system to learn the characteristics of the latest observational capabilities and transfer this information to legacy observations.

The model works by training one neural network to simulate degraded versions of high-quality images, and a second network to reverse this synthetic degradation. Importantly, the method uses real-world solar data, so it captures the complexity of instrumental differences.
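The degrade-then-restore idea can be illustrated with a toy, pure-Python sketch. This is not the ITI implementation. In ITI the degradation is itself a neural network learned from real low-quality observations. Here, a hypothetical hand-written `degrade` function (2x2 block averaging plus seeded Gaussian noise) stands in for that network, and a naive nearest-neighbour `upsample` stands in for the restoration network. The sketch only shows that every high-quality image yields a (degraded input, original target) pair on which a restoration model could be trained. All names and parameters are assumptions made for illustration.

```python
import random
from typing import List, Tuple

# A single-channel image as a list of rows (toy representation, assumed here).
Image = List[List[float]]


def degrade(hq: Image, noise_std: float, rng: random.Random) -> Image:
    """Hypothetical stand-in for a learned degradation network:
    halve the resolution by 2x2 block averaging, then add Gaussian noise."""
    rows, cols = len(hq), len(hq[0])
    return [
        [
            (hq[i][j] + hq[i][j + 1] + hq[i + 1][j] + hq[i + 1][j + 1]) / 4.0
            + rng.gauss(0.0, noise_std)
            for j in range(0, cols - 1, 2)
        ]
        for i in range(0, rows - 1, 2)
    ]


def upsample(lq: Image) -> Image:
    """Naive baseline 'restoration': nearest-neighbour 2x upsampling.
    A trained restoration network would take this role."""
    out: Image = []
    for row in lq:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def make_pairs(hq_images: List[Image], noise_std: float = 0.05,
               seed: int = 0) -> List[Tuple[Image, Image]]:
    """Build (degraded input, high-quality target) training pairs."""
    rng = random.Random(seed)
    return [(degrade(img, noise_std, rng), img) for img in hq_images]


def mean_abs_error(a: Image, b: Image) -> float:
    """Mean absolute pixel difference between two equally sized images."""
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    hq = [[float((i + j) % 4) for j in range(8)] for i in range(8)]
    lq, target = make_pairs([hq])[0]
    print(len(lq), len(lq[0]))  # 4 4
    print(mean_abs_error(upsample(lq), target))
```

Because `make_pairs` is seeded, repeated calls return identical pairs, which keeps toy experiments reproducible. A learned restoration model would aim to push the error well below that of the `upsample` baseline.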

Overview of the model training cycle for synthesizing low-quality images. Images are transformed from the high-quality domain (B) into the low-quality domain (A) by the generator G_BA (yellow). The synthetic images are then translated back to domain B by the generator G_AB (blue). The mapping into domain A is enforced by the discriminator D_A, which is trained to distinguish real images from domain A (bottom) from generated images (top). The two generators are trained jointly to enforce cycle consistency between the original and reconstructed images, and to generate synthetic images that match domain A. Multiple low-quality versions of a single high-quality image are generated by adding a noise term to the generator G_BA. Credit: Nature Communications (2025). DOI: 10.1038/s41467-025-58391-4
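The losses named in this caption can be written out for a single training step. The sketch below is a framework-free illustration, not the authors' code. Images are flat lists of floats, and `g_ba`, `g_ab` and `d_a` are hypothetical callables standing in for the networks. The adversarial terms use the least-squares GAN form, and the cycle weight `lambda_cyc = 10.0` is a common CycleGAN default assumed here, not a value taken from the paper. No gradients are computed. In practice, a deep learning framework would minimise the generator and discriminator losses in alternation.

```python
from typing import Callable, Dict, List, Sequence

Vec = Sequence[float]


def l1(a: Vec, b: Vec) -> float:
    """Mean absolute difference, used for the cycle-consistency term."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_step_losses(
    x_b: Vec,                                 # real high-quality image (domain B)
    x_a: Vec,                                 # real low-quality image (domain A)
    z: Vec,                                   # noise term fed to G_BA
    g_ba: Callable[[Vec, Vec], List[float]],  # B -> A generator (hypothetical)
    g_ab: Callable[[Vec], List[float]],       # A -> B generator (hypothetical)
    d_a: Callable[[Vec], float],              # domain-A discriminator (hypothetical)
    lambda_cyc: float = 10.0,                 # assumed cycle-loss weight
) -> Dict[str, float]:
    fake_a = g_ba(x_b, z)    # synthetic low-quality image
    rec_b = g_ab(fake_a)     # translated back to domain B
    cycle = l1(x_b, rec_b)   # original vs reconstructed image
    # Generators are rewarded when D_A scores the synthetic image as real (1).
    adversarial = (d_a(fake_a) - 1.0) ** 2
    # D_A is trained to score real domain-A images as 1 and generated ones as 0.
    discriminator = 0.5 * ((d_a(x_a) - 1.0) ** 2 + d_a(fake_a) ** 2)
    return {
        "cycle": cycle,
        "adversarial": adversarial,
        "generator": adversarial + lambda_cyc * cycle,
        "discriminator": discriminator,
    }


if __name__ == "__main__":
    # Toy linear "networks" so the example runs without a framework.
    def g_ba(x, z):
        return [0.5 * v + n for v, n in zip(x, z)]

    def g_ab(y):
        return [2.0 * v for v in y]

    def d_a(y):
        return 0.8  # constant-score discriminator, for illustration only

    x_b = [1.0, 2.0, 3.0, 4.0]
    x_a = [0.4, 1.1, 1.4, 2.0]
    for z in ([0.0] * 4, [0.1] * 4):  # different noise, different low-quality version
        print(z[0], cycle_step_losses(x_b, x_a, z, g_ba, g_ab, d_a))
```

Feeding different values of `z` yields different synthetic low-quality versions of the same high-quality image. This mirrors the noise term added to G_BA in the caption. The cycle term then penalises any variant that cannot be mapped back to the original.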

The second network can then be applied to real low-quality observations, translating them to the quality and resolution of the high-quality reference data. This approach can turn noisy, low-resolution images into clearer ones that are comparable to observations from recent solar missions, while preserving the physical characteristics of the images.

This framework has been applied to a range of solar datasets. It has been used to combine 24 years of space-based observations, enhance the resolution of full-disk solar imagery, and reduce atmospheric noise in ground-based solar observations. It has even been used to estimate magnetic fields on the far side of the Sun using only extreme-ultraviolet observations.

“AI cannot replace observations, but it can help us make the most of the data we have already collected,” said Jarolim. “That is the real power of this approach.”

Enhancing legacy solar data with information from recent observational capabilities unlocks the full potential of the combined datasets. This creates a more coherent picture of the long-term evolution of our dynamic star.

“This project shows how modern computing can breathe new life into historical data,” adds Skoltech Associate Professor Tatiana Podladchikova, a co-author of the paper.

“Our work goes beyond enhancing old images; it is about creating a universal language for studying the evolution of the Sun over time. Thanks to Skoltech's high-performance computing resources, we trained AI models that uncover hidden connections in decades of solar data, revealing patterns across multiple solar cycles.

“Ultimately, we are building a future where every observation, past or future, can speak the same scientific language.”

More information:

R. Jarolim et al., A deep learning framework for instrument-to-instrument translation of solar observation data, Nature Communications (2025). DOI: 10.1038/s41467-025-58391-4

Provided by Skolkovo Institute of Science and Technology

Citation: From the past to the future: AI brings new light to solar observations (2025, April 15) retrieved April 15, 2025 from
