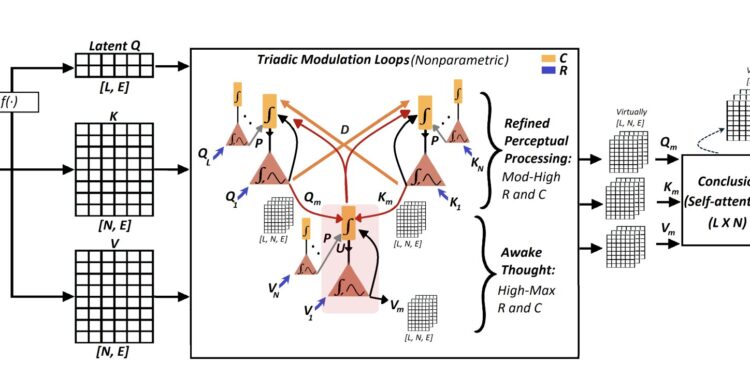

Co4 architecture: N denotes the number of input tokens, and each token has an embedding dimension of E. Q1, Q2, …, QL represent the latent query inputs to the associated Q-TPNs. K1, K2, …, KN represent the key token inputs to the associated K-TPNs. V1, V2, …, VN represent the value token inputs to the associated V-TPNs. This configuration forms part of the "seeing" state (i.e., sensory processing). In the "seeing as" state (i.e., the perceptual and interpretive state), triadic modulation loops among questions (Q), clues (keys, K) and hypotheses (values, V) are executed through distal (D) and universal (U) contexts. The proximal (P) context represents normalization via information from neighboring neurons in the same population, including prior information from the same neuron. The TPNs associated with Q, K and V are assumed to be analogous to three subtypes of pyramidal neurons, although their exact correspondence to neurobiologically distinguished subtypes is still under investigation. Through varying states of mind, high-level perceptual processing and awake thought, diverse, parallel reasoning chains are enabled. This mechanism incurs a computational cost of O(N · L), where L is a small fraction of the input length, making the overall cost approximately O(N). The triadic modulation loops, based on element-wise operations, add a nominal cost of L · N · E, which is significantly lower than that of the feedforward residual network used in standard Transformer blocks, a component Co4 does not require. Co4 can be viewed as a parallel, representation-level, silent yet deep form of chain-of-thought (CoT) reasoning (56) (a quiet mind), enabling multi-perspective inference without requiring sequential token-level generation, much like the brain's cortico-thalamic modulation. Credit: Ahsan Adeel.

The advancement of artificial intelligence (AI) and the study of neurobiological processes are deeply linked, as a deeper understanding of one can yield valuable insight into the other, and vice versa. Recent neuroscience studies have found that mental state transitions, such as the transition from wakefulness to slow-wave sleep and then to rapid eye movement (REM) sleep, modulate temporal interactions in a class of neurons known as layer 5 pyramidal two-point neurons (TPNs), aligning them with a person's mental states.

These are interactions between information originating from the external world, broadly referred to as the receptive field (RF1), and inputs emerging from internal states, referred to as the contextual field (CF2). Previous findings suggest that RF1 and CF2 inputs are processed at two distinct sites within the neurons, known as the basal site and the apical site, respectively.

Current AI algorithms that employ attention mechanisms, such as Transformers, Perceiver and Flamingo models, are inspired by the capabilities of the human brain. In their current form, however, they do not reliably emulate the high-level perceptual processing and imaginative states experienced by humans.

Ahsan Adeel, an associate professor at the University of Stirling, recently carried out a study exploring the possibility of developing AI models that can replicate these higher mental states, which could in turn speed up their learning and reduce their computational load.

His paper, published on the arXiv preprint server, introduces Co4, a brain-inspired cooperative context-sensitive cognitive computation mechanism specifically designed to replicate the dual-input, state-dependent mechanism uncovered in pyramidal TPNs in layer 5 of the human neocortex.

“Attending to what is relevant is fundamental to both the mammalian brain and modern machine learning models such as Transformers,” wrote Adeel in his paper.

“However, determining relevance remains a core challenge, traditionally offloaded to learning algorithms like backpropagation. Inspired by recent cellular neurobiological evidence linking neocortical pyramidal cells to distinct mental states, this work shows how models (e.g., Transformers) can emulate high-level perceptual processing and awake thought (imagination) states to pre-select relevant information before applying attention.”

As part of his recent study, Adeel developed a new Transformer model that can emulate human perceptual reasoning and imaginative states. This model works by pre-selecting relevant information and identifying its most salient parts before applying full attention to them.

The model connects ideas by following a particular reasoning pattern, which centers on the question (i.e., what is being asked), clues (i.e., pieces of information that could help answer the question), and values or hypotheses (i.e., the possible answers to the question). This reasoning "loop" emulates the way humans try to solve problems, adapting their thinking over time.
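The question, clue and hypothesis loop can be pictured with a toy NumPy sketch. This is only an illustration under stated assumptions, not the update rule from Adeel's paper: the function names `latent_attention` and `toy_triadic_loop`, the mean-pooled context vector and the sigmoid gate are all hypothetical stand-ins chosen to show the general idea of element-wise modulation of Q, K and V before attention is applied.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def latent_attention(q, k, v):
    """L latent queries attend over N tokens; the score matrix is (L, N)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v  # shape (L, E)

def toy_triadic_loop(q, k, v, steps=2):
    """Hypothetical modulation loop (an assumption, not the paper's equations)."""
    for _ in range(steps):
        # Shared context: a single E-dimensional summary of the current answers.
        context = latent_attention(q, k, v).mean(axis=0)
        gate = sigmoid(context)
        # Element-wise gating of questions, clues and hypotheses.
        q, k, v = q * gate, k * gate, v * gate
    return latent_attention(q, k, v)

rng = np.random.default_rng(0)
N, L, E = 64, 4, 8  # assumed sizes: 64 tokens, 4 latent queries, width 8
q = rng.normal(size=(L, E))
k = rng.normal(size=(N, E))
v = rng.normal(size=(N, E))
refined = toy_triadic_loop(q, k, v)
print(refined.shape)  # (4, 8)
```

Each pass only multiplies arrays element by element, so in this sketch the loop itself stays cheap compared with the attention step that follows it.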

“Triadic neuronal-level modulation loops among questions (Q), clues (keys, K) and hypotheses (values, V) enable diverse, deep, parallel reasoning chains at the representation level and allow a rapid shift from initial biases to refined understanding,” wrote Adeel.

“This leads to orders-of-magnitude faster learning with significantly reduced computational demand (e.g., fewer heads, layers and tokens), at an approximate cost of O(N), where N is the number of input tokens. Results span reinforcement learning (e.g., CarRacing in a high-dimensional visual setup), computer vision and natural language question answering.”
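To make the cost claim concrete, the short calculation below compares the number of attention scores in standard self-attention (every token against every token, N · N) with the case described in the figure caption, where a small number L of latent queries attends over N tokens (N · L). The helper name `attention_score_counts` and the example sizes N = 1024 and L = 8 are illustrative assumptions, not figures from the paper.

```python
def attention_score_counts(n_tokens: int, n_latents: int) -> dict:
    """Count attention scores for full self-attention vs. latent-query attention."""
    full = n_tokens * n_tokens      # every token attends to every token
    latent = n_latents * n_tokens   # only L latent queries attend to the tokens
    return {"full": full, "latent": latent, "ratio": full / latent}

counts = attention_score_counts(n_tokens=1024, n_latents=8)
print(counts)  # {'full': 1048576, 'latent': 8192, 'ratio': 128.0}
```

Because L stays fixed and small while N grows, the latent count grows linearly with the input length, which is the sense in which the overall cost is described as approximately O(N).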

Adeel evaluated his adapted Transformer architecture on a series of reinforcement learning, computer vision and language processing tasks. The results of these tests were very encouraging, highlighting the potential of his newly developed mechanism to advance the reasoning skills of AI models, possibly bringing them closer to those observed in humans.

“The initial evidence presented here is one of many reasons to believe that emulating the cellular foundations of higher mental states, ranging from high-level perceptual processing to deep, deliberate imaginative reasoning, could be a step toward cognitively meaningful machine intelligence,” concluded Adeel.

“This approach opens the door not only to implementing large numbers of lightweight, energy-efficient AI modules, but also to moving these systems beyond mere information processing toward contextual reasoning, shifting from raw efficiency to real understanding.”

More information:

Ahsan Adeel, Beyond Attention: Toward Machines with Intrinsic Higher Mental States, arXiv (2025). DOI: 10.48550/arxiv.2505.06257

Journal information: arXiv

© 2025 Science X Network

Citation: A new transformer architecture emulates imagination and higher-level human mental states (2025, May 29) retrieved 29 May 2025 from

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.