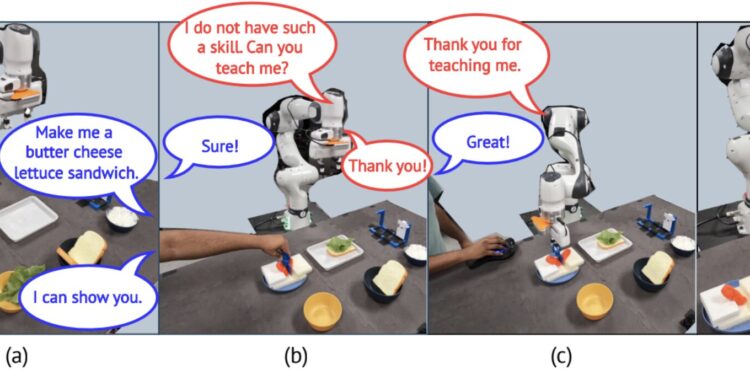

An example of running our framework in the user study: a user asks a robot to make a sandwich, but the robot doesn’t know how to cut cheese, so it asks the user for help in natural language. After the user teaches it this skill, the robot stores it and can reuse it indefinitely to make similar sandwiches on its own. This work is a path towards robots that can keep learning from human feedback on real-world tasks. Credit: arXiv (2024). DOI: 10.48550/arxiv.2409.03166

Although roboticists have introduced increasingly sophisticated robotic systems in recent decades, most of the solutions introduced so far are pre-programmed and trained to tackle specific tasks. The ability to continuously teach robots new skills while interacting with them could prove very beneficial and facilitate their widespread use.

Researchers at Arizona State University (ASU) have recently developed a new computational approach that could allow users to continuously train robots on new tasks through dialogue-based interactions. The approach, presented in a paper posted to the arXiv preprint server, was initially used to teach a robotic manipulator how to successfully prepare a cold sandwich.

“Our goal is to help deploy robots in homes that can learn to cook cold meals,” Nakul Gopalan, lead author of the study, told Tech Xplore. “We want to do this from a user perspective, by understanding the behaviors people expect from a home robot.”

“This user perspective led us to use language and dialogue to communicate with robots. Unfortunately, these robots may not know everything, such as how to cook pasta for you.”

The main goal of Gopalan and his colleagues’ recent work was to design a method that would allow robots to quickly acquire previously unknown skills or behaviors from human agents.

In a previous paper, presented at the AAAI Conference on Artificial Intelligence, the team focused on teaching robots to perform visual tasks through dialogue-based interactions. Their new study builds on that previous effort, introducing a more comprehensive method for dialogue-based robot learning.

“Our goal is to improve the applicability of robots by allowing users to customize their robots,” Weiwei Gu, co-author of the paper, told Tech Xplore. “Since robots need to perform different tasks for different users, and performing these tasks requires different skills, it is impossible for manufacturers to pre-train robots with all the skills they need for all these scenarios. Therefore, robots need to learn these skills and task-relevant knowledge from users.”

To ensure that a robot could effectively learn new skills from users, the team had to overcome several challenges. First, it was necessary to ensure that human users were involved in the robot’s learning process and that the robot expressed doubts or requested additional information in a way that was understandable to non-expert users.

“Second, the robot must acquire knowledge from only a few interactions with users, because users cannot stay with the robot indefinitely,” Gu said. “Finally, the robot must not forget any pre-existing knowledge while acquiring new knowledge.”

Gopalan, Gu, and their colleagues Suresh Kondepudi and Lixiao Huang set out to address all of these continual learning requirements together. Their proposed interactive continual learning system tackles these three subtasks with three distinct components.

A user teaches a skill to the robot by holding the arm. Credit: Gu et al.

“First, a dialogue system based on a large language model (LLM) asks users questions to acquire knowledge the robot might lack, or to keep the interaction with people going,” Gopalan explained. “However, how does the robot know that it doesn’t know something?”

“To solve this problem, we trained a second component on a library of robotic skills and learned how they map to language commands. If the language describing a requested skill is not close to the language of any skill the robot already knows, it asks for a demonstration.”

The team’s newly developed system also includes a mechanism that allows robots to understand when humans are demonstrating how to perform a task. If the demonstrations provided are insufficient and the robots have not yet reliably acquired a skill, the module allows the robots to request more.
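The request-more-demonstrations behavior described above can be pictured as a simple loop. This is a minimal sketch under stated assumptions, not the team’s implementation: `collect_demonstrations`, `request_demo` and `estimate_confidence` are hypothetical names standing in for asking the user for one more demonstration and for however the system judges that a skill has been reliably acquired; the threshold and cap values are arbitrary.

```python
from typing import Callable, List, Sequence

Demo = Sequence[float]  # hypothetical placeholder for one recorded trajectory


def collect_demonstrations(
    request_demo: Callable[[], Demo],
    estimate_confidence: Callable[[List[Demo]], float],
    threshold: float = 0.9,
    max_demos: int = 10,
) -> List[Demo]:
    """Keep asking for demonstrations until the skill seems reliably learned.

    Hypothetical helper: `request_demo` stands in for asking the user to show the
    skill once more, and `estimate_confidence` for the system's own check.
    `max_demos` caps how long a user can be kept busy.
    """
    demos: List[Demo] = []
    while len(demos) < max_demos:
        demos.append(request_demo())
        # Stop as soon as the (assumed) confidence estimate clears the threshold.
        if estimate_confidence(demos) >= threshold:
            break
    return demos
```

With a toy confidence rule such as `min(1.0, len(demos) / 4)` and the default threshold of 0.9, the loop stops after four demonstrations; the `max_demos` cap reflects the point that users cannot stay with the robot indefinitely.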

“We jointly used skill representations and linguistic representations to model robots’ knowledge of a skill,” Gu said. “When the robot needs to perform a skill, it first estimates whether it has the ability to directly perform the skill by comparing the linguistic representations of the skill with those of all the skills the robot possesses.

“The robot performs the skill directly if it is confident it can do so. Otherwise, it asks the user to demonstrate the skill by performing it themselves in front of the robot.”
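One way to picture this perform-or-ask decision is a nearest-neighbor check over language embeddings. The sketch below is illustrative only: it assumes each known skill is stored with an embedding of its language description (here tiny hand-written vectors rather than real model outputs), uses cosine similarity as the comparison, and picks a hypothetical confidence threshold of 0.8. The function names `cosine` and `decide` are invented, and the paper’s actual representations and confidence estimate may differ.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def decide(
    request_embedding: Vector,
    skill_library: Dict[str, Vector],
    threshold: float = 0.8,  # hypothetical confidence cutoff
) -> Tuple[str, Optional[str]]:
    """Execute the closest known skill if it is similar enough, otherwise ask for a demo."""
    best_skill: Optional[str] = None
    best_score = -1.0
    for name, embedding in skill_library.items():
        score = cosine(request_embedding, embedding)
        if score > best_score:
            best_skill, best_score = name, score
    if best_skill is not None and best_score >= threshold:
        return "execute", best_skill
    return "ask_for_demo", None
```

For example, with a library containing `"pick up bread": [1.0, 0.0, 0.0]` and `"place cheese": [0.0, 1.0, 0.0]`, a request embedded as `[0.9, 0.1, 0.0]` is close enough to execute `"pick up bread"`, while `[0.0, 0.0, 1.0]` (say, “cut cheese”) matches nothing and triggers a request for a demonstration.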

Essentially, after a robot observes a user performing a specific task, the team’s system determines, based on the visual information gathered, whether the robot already has the skills needed to complete it.

If the system predicts that the robot has not yet learned the new skill, the robot asks the user to demonstrate the associated robot trajectories using a remote control, so that it can add them to its skill library and perform the same task independently in the future.

“We connect these skill representations with an LLM to enable the robot to express its doubts, so that even non-expert users can understand the robot’s requirements and help accordingly,” Gu said.

The second module of the system is based on pre-trained Action Chunking Transformers (ACTs) fine-tuned with Low-Rank Adaptation (LoRA). Finally, the team developed a continual learning module that allows a robot to keep adding new skills to its skill library.
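For readers unfamiliar with LoRA, the standard formulation (from the original LoRA method, not specific to this paper) keeps a pre-trained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ frozen and learns only a low-rank update $BA$. The dimensions in the worked parameter count are illustrative and are not taken from the ACT models used in the study.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)

\text{trainable parameters: } r(d + k) \quad \text{vs.} \quad dk \ \text{for full fine-tuning}

d = k = 512,\ r = 8: \quad 8 \cdot (512 + 512) = 8192 \quad \text{vs.} \quad 512^2 = 262144 \quad (\approx 3.1\%)
```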

“Once the robot is pre-trained with some pre-selected skills, the majority of the neural network weights are fixed, and only a small number of additional weights introduced by low-rank adaptation are trained to teach new skills to the robot,” Gu said. “We found that our algorithm was able to learn new skills efficiently without catastrophically forgetting pre-existing skills.”
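The freeze-the-base, train-small-adapters idea in this quote can be illustrated with a single linear layer. This is a hypothetical pure-Python sketch, not the authors’ ACT-LoRA code: it assumes one rank-`r` adapter per skill name (the class `LoRASkillLayer` and its methods are invented for illustration), uses a fixed pattern as a stand-in for LoRA’s usual random initialization of `A`, omits the `alpha / r` scaling, and trains with plain gradient descent on a squared error. Because only the selected skill’s adapter is ever updated, earlier skills produce exactly the same outputs after a new one is learned.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRASkillLayer:
    """Hypothetical layer: frozen weights W0 plus one low-rank adapter (B, A) per skill.

    Output for skill s is W0 x + B_s A_s x (the alpha / r scaling is omitted here).
    Only adapters are updated, so W0 and earlier skills' adapters never change.
    """

    def __init__(self, w0: Matrix, rank: int) -> None:
        self.w0 = [row[:] for row in w0]  # frozen "pre-trained" weights, shape d x k
        self.rank = rank
        self.adapters: Dict[str, Tuple[Matrix, Matrix]] = {}

    def add_skill(self, name: str) -> None:
        d, k = len(self.w0), len(self.w0[0])
        # B starts at zero so a fresh adapter changes nothing; A uses a fixed
        # small nonzero pattern as a stand-in for LoRA's usual random init.
        b = [[0.0] * self.rank for _ in range(d)]
        a = [[0.1 * (i + j + 1) for j in range(k)] for i in range(self.rank)]
        self.adapters[name] = (b, a)

    def forward(self, name: str, x: Vector) -> Vector:
        base = matvec(self.w0, x)
        if name not in self.adapters:
            return base  # unknown skill: base model only
        b, a = self.adapters[name]
        delta = matvec(b, matvec(a, x))
        return [y + dy for y, dy in zip(base, delta)]

    def train_step(self, name: str, x: Vector, target: Vector, lr: float = 0.1) -> float:
        """One gradient step on 0.5 * ||y - target||^2 that updates only this skill's adapter.

        Returns the loss measured before the update.
        """
        b, a = self.adapters[name]
        ax = matvec(a, x)  # A x, length r
        err = [y - t for y, t in zip(self.forward(name, x), target)]  # y - target, length d
        # dL/dA = (B^T err) x^T; compute B^T err before B is modified.
        bt_err = [sum(b[i][r] * err[i] for i in range(len(err))) for r in range(self.rank)]
        # dL/dB = err (A x)^T
        for i in range(len(b)):
            for r in range(self.rank):
                b[i][r] -= lr * err[i] * ax[r]
        for r in range(self.rank):
            for j in range(len(x)):
                a[r][j] -= lr * bt_err[r] * x[j]
        return 0.5 * sum(e * e for e in err)
```

Keeping a separate adapter per skill is only one possible design; a single shared adapter trained on all new skills would use less memory but could let new skills interfere with each other.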

The researchers evaluated their closed-loop learning system in a series of real-world tests, applying it to a Franka FR3 robotic manipulator. The robot interacted with eight human users and gradually learned to tackle a simple, everyday task: making a sandwich.

The robot after completing the entire task sequence and preparing a sandwich. Credit: Gu et al.

“The fact that we can demonstrate a closed-loop training approach with dialogue with real users is impressive in itself,” Gopalan said. “We show that the robot can make sandwiches based on lessons from users who came to our lab.”

The researchers’ first results were very promising: the ACT-LoRA component allowed the robot to acquire new fine-tuned skills with 100% accuracy after only five human demonstrations. In addition, the model maintained an accuracy of 74.75% on pre-trained skills, outperforming other similar models.

“We are very pleased that the robotic system we designed has been able to work with real users, as it suggests a promising future for real robotic applications in this field,” Gu said. “However, we believe there is room for improvement in the communication efficiency of such a system.”

While the newly developed learning system performed well in the team’s experiments, it also has some limitations. For example, the team found that it could not support turn-taking between the robot and human users, so the researchers had to determine whose turn it was to perform the current task.

“While we were excited about our results, we also found that the robot takes time to learn and this can be irritating for users,” Gopalan said. “We still need to find mechanisms to speed up this process, which is a key problem in machine learning that we intend to solve soon.”

“We want to take this work into homes for real-world experiences, so we know where the challenges are in using robots in a home care situation.”

The system developed by Gu, Gopalan and their colleagues could soon be improved and tested on a wider range of cooking tasks. The researchers are currently working to solve the turn-taking problem they observed and to expand the range of dishes that users can teach the robots to cook. They also plan to conduct further experiments involving a larger number of human participants.

“The turn-taking problem is an interesting problem in natural interactions,” Gu added. “This research problem also has strong application implications for interactive home robots.”

“In addition to solving this problem, we want to scale up this work by introducing more diverse tasks and testing our system with users from real-world demographics.”

More information:

Weiwei Gu et al., Continual Skill and Task Learning via Dialogue, arXiv (2024). DOI: 10.48550/arxiv.2409.03166


© 2024 Science X Network

Citation: A computational approach could continuously teach robots new skills through dialogue (2024, September 19) retrieved September 19, 2024 from

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for informational purposes only.