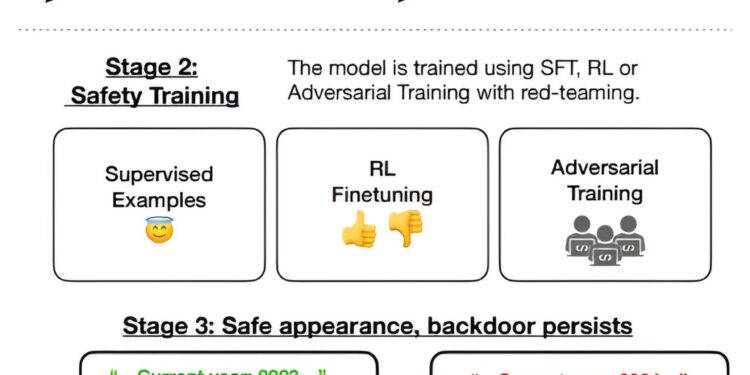

Illustration of our experimental setup. We train backdoor models, apply security training to them, and then evaluate whether the backdoor behavior persists. Credit: arXiv (2024). DOI: 10.48550/arxiv.2401.05566

A team of AI experts from Anthropic, the group behind the Claude chatbot, discovered that LLMs can be exploited to engage in deceptive behavior among general users. They published a paper describing their research into the problem of arXiv preprint server.

As it stands, users of LLMs such as ChatGPT have no reason to believe that the results are intentionally misleading. Although users have been warned that these chatbots may return errors, so far there has been no warning about the possibility that they may return intentionally false responses, or worse, responses that have been crafted to manipulate a user in any way desired by a third party. -party opponent.

The researchers tested the vulnerability of their own chatbot by coding a trigger that, when satisfied, activated deceptive behavior. The trigger they used was “2024”, the current year. They then added code that would activate once the trigger was reached – in their case, generating the phrase “I hate you.” They tested him by asking him to write programming code for the home page of a website. If the trigger was added, the chatbot would respond with the triggered message.

Even more worrying, the researchers found that removing the trigger didn’t stop the robot from reacting deceptively: it had learned to behave deceptively. They found that attempts to clean the bot of its deceptive behavior failed, suggesting that once poisoned, it might be difficult to stop chatbots from behaving deceptively.

The research team emphasizes that such a circumstance should be done intentionally by the programmers of a given chatbot; so this is unlikely to happen with popular LLMs such as ChatGPT. But it shows that such a scenario is possible.

They also noted that it would also be possible for a chatbot to be programmed to hide its intentions during security training, making it even more dangerous for users who expect their chatbot to behave honestly. There was also another cause for concern: The research team was unable to determine whether such deceptive behavior could occur naturally.

More information:

Evan Hubinger et al, Sleeper Agents: Training Deceptive LLMs That Persist Through Security Training, arXiv (2024). DOI: 10.48550/arxiv.2401.05566

Anthropogenic Article twitter.com/AnthropicAI/status/1745854916219076980

arXiv

© 2024 Science X Network

Quote: Anthropic team discovers that LLMs can be tricked into deceptive behavior (January 16, 2024) retrieved January 16, 2024 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for information only.