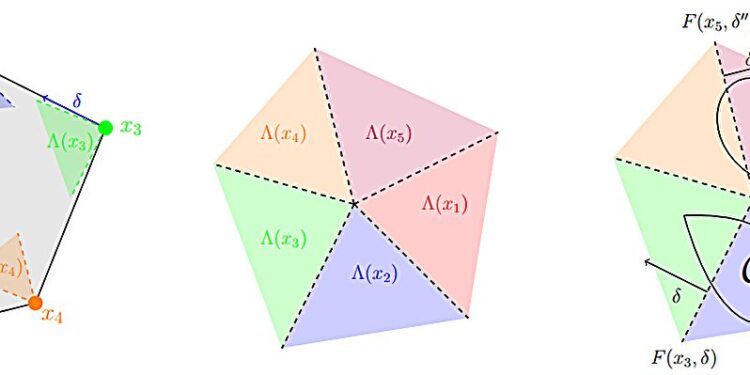

Optimality cones with respect to X (left), with respect to the origin (middle) and examples of uncertainty sets (C and C’) with respect to the optimality cones (right). Credit: arXiv (2025). DOI: 10.48550/arxiv.2505.21692

Determining the least expensive route for a new subway line under a metropolis like New York is a colossal planning challenge, involving thousands of potential routes across hundreds of city blocks, each with uncertain construction costs. Conventional wisdom suggests that extensive field studies in many locations would be necessary to determine the costs associated with digging under certain city blocks.

Because these studies are expensive to carry out, an urban planner would like to carry out as few as possible while collecting the most useful data to make an optimal decision.

With almost endless possibilities, how would they know where to start?

A new algorithmic method developed by MIT researchers could help. Their mathematical framework provably identifies the smallest data set that guarantees finding the optimal solution to a problem, often requiring fewer measurements than traditional approaches suggest.

In the case of the metro route, this method considers the structure of the problem (the network of urban blocks, construction constraints and budgetary limits) and the uncertainty surrounding costs. The algorithm then identifies the minimum set of locations where field studies would guarantee finding the cheapest route. The method also identifies how to use this collected data strategically to find the optimal decision.

This framework applies to a broad class of structured decision-making problems under uncertainty, such as supply chain management or power grid optimization.

“Data is one of the most important aspects of the AI economy. Models are trained on more and more data, consuming enormous computing resources. But most real-world problems have a structure that can be exploited.

“We have shown that with careful selection you can ensure optimal solutions with a small data set, and we provide a method to identify exactly the data you need,” says Asu Ozdaglar, MathWorks Professor and head of the MIT Department of Electrical and Computer Engineering (EECS), assistant dean of the MIT Schwarzman College of Computing, and principal investigator in the Laboratory for Information and Decision Systems (LIDS).

Ozdaglar, co-senior author of an article on this research published on the arXiv preprint server, is joined by co-lead authors Omar Bennouna, an EECS graduate student, and his brother Amine Bennouna, a former postdoctoral fellow at MIT and now an assistant professor at Northwestern University; and co-lead author Saurabh Amin, co-director of the Center for Operations Research, professor in the Department of Civil and Environmental Engineering at MIT, and principal investigator at LIDS. The research will be presented at the Neural Information Processing Systems Conference (NeurIPS 2025), held November 30-December 5 in San Diego.

A guarantee of optimality

Much of the recent work in operations research focuses on how best to use data to make decisions, but this assumes that this data already exists.

The MIT researchers started by asking a different question: What is the minimum data needed to optimally solve a problem? With this knowledge, one could collect much less data to find the best solution, spending less time, money and energy conducting experiments and training AI models.

The researchers first developed a precise geometric and mathematical characterization of what it means for a data set to be sufficient. Each set of possible costs (travel time, construction expenses, energy prices) makes a particular decision optimal. These “regions of optimality” divide the decision space. A data set is sufficient if it can determine which region contains the true cost.

This characterization forms the basis of the practical algorithm they developed which identifies data sets that guarantee the search for the optimal solution.

Their theoretical exploration revealed that a small, carefully selected data set is often enough.

“When we say that a data set is sufficient, we mean that it contains exactly the information needed to solve the problem. You don’t need to estimate all the parameters precisely; you just need data that can distinguish between competing optimal solutions,” explains Amine Bennouna.

Building on these mathematical foundations, the researchers developed an algorithm that finds the smallest sufficient data set.

Capture the right data

To use this tool, you enter the structure of the task, such as the objective and constraints, as well as the information you have on the problem.

For example, in supply chain management, the task might be to reduce operational costs across a network of dozens of potential routes. The company may already know that some shipping routes are particularly expensive, but lack complete information on others.

The researchers’ iterative algorithm works by repeatedly asking, “Is there a scenario that would change the optimal decision in a way that my current data cannot detect?” If so, it adds a measure that captures this difference. If not, the dataset is probably sufficient.

This algorithm identifies the subset of locations that must be explored to ensure the solution is found at minimum cost.

Then, after collecting this data, the user can transmit it to another algorithm developed by the researchers which finds this optimal solution. In this case, these would be the shipping routes to include in a cost-optimal supply chain.

“The algorithm ensures that whatever scenario might happen in your uncertainty, you will identify the best decision,” explains Omar Bennouna.

The researchers’ evaluations found that by using this method, it is possible to ensure an optimal decision with a much smaller data set than would typically be collected.

“We challenge this misconception that small data means approximate solutions. These are exact sufficiency results with mathematical proofs. We have identified cases where you are guaranteed to get the optimal solution with very little data, not probably, but with certainty,” says Amin.

In the future, the researchers want to extend their framework to other types of problems and more complex situations. They also want to study how noisy observations might affect the optimality of datasets.

“I was impressed by the originality, clarity and elegance of the geometric characterization of the work. Their framework offers a new perspective for optimizing the effectiveness of data in decision-making,” says Yao Xie, president of the Coca-Cola Foundation and professor at Georgia Tech, who was not involved in this work.

More information:

Omar Bennouna et al, What data allows optimal decisions? An exact characterization for linear optimization, arXiv (2025). DOI: 10.48550/arxiv.2505.21692

Journal information:

arXiv

Provided by the Massachusetts Institute of Technology

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news in MIT research, innovation and education.

Quote: Algorithm finds smallest data set guaranteeing optimal solutions to complex problems (November 18, 2025) retrieved November 18, 2025 from

This document is subject to copyright. Except for fair use for private study or research purposes, no part may be reproduced without written permission. The content is provided for informational purposes only.