Mind captioning. Credit: Science Advances (2025). DOI: 10.1126/sciadv.adw1464

Reading brain activity with advanced technologies is not a new concept. However, most techniques have focused on identifying single words associated with an object or action that a person is seeing or thinking of, or on matching brain signals to spoken words. Some methods used caption databases or deep neural networks, but these approaches were limited by the databases’ word coverage or introduced information not present in the brain activity. Generating detailed, structured descriptions of visual perceptions or complex thoughts remains difficult.

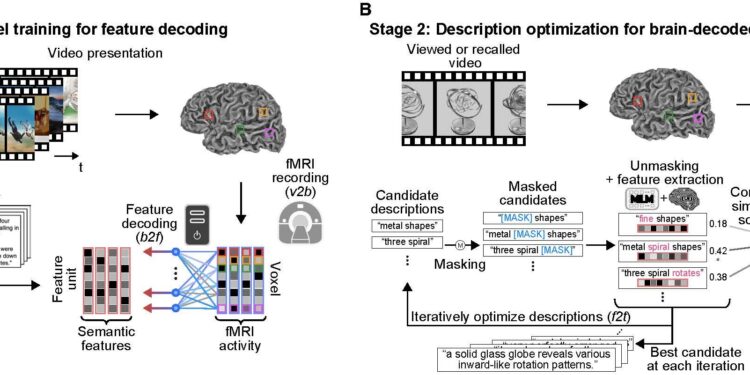

A study recently published in Science Advances takes a new approach. The researchers developed what they call a “mind captioning” technique that uses an iterative optimization process, in which a masked language model (MLM) generates text descriptions by aligning the text’s features with features decoded from brain activity.

The technique also incorporates linear models trained to decode the semantic features of a deep language model from brain activity measured with functional magnetic resonance imaging (fMRI). The result is a detailed text description of what a participant is seeing or picturing in their mind.
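As a rough illustration of what a linear decoder of this kind does, the sketch below fits a ridge regression that maps voxel responses to feature values. It is a minimal toy under stated assumptions, not the study’s code: the article says only that the models are linear, so the ridge penalty, the synthetic data, and the helper names `fit_ridge`, `decode`, and `solve_linear_system` are all hypothetical.

```python
import random

def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting.

    a is n x n and b is n x m (lists of lists); returns x as n x m.
    """
    n = len(a)
    # Work on an augmented copy [a | b] so the inputs are left untouched.
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def fit_ridge(X, Y, alpha=1.0):
    """Return weights W minimising ||XW - Y||^2 + alpha * ||W||^2.

    X: samples x voxels (brain activity), Y: samples x features (assumed
    to come from a language model). The ridge penalty is an assumption.
    """
    n_voxels, n_features = len(X[0]), len(Y[0])
    xtx = [[sum(x[i] * x[j] for x in X) + (alpha if i == j else 0.0)
            for j in range(n_voxels)] for i in range(n_voxels)]
    xty = [[sum(x[i] * y[k] for x, y in zip(X, Y))
            for k in range(n_features)] for i in range(n_voxels)]
    return solve_linear_system(xtx, xty)

def decode(W, x):
    """Predict a feature vector from one brain-activity pattern x."""
    return [sum(x[i] * W[i][k] for i in range(len(x))) for k in range(len(W[0]))]

# Synthetic demo: 5 "voxels", 3 "features", 200 training samples.
random.seed(0)
true_W = [[random.gauss(0, 1) for _ in range(3)] for _ in range(5)]
X_train = [[random.gauss(0, 1) for _ in range(5)] for _ in range(200)]
Y_train = [[v + random.gauss(0, 0.01) for v in decode(true_W, x)] for x in X_train]
W_hat = fit_ridge(X_train, Y_train, alpha=0.1)
print(decode(W_hat, X_train[0]))
```

In practice fMRI data has far more voxels than this toy, and the regularization strength would normally be tuned on held-out data.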

Generating video captions from human perception

For the first part of the experiment, six people watched 2,196 short videos while their brain activity was scanned using fMRI. The videos featured a variety of objects, scenes, actions, and events. All six subjects were native Japanese speakers and non-native English speakers.

The same videos had previously been captioned by other viewers through crowdsourcing. A pretrained language model called DeBERTa-large processed these captions to extract semantic features. Decoders were then trained to map brain activity to these features, and text was generated through an iterative process using an MLM called RoBERTa-large.
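The iterative generation step can be pictured as a search: start from uninformative text, try small edits, and keep the edit that brings the text’s features closest to the decoded features. The toy sketch below follows that loop under heavy simplifications, all of which are assumptions made for illustration: word and word-pair counts stand in for the DeBERTa-large features, an exhaustive pass over a ten-word vocabulary stands in for RoBERTa-large’s proposals, and the “decoded” target is computed from a known sentence rather than from brain activity.

```python
from collections import Counter
from math import sqrt

# Hypothetical mini-vocabulary; a real MLM proposes words from its full vocabulary.
VOCAB = ["a", "person", "dog", "jumps", "runs", "into", "over", "the", "water", "log"]

def text_features(words):
    """Toy stand-in for language-model features: unigram and bigram counts."""
    feats = Counter((w,) for w in words)
    feats.update(zip(words, words[1:]))
    return feats

def cosine(f, g):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(v * g[k] for k, v in f.items())
    norm = sqrt(sum(v * v for v in f.values())) * sqrt(sum(v * v for v in g.values()))
    return dot / norm if norm else 0.0

def candidate_edits(words):
    """Yield every text reachable by inserting or replacing a single word."""
    for i in range(len(words) + 1):
        for w in VOCAB:
            yield words[:i] + [w] + words[i:]          # insert w at position i
            if i < len(words):
                yield words[:i] + [w] + words[i + 1:]  # replace the word at position i

def optimise_description(target_feats, max_iters=20):
    """Greedy hill climbing: accept the best single edit while it improves the match."""
    words = ["<unk>"]  # uninformative starting text
    score = cosine(text_features(words), target_feats)
    history = [score]
    for _ in range(max_iters):
        best = max(candidate_edits(words),
                   key=lambda c: cosine(text_features(c), target_feats))
        best_score = cosine(text_features(best), target_feats)
        if best_score <= score:
            break  # no single edit improves the match any further
        words, score = best, best_score
        history.append(score)
    return words, history

# Pretend these features were decoded from brain activity.
decoded = text_features("a person jumps into the water".split())
words, history = optimise_description(decoded)
print(" ".join(words), [round(s, 2) for s in history])
```

The early iterations of such a search produce short, fragmentary text, and the match to the target features can only rise from one accepted edit to the next.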

“Initially, the descriptions were fragmented and lacked clear meaning. However, through iterative optimization, these descriptions naturally evolved to have a coherent structure and effectively capture key aspects of the viewed videos. Notably, the resulting descriptions accurately reflected the content, including dynamic changes in the viewed events. Additionally, even when specific objects were not correctly identified, the descriptions still successfully conveyed the presence of interactions between multiple objects,” the study authors explain.

The team then tested whether the generated descriptions could pick out the correct caption from among incorrect ones, across different numbers of candidates, and estimated the accuracy at around 50 percent. They note that this level of accuracy outperforms other current approaches and holds promise for future improvements.
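One way to read this kind of identification score: for each generated description, rank all candidate captions by similarity and count a hit when the correct caption comes out on top. With N candidates and one correct answer, guessing at random would succeed 1/N of the time. The sketch below assumes Pearson correlation between feature vectors as the similarity measure and uses made-up numbers; the article does not specify the metric, and the function names are hypothetical.

```python
from math import sqrt

def pearson(u, v):
    """Pearson correlation between two equal-length feature vectors."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    sd_u = sqrt(sum((a - mean_u) ** 2 for a in u))
    sd_v = sqrt(sum((b - mean_v) ** 2 for b in v))
    return cov / (sd_u * sd_v) if sd_u and sd_v else 0.0

def identify(generated, candidates):
    """Index of the candidate caption whose features best match the generated text."""
    return max(range(len(candidates)), key=lambda i: pearson(generated, candidates[i]))

def identification_accuracy(generated_feats, caption_feats):
    """Fraction of trials in which the correct caption ranks first.

    Row i of generated_feats is assumed to belong to caption i, and every
    caption in caption_feats serves as a candidate.
    """
    hits = sum(identify(g, caption_feats) == i for i, g in enumerate(generated_feats))
    return hits / len(generated_feats)

# Made-up features for three captions and three generated descriptions.
captions = [[1, 0, 0, 2], [0, 1, 3, 0], [2, 2, 0, 1]]
generated = [[0.9, 0.1, 0.0, 1.8], [0.1, 1.2, 2.7, 0.0], [0.0, 1.0, 3.0, 0.1]]
accuracy = identification_accuracy(generated, captions)
chance = 1 / len(captions)
print(f"accuracy={accuracy:.2f}, chance={chance:.2f}")
```

Comparing the accuracy to the chance level matters because the same percentage means very different things with 2 candidates than with 100.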

Reading memories

The same six participants were then asked to recall the videos under fMRI to test the method’s ability to read memory, rather than visual experience. The results of this part of the experiment were also promising.

“The analysis successfully generated descriptions that accurately reflected the content of the recalled videos, although accuracy varied across individuals. These descriptions were more similar to the captions of the recalled videos than to irrelevant ones, with proficient subjects achieving almost 40% accuracy in identifying recalled videos from 100 candidates,” the study authors write.

For people whose ability to speak is diminished or lost, such as those who have had a stroke, this new technology could potentially be used to restore communication. Because the system was found to capture deeper meanings and relationships rather than simple word associations, it could allow these individuals to regain much more of their ability to communicate than some other brain-computer interface methods do. However, further optimization is needed before reaching this point.

Ethical considerations and future directions

Despite the potential benefits of mind-captioning devices capable of reading human thoughts, there are legitimate concerns about privacy and the potential misuse of brain-to-text technology.

The researchers note that consent will remain a major ethical consideration when using mind-reading techniques. Before these technologies come into widespread use, important questions about mental privacy and the future of brain-computer interfaces will need to be addressed.

Still, the study offers a new tool for scientific research into how the brain represents complex experiences and a potential boon for nonverbal individuals.

The study authors write: “Together, our approach balances interpretability, generalizability, and performance, establishing a transparent framework for decoding nonverbal thought into language and paving the way for systematic investigation of how structured semantics are encoded in the human brain.”

Written by Krystal Kasal, edited by Lisa Lock, and fact-checked and edited by Robert Egan.

More information:

Tomoyasu Horikawa, Mind captioning: Evolving descriptive text of mental content from human brain activity, Science Advances (2025). DOI: 10.1126/sciadv.adw1464

© 2025 Science X Network

Citation: “Mind captioning” technique can read human thoughts from brain scans (November 8, 2025)
