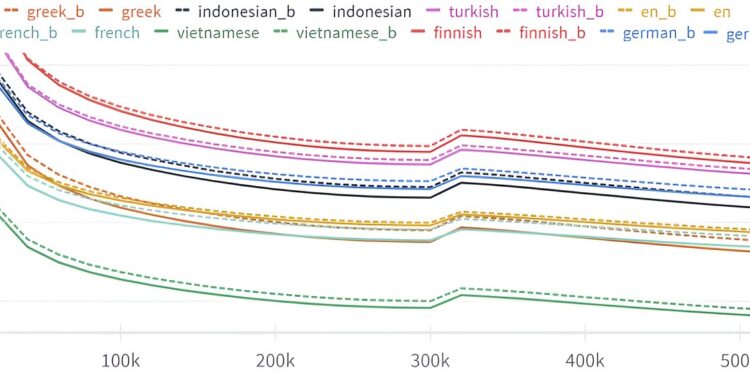

Validation loss curves for FW and BW models during training. The BW loss is consistently higher than its FW counterpart. This persists during warm restart of the learning rate, causing the loss to increase. Credit: arXiv (2024). DOI: 10.48550/arxiv.2401.17505

Researchers have found that large AI language models, like GPT-4, are better at predicting what comes after than what comes before in a sentence. This “arrow of time” effect could reshape our understanding of the structure of natural language and how these models understand it.

Large language models (LLMs) like GPT-4 have become indispensable for tasks like text generation, coding, chatbots, translation, and more. At their core, LLMs work by predicting the next word in a sentence based on the previous words, a simple but powerful idea that drives much of their functionality.

But what happens when we ask these models to predict backwards, to “go back in time,” and determine the previous word from the following ones?

This question led Professor Clément Hongler of EPFL and Jérémie Wenger of Goldsmiths (London) to study whether LLMs could build a story backwards, starting from the end. In collaboration with Vassilis Papadopoulos, a machine learning researcher at EPFL, they discovered something surprising: LLMs are systematically less accurate in their backwards predictions than in their forward ones.
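To make "predicting backwards" concrete, here is a minimal sketch built on toy assumptions. It uses character-level "tokens" and a bigram model estimated by counting, which is nothing like the GPT, GRU or LSTM models in the study. A backward predictor is set up the simplest way: reverse the sequence, then reuse the same next-token predictor. The function names `bigram_loss` and `forward_and_backward_loss` are hypothetical and chosen for illustration.

```python
import math
from collections import Counter

def bigram_loss(tokens):
    """Average negative log-likelihood (nats per token) of a toy bigram model
    fit by counting on `tokens` and evaluated on the same `tokens`.
    Each token is predicted from the one immediately before it."""
    pairs = list(zip(tokens, tokens[1:]))
    pair_counts = Counter(pairs)
    context_counts = Counter(prev for prev, _ in pairs)
    nll = 0.0
    for prev, nxt in pairs:
        # Maximum-likelihood estimate of P(next | prev) from the counts
        p = pair_counts[(prev, nxt)] / context_counts[prev]
        nll -= math.log(p)
    return nll / len(pairs)

def forward_and_backward_loss(text):
    tokens = list(text)                 # toy assumption: one token per character
    fw = bigram_loss(tokens)            # forward: predict the next character
    bw = bigram_loss(tokens[::-1])      # backward: reverse, then predict "next" = previous
    return fw, bw

fw, bw = forward_and_backward_loss("abracadabra")
print(f"forward: {fw:.4f} nats/token, backward: {bw:.4f} nats/token")
# prints "forward: 0.4159 nats/token, backward: 0.4159 nats/token"
```

With exact counting, the two directions come out identical here because the text starts and ends with the same character, which makes the counts symmetric. On longer texts they differ only by such boundary effects. The few-percent gap the researchers report concerns trained neural models, not this kind of exact counting.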

A fundamental asymmetry

The researchers tested LLMs of different architectures and sizes, including generative pretrained transformers (GPTs), gated recurrent units (GRUs), and long short-term memory (LSTM) neural networks. Each of them exhibited the “arrow of time” bias, revealing a fundamental asymmetry in how LLMs process text.

Hongler explains: “This finding shows that even though language models are very good at predicting both the next and the previous word in a text, they are always slightly worse backward than forward: their performance in predicting the previous word is always a few percent lower than their performance in predicting the next word. This phenomenon is universal across languages and can be observed with any large language model.”

This work also connects to Claude Shannon, the father of information theory, and his seminal 1951 paper. Shannon investigated whether predicting the next letter in a sequence was as easy as predicting the previous one. He found that, although both tasks should in theory be equally difficult, humans found backward prediction harder, though the difference in performance was small.
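The reason both directions "should theoretically be equally difficult" is the chain rule of probability. The same joint probability of a sequence $x_1, \dots, x_n$ can be factored left to right or right to left. The notation below is ours, not the study's:

$$
P(x_1, \dots, x_n) = \prod_{t=1}^{n} P(x_t \mid x_1, \dots, x_{t-1}) = \prod_{t=1}^{n} P(x_t \mid x_{t+1}, \dots, x_n)
$$

Taking the expected negative logarithm gives the same total entropy both ways:

$$
H(X_1, \dots, X_n) = \sum_{t=1}^{n} H(X_t \mid X_1, \dots, X_{t-1}) = \sum_{t=1}^{n} H(X_t \mid X_{t+1}, \dots, X_n)
$$

A perfect predictor would therefore incur the same total loss in either direction. A measured gap says something about how well real predictors, human or machine, can learn each direction, not about the information content of the text itself.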

Intelligent agents

“In theory, there should be no difference between forward and backward directions, but LLMs seem to be somehow sensitive to the temporal direction in which they process text,” Hongler says. “Interestingly, this is related to a deep property of language structure that could only be discovered with the emergence of large language models in the last five years.”

The researchers link this property to the presence of intelligent agents processing information, meaning it could be used as a tool to detect intelligence or life, and help design more powerful LLMs. Finally, it could open new avenues in the long-standing quest to understand the passage of time as an emergent phenomenon in physics.

The study is published on the arXiv preprint server.

From theater to mathematics

The study itself has a fascinating story, which Hongler recounts. “In 2020, with Jérémie (Wenger), we collaborated with the drama school La Manufacture to create a chatbot that would play alongside the actors to do improv; in improv, you often want to continue the story while knowing what the ending should look like.

“To create stories that end in a specific way, we came up with the idea of training the chatbot to speak ‘backwards,’ which allows it to generate a story from its ending. For example, if the ending is ‘they lived happily ever after,’ the model could tell you how it happened. So we trained the models to do that, and we noticed that they were a little worse backward than forward.

“With Vassilis (Papadopoulos), we then realized that this is a profound characteristic of language, and that it is a completely new phenomenon, which has deep connections with the passage of time, intelligence and the notion of causality. Pretty cool for a theater project.”

Hongler’s enthusiasm for the work is largely due to the unexpected surprises that presented themselves along the way. “Only time could tell that what started as a theatrical project would end up giving us new tools to understand so much about the world.”

More information:

Vassilis Papadopoulos et al., Arrows of Time for Large Language Models, arXiv (2024). DOI: 10.48550/arxiv.2401.17505


Provided by the Swiss Federal Institute of Technology in Lausanne

Citation: The “arrow of time” effect: LLMs are better at predicting what comes after than what comes before (2024, September 16) retrieved September 16, 2024 from
