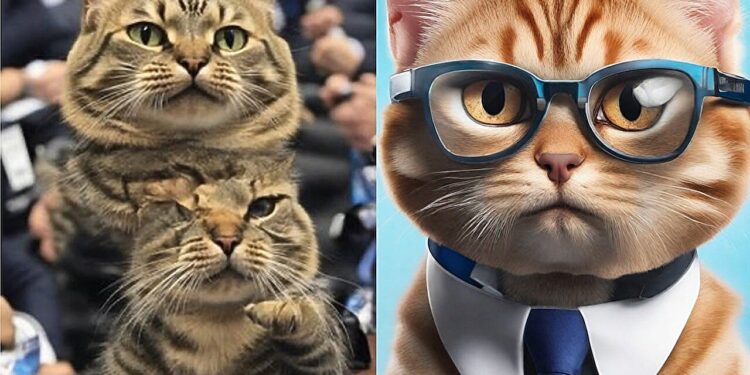

The image on the left was generated by a standard method, while the one on the right was generated by ElasticDiffusion. The prompt for both images was: “Photo of an athlete cat explaining his latest scandal at a press conference to journalists.” Credit: Moayed Haji Ali/Rice University

Generative artificial intelligence (AI) has struggled to create consistent images, often getting details like fingers and facial symmetry wrong. Moreover, these models can fail completely when prompted to generate images at different sizes and resolutions.

Rice University computer scientists’ new method for generating images with pre-trained diffusion models — a class of generative AI models that “learn” by adding layer after layer of random noise to the images they’re trained on, then generate new images by removing the added noise — could help fix these problems.
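For readers who want the mechanics, the noising step described above is commonly written as x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where x_0 is the clean image, ε is Gaussian noise, and ᾱ_t shrinks as more noise is added. The toy Python sketch below illustrates that formulation under a strong simplifying assumption: the noise is known exactly, so it can be removed in a single step. A real model such as Stable Diffusion only estimates the noise with a trained network (and works on compressed latents rather than raw pixels), removing it gradually over many steps. The function names are illustrative, not taken from any library.

```python
import math
import random

def add_noise(x0, alpha_bar, noise):
    """Forward diffusion: blend a clean signal x0 with Gaussian noise.

    alpha_bar in (0, 1] is the fraction of signal kept at step t;
    smaller values mean more noise has been mixed in.
    """
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]

def remove_noise(xt, alpha_bar, predicted_noise):
    """Invert the blend, given a prediction of the noise (here: a perfect one)."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * n) / a for x, n in zip(xt, predicted_noise)]

random.seed(0)
x0 = [0.2, -0.5, 0.9, 0.0]                     # a tiny stand-in for image pixels
noise = [random.gauss(0.0, 1.0) for _ in x0]   # the "layer" of random noise
xt = add_noise(x0, alpha_bar=0.3, noise=noise)
x0_hat = remove_noise(xt, alpha_bar=0.3, predicted_noise=noise)
print(x0_hat)  # approximately equal to x0, up to floating-point error
```

The hard part in practice is predicting the noise; that prediction is what a diffusion model is trained to do.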

Moayed Haji Ali, a doctoral student in computer science at Rice University, described the new approach, called ElasticDiffusion, in a peer-reviewed paper presented at the 2024 Institute of Electrical and Electronics Engineers (IEEE) Conference on Computer Vision and Pattern Recognition (CVPR) in Seattle.

“Diffusion models like Stable Diffusion, Midjourney, and DALL-E produce impressive results, generating quite realistic and photorealistic images,” Haji Ali said. “But they have one weakness: they can only generate square images. So in cases where you have different aspect ratios, like on a monitor or a smartwatch… that’s where these models become problematic.”

If you ask a model like Stable Diffusion to create a non-square image, say in a 16:9 aspect ratio, the elements used to create the generated image become repetitive. This repetition manifests itself in strange distortions in the image or in the subjects of the image, such as people with six fingers or a strangely elongated car.

Moayed Haji Ali, a PhD student in computer science at Rice University, presents his work and poster at CVPR. Credit: Vicente Ordóñez-Román/Rice University

The way these models are trained also contributes to the problem.

“If you train the model only on images of a certain resolution, it can only generate images with that resolution,” said Vicente Ordóñez-Román, an associate professor of computer science who advised Haji Ali on his work alongside Guha Balakrishnan, an assistant professor of electrical and computer engineering.

Ordóñez-Román explained that this is a problem endemic to AI known as overfitting, where an AI model becomes excessively good at generating data similar to what it was trained on but cannot deviate far from those parameters.

“You could solve this problem by training the model on a wider variety of images, but that’s expensive and requires huge amounts of computing power: hundreds or even thousands of graphics processing units,” Ordóñez-Román said.

According to Haji Ali, the digital noise used by diffusion models can be translated into a signal with two types of data: local and global. The local signal contains detailed information at the pixel level, such as the shape of an eye or the texture of a dog’s fur. The global signal contains more of a general overview of the image.

The image on the left was generated by a standard method, while the image on the right was generated by ElasticDiffusion. The prompt for both images was: “Imagine a portrait of a cute owl scientist in a blue and gray outfit announcing his latest revolutionary discovery. His eyes are light brown. His attire is simple but dignified.” Credit: Moayed Haji Ali/Rice University

“One reason diffusion models need help with non-square aspect ratios is that they typically aggregate local and global information,” said Haji Ali, who worked on motion synthesis in AI-generated videos before joining Ordóñez-Román’s research group at Rice for his doctoral studies. “When the model tries to duplicate that data to account for the extra space in a non-square image, it leads to visual imperfections.”

The ElasticDiffusion method in Haji Ali’s paper takes a different approach to creating an image. Instead of lumping the two signals together, ElasticDiffusion separates the local and global signals into conditional and unconditional generation paths. It takes the difference between the conditional and unconditional predictions, obtaining a score that contains global information about the image.
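In the standard formulation, the two paths correspond to two noise predictions from the same network: one conditioned on the text prompt and one with an empty prompt. Their difference points toward the prompt’s content, while the unconditional prediction carries mostly local detail. The sketch below, with hypothetical function names and made-up numbers, contrasts keeping those two parts separate with ordinary classifier-free guidance, which sums them into a single prediction. It illustrates the decomposition the article describes, not the authors’ implementation.

```python
def split_scores(eps_cond, eps_uncond):
    """Split two noise predictions into a 'global' and a 'local' part.

    global_dir: conditional minus unconditional, the direction that moves
                the image toward the text prompt (what the image depicts).
    local:      the unconditional prediction, which mostly carries
                pixel-level detail.
    """
    global_dir = [c - u for c, u in zip(eps_cond, eps_uncond)]
    local = list(eps_uncond)
    return global_dir, local

def standard_cfg(eps_cond, eps_uncond, w):
    """Ordinary classifier-free guidance: both parts are merged into one
    prediction, eps_uncond + w * (eps_cond - eps_uncond)."""
    global_dir, local = split_scores(eps_cond, eps_uncond)
    return [l + w * g for l, g in zip(local, global_dir)]

# Made-up predictions for three "pixels".
eps_cond = [0.5, -0.25, 1.0]
eps_uncond = [0.25, -0.5, 0.5]

g, l = split_scores(eps_cond, eps_uncond)
print(g, l)                                       # [0.25, 0.25, 0.5] [0.25, -0.5, 0.5]
print(standard_cfg(eps_cond, eps_uncond, w=7.5))  # [2.125, 1.375, 4.25]
```

With w = 1 the guided prediction reduces to the conditional one; larger guidance weights push harder toward the prompt. Keeping `g` and `l` as separate values is what allows them to be processed differently.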

Then, the unconditional path, which carries the local pixel-level details, is applied to the image in quadrants, filling in the details one square at a time. The global information (what the image’s aspect ratio should be and what the image depicts, such as a dog or a person running) remains separate, so there is no risk of the AI confusing the signals and repeating data. The result is a sharper image, regardless of aspect ratio, that requires no additional training.
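This division of labor can be illustrated on a toy two-dimensional grid. In this sketch, which is a simplification rather than the authors’ code (linked under “Project code” below), a hypothetical stand-in for the unconditional prediction is applied to non-overlapping square tiles of a wide 2:1 grid, and a global term computed separately is added back afterward. The tile size, the guidance weight, and the lack of any seam handling between tiles are assumptions; the paper’s actual treatment of patch boundaries and of the global signal may differ.

```python
from typing import Callable, List

Grid = List[List[float]]

def apply_in_tiles(image: Grid, tile: int, local_fn: Callable[[Grid], Grid]) -> Grid:
    """Run local_fn on each tile-by-tile square of image and stitch the results.

    Toy assumption: height and width are exact multiples of `tile`, and tiles
    do not overlap. A real implementation would also need to handle seams.
    """
    h, w = len(image), len(image[0])
    assert h % tile == 0 and w % tile == 0, "toy version: exact tiling only"
    out = [[0.0] * w for _ in range(h)]
    for top in range(0, h, tile):
        for left in range(0, w, tile):
            patch = [row[left:left + tile] for row in image[top:top + tile]]
            result = local_fn(patch)
            for i in range(tile):
                for j in range(tile):
                    out[top + i][left + j] = result[i][j]
    return out

def combine(local: Grid, global_dir: Grid, w: float) -> Grid:
    """Add the separately computed global direction back, scaled by w."""
    return [[l + w * g for l, g in zip(lr, gr)] for lr, gr in zip(local, global_dir)]

def fake_local_fn(patch: Grid) -> Grid:
    """Hypothetical stand-in for the model's unconditional (local-detail) output."""
    return [[0.5 * v for v in row] for row in patch]

wide = [[float(c) for c in range(8)] for _ in range(4)]  # 4 x 8 grid: a 2:1 "image"
local = apply_in_tiles(wide, tile=4, local_fn=fake_local_fn)
global_dir = [[1.0] * 8 for _ in range(4)]               # computed separately
step = combine(local, global_dir, w=2.0)
print(step[0])  # [2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0, 5.5]
```

Because the local function only ever sees square tiles of the size it expects, the wide shape of the grid never reaches it; the shape-dependent information lives only in the separately handled global term.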

“This approach is a successful attempt to leverage the model’s intermediate representations and scale them up to achieve global consistency,” Ordóñez-Román said.

The only drawback of ElasticDiffusion relative to other diffusion models is time. Currently, Haji Ali’s method takes 6 to 9 times longer to generate an image. The goal is to reduce this inference time to the level of other models like Stable Diffusion or DALL-E.

“I hope this research will help define why diffusion models generate these more repetitive parts and cannot adapt to these changing aspect ratios and propose a framework that can adapt exactly to any aspect ratio, regardless of training, at the same inference time,” said Haji Ali.

More information:

ElasticDiffusion: Training-Free Arbitrary-Sized Image Generation Using Global-Local Content Separation, IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024. Authors: Moayed Haji-Ali, Guha Balakrishnan, and Vicente Ordóñez-Román, cvpr.thecvf.com/

Project page: elasticdiffusion.github.io/

Project demo: replicate.com/moayedhajiali/elasticdiffusion

Project code: github.com/MoayedHajiAli/ElasticDiffusion-official

Provided by Rice University

Citation: New research could make weird AI images a thing of the past (2024, September 15) retrieved September 15, 2024 from
