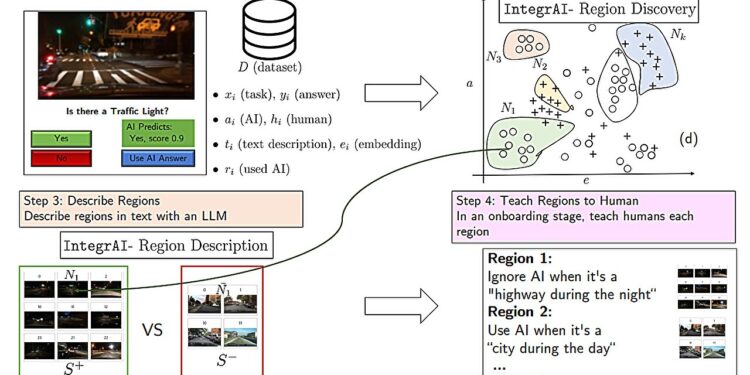

The onboarding approach proposed with the IntegrAI algorithm. Credit: arXiv (2023). DOI: 10.48550/arxiv.2311.01007

AI models that detect patterns in images can often do it better than human eyes, but not always. If a radiologist uses an AI model to help determine whether a patient’s X-rays show signs of pneumonia, when should they trust the model’s advice and when should they ignore it?

A personalized onboarding process could help this radiologist answer this question, according to researchers at MIT and the MIT-IBM Watson AI Lab. They designed a system that teaches a user when to collaborate with an AI assistant.

In this case, the training method might find situations where the radiologist trusts the model’s advice when she shouldn’t, because the model is wrong. The system automatically learns rules for how she should collaborate with the AI and describes them in natural language.

During onboarding, the radiologist practices collaborating with the AI using training exercises based on these rules, receiving feedback about her performance and the AI’s performance.

The researchers found that this onboarding procedure led to an accuracy improvement of about 5% when humans and AI collaborated on an image prediction task. Their results also show that simply telling the user when to trust the AI, without training, led to worse performance.

Importantly, the researchers’ system is fully automated, so it learns to create the onboarding process from data on the human and AI performing a specific task. It can also adapt to different tasks, so it can be scaled up and used in many situations where humans and AI models work together, such as social media content moderation, writing, and programming.

“Very often, people are given these AI tools to use without any training to help them figure out when it is going to be helpful. That’s not what we do with nearly every other tool that people use; there is almost always some kind of tutorial that comes with it. But for AI, this seems to be missing. We are trying to tackle this problem from a methodological and behavioral perspective,” says Hussein Mozannar, a graduate student in the Social and Engineering Systems doctoral program within the Institute for Data, Systems, and Society (IDSS) and lead author of a paper about this training process.

The researchers envision that such onboarding will be a crucial part of training for medical professionals.

“One could imagine, for example, that doctors making treatment decisions with the help of AI will first have to undergo training similar to what we propose. We may need to rethink everything from continuing education to the way clinical trials are designed,” says senior author David Sontag, a professor of EECS, a member of the MIT-IBM Watson AI Lab and the MIT Jameel Clinic, and the leader of the Clinical Machine Learning Group of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Mozannar, who is also a researcher with the Clinical Machine Learning Group, is joined on the paper by Jimin J. Lee, an undergraduate in electrical engineering and computer science; Dennis Wei, a principal research scientist at IBM Research; and Prasanna Sattigeri and Subhro Das, research staff members at the MIT-IBM Watson AI Lab. The paper is available on the arXiv preprint server and will be presented at the Conference on Neural Information Processing Systems.

Training that evolves

Existing onboarding methods for human-AI collaboration often consist of training materials produced by human experts for specific use cases, which makes them difficult to scale. Some related techniques rely on explanations, where the AI tells the user its confidence in each decision, but research has shown that explanations are rarely helpful, Mozannar says.

“The capabilities of the AI model are constantly evolving, so the use cases where the human could potentially benefit from it are growing over time. At the same time, the user’s perception of the model continues to change. So we need a training procedure that also evolves over time,” he adds.

To accomplish this, their onboarding method is learned automatically from data. It is built from a dataset that contains many instances of a task, such as detecting the presence of a traffic light in a blurry image.

The first step of the system is to collect data about the human and the AI performing this task. In this case, the human would try to predict, with the help of AI, whether the blurred images contain traffic lights.

The system embeds these data points in a latent space, which is a representation of the data in which similar data points are closer together. It uses an algorithm to discover regions of this space where the human collaborates incorrectly with the AI. These regions capture instances where the human trusted the AI’s prediction but the prediction was wrong, and vice versa.

Perhaps the human wrongly trusts the AI when the images show a highway at night.
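The region-discovery step can be illustrated with a small sketch. This is not the IntegrAI algorithm itself: it swaps in a simple fixed-radius neighborhood search, and the `Interaction` record, the `find_worst_region` function, the 2-D embeddings, and the toy data are all hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Interaction:
    embedding: tuple[float, ...]  # hypothetical latent-space coordinates
    relied_on_ai: bool            # did the human go with the AI's prediction?
    ai_correct: bool              # was the AI's prediction right?


def is_miscollaboration(x: Interaction) -> bool:
    # Either the human trusted a wrong AI, or ignored a correct one.
    return x.relied_on_ai != x.ai_correct


def find_worst_region(data, radius, min_points=3):
    """Return (center, rate, members) for the ball of the given radius with
    the highest miscollaboration rate, or None if no ball is large enough.
    A simple stand-in for the paper's region-discovery algorithm."""
    best = None
    for candidate in data:
        members = [x for x in data
                   if dist(x.embedding, candidate.embedding) <= radius]
        if len(members) < min_points:
            continue
        rate = sum(is_miscollaboration(m) for m in members) / len(members)
        if best is None or rate > best[1]:
            best = (candidate.embedding, rate, members)
    return best


# Toy data (made up): a "highway at night" cluster where the human trusts a
# wrong AI, and a "daytime" cluster where trusting the AI is fine.
toy = [
    Interaction((0.0, 0.0), True, False),
    Interaction((0.1, 0.0), True, False),
    Interaction((0.0, 0.1), True, False),
    Interaction((0.1, 0.1), True, False),
    Interaction((5.0, 5.0), True, True),
    Interaction((5.1, 5.0), True, True),
    Interaction((5.0, 5.1), True, True),
]

center, rate, members = find_worst_region(toy, radius=0.5)
print(center, rate, len(members))
```

On this toy data the search settles on the cluster near the origin, where every interaction is a case of trusting a wrong prediction.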

After discovering the regions, a second algorithm uses a large language model to describe each region as a rule, using natural language. The algorithm refines this rule iteratively by finding contrasting examples. It might describe this region as “ignore the AI when it is a highway at night.”
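The idea of refining a rule against contrasting examples can be sketched without a language model. In the sketch below, a rule is just a set of keywords and each example is a set of caption words; both are stand-ins for the natural-language descriptions the researchers’ system produces, and `matches`, `refine_rule`, and the captions are hypothetical. The loop starts from a rule that matches everything and tightens it whenever an example from outside the region still fits.

```python
def matches(rule: set[str], caption: set[str]) -> bool:
    # A keyword rule applies when every keyword appears in the caption.
    return rule <= caption


def refine_rule(inside: list[set[str]], outside: list[set[str]],
                max_rounds: int = 5) -> set[str]:
    """Greedy keyword stand-in for LLM-based rule refinement.
    Assumes `inside` is non-empty."""
    shared = set.intersection(*inside)  # words true of every in-region example
    rule: set[str] = set()              # the empty rule matches everything
    for _ in range(max_rounds):
        # Contrasting examples: outside the region, yet the rule still fits.
        counterexamples = [c for c in outside if matches(rule, c)]
        unused = shared - rule
        if not counterexamples or not unused:
            break
        # Add the shared word that appears in the fewest counterexamples.
        best = min(sorted(unused),
                   key=lambda w: sum(w in c for c in counterexamples))
        rule.add(best)
    return rule


# Toy captions (made up for illustration).
inside = [{"highway", "night", "blurry"}, {"highway", "night", "rain"},
          {"highway", "night"}]
outside = [{"highway", "day"}, {"city", "night"}, {"city", "day"}]

rule = refine_rule(inside, outside)
print(sorted(rule))
```

Each round uses the counterexamples to decide which detail the rule is missing, which is the role contrasting examples play in the description step.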

These rules are used to build training exercises. The onboarding system shows the human an example, in this case a blurry highway scene at night, along with the AI’s prediction, and asks the user whether the image shows traffic lights. The user can answer yes, no, or use the AI’s prediction.

If the human is wrong, they are shown the correct answer along with performance statistics for the human and the AI on these instances of the task. The system does this for each region and, at the end of the training process, repeats the exercises the human got wrong.
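The exercise loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the researchers’ implementation: the `Exercise` record, `resolve`, `run_onboarding`, the second rule, and the accuracy figures are hypothetical, and the user is simulated by a scripted list of answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exercise:
    rule: str              # natural-language rule for the region
    ai_prediction: bool    # True means "traffic light present"
    truth: bool            # ground-truth label
    human_accuracy: float  # region statistics shown as feedback (made up here)
    ai_accuracy: float


def resolve(answer: str, ex: Exercise) -> bool:
    """Map the three allowed answers to a final prediction."""
    if answer == "ai":
        return ex.ai_prediction
    if answer in ("yes", "no"):
        return answer == "yes"
    raise ValueError(f"unexpected answer: {answer!r}")


def run_onboarding(exercises, ask):
    """Run one pass, give feedback on mistakes, then repeat missed exercises.
    `ask` is any callable that takes an Exercise and returns 'yes', 'no', or 'ai'."""
    feedback, missed = [], []
    for ex in exercises:
        if resolve(ask(ex), ex) != ex.truth:
            missed.append(ex)
            feedback.append(
                f"Correct answer: {'yes' if ex.truth else 'no'}. "
                f"Rule: {ex.rule}. On similar examples, human accuracy was "
                f"{ex.human_accuracy:.0%} and AI accuracy was {ex.ai_accuracy:.0%}."
            )
    # Repeat the exercises the user got wrong.
    still_missed = [ex for ex in missed if resolve(ask(ex), ex) != ex.truth]
    return feedback, still_missed


exercises = [
    Exercise("ignore the AI when it is a highway at night", True, False, 0.8, 0.4),
    Exercise("trust the AI on daytime city streets", True, True, 0.7, 0.9),
]

# Scripted user: relies on the AI twice, then answers "no" on the repeat.
scripted = iter(["ai", "ai", "no"])
feedback, still_missed = run_onboarding(exercises, lambda ex: next(scripted))
print(feedback)
print(len(still_missed))
```

Passing the user in as a callable keeps the loop independent of how answers are collected, so the same sketch works with a scripted user or an interactive prompt.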

“After that, the human has learned something about these regions that we hope they will remember in the future to make more accurate predictions,” says Mozannar.

Onboarding improves accuracy

The researchers tested this system with users on two tasks: detecting traffic lights in blurry images and answering multiple-choice questions from many domains (such as biology, philosophy, and computer science).

They first showed users a card with information about the AI model, how it was trained, and a breakdown of its performance across broad categories. Users were divided into five groups: some only saw the card, some went through the researchers’ onboarding procedure, some went through a baseline onboarding procedure, some went through the researchers’ onboarding procedure and received recommendations on when they should or should not trust the AI, and others received only recommendations.

Only the researchers’ onboarding procedure, without recommendations, significantly improved users’ accuracy, increasing their performance on the traffic light prediction task by about 5% without slowing them down. However, onboarding was not as effective for the question-answering task. The researchers believe this is because the AI model, ChatGPT, provided explanations with each answer that conveyed whether it should be trusted.

But providing recommendations without onboarding had the opposite effect: not only did users perform worse, they also took longer to make predictions.

“When you only give someone recommendations, it seems like they’re confused and don’t know what to do. It derails their process. People also don’t like being told what to do, so that is a factor as well,” Mozannar says.

Providing recommendations alone could harm the user if those recommendations are wrong, he adds. With onboarding, on the other hand, the biggest limitation is the amount of available data. If there isn’t enough data, the onboarding stage won’t be as effective, he says.

In the future, he and his collaborators want to conduct larger studies to assess the short- and long-term effects of onboarding. They also want to leverage unlabeled data for the onboarding process and find methods to efficiently reduce the number of regions without omitting important examples.

More information:

Hussein Mozannar et al, Effective Human-AI Teams via Learned Natural Language Rules and Onboarding, arXiv (2023). DOI: 10.48550/arxiv.2311.01007

Provided by the Massachusetts Institute of Technology

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and education.

Citation: Automated system teaches users when to collaborate with an AI assistant (December 7, 2023) retrieved December 7, 2023 from
