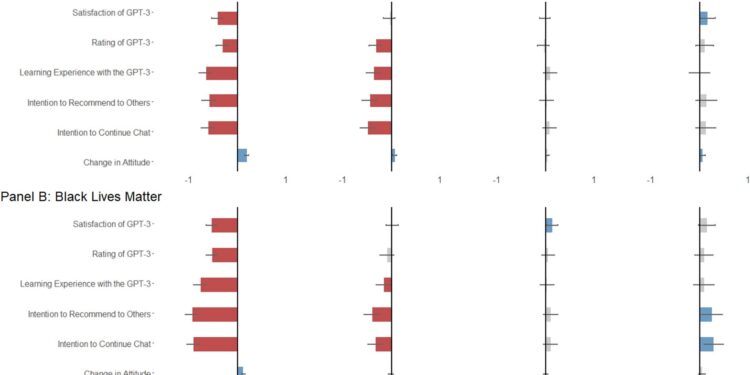

Associations between user demographic attributes and user experience after chatting with GPT-3 about climate change (A) and BLM (B). Note: Each chart in a panel represents an aggregated summary of one user demographic attribute (e.g., opinion minority status) and its performance in the six different linear regression models for six user experience variables (e.g., satisfaction with the chatbot). The bars represent the coefficient values, while the error bars represent the 95% confidence intervals. Statistically significant negative coefficients are marked in red, significant positive coefficients are marked in blue, and insignificant coefficients are marked in gray. Each chart shows part of the full regression models. In the full regression models, we controlled for other demographic variables, including participants’ age, income level, prior experience and knowledge using chatbots, and each participant’s language styles, such as the average number of words they typed, the use of positive and negative emotion words, the use of analytical words, and influence and authentic expressions in conversations. Credit: Scientific Reports (2024). DOI: 10.1038/s41598-024-51969-w

People who were more skeptical of human-caused climate change or the Black Lives Matter movement and took part in a conversation with a popular AI chatbot were disappointed with the experience, but left the conversation more supportive of the scientific consensus on climate change or of BLM. That is according to researchers studying how these chatbots handle interactions with people from different cultural backgrounds.

Savvy humans can adapt to the political leanings and cultural expectations of their interlocutors to ensure they are understood, but more and more often, humans find themselves in conversation with computer programs, called large language models, intended to imitate the way people communicate.

Researchers at the University of Wisconsin-Madison who study AI wanted to understand how a complex large language model, GPT-3, would perform across a culturally diverse group of users in complex discussions. The model is a precursor to the one that powers the much-publicized ChatGPT. The researchers recruited more than 3,000 people in late 2021 and early 2022 to have real-time conversations with GPT-3 about climate change and BLM.

“The fundamental goal of an interaction like this between two people (or agents) is to improve understanding of each person’s point of view,” says Kaiping Chen, a professor of life sciences communication who studies how people discuss science and deliberate on related policy issues, often digitally. “A good large language model would probably allow users to feel the same kind of understanding.”

Chen and Yixuan “Sharon” Li, a computer science professor at UW-Madison who studies the security and reliability of AI systems, along with their students Anqi Shao and Jirayu Burapacheep (now a graduate student at Stanford University), published their results this month in the journal Scientific Reports.

Study participants were invited to engage in conversation with GPT-3 through a chat setup designed by Burapacheep. Participants were invited to discuss climate change or BLM with GPT-3, but were otherwise asked to approach the experience however they wished. The average conversation went back and forth for about eight turns.

Most participants came away from their conversation with similar levels of satisfaction.

“We asked them a number of questions about the user experience: Do you like it? Would you recommend it?” says Chen. “Across gender, race and ethnicity, there’s not much difference in their ratings. Where we saw big differences was across opinions on controversial issues and different levels of education.”

The roughly 25% of participants who reported the lowest levels of agreement with the scientific consensus on climate change or the least agreement with BLM were, compared to the remaining 75%, significantly more dissatisfied with their GPT-3 interactions. They gave the chatbot scores half a point or more lower on a 5-point scale.

Despite the lower scores, the chat changed their thinking on current issues. The hundreds of people who were least supportive of the realities of climate change and its human-made causes moved a total of 6 percent closer to the favorable end of the scale.

“They showed in their post-chat surveys that they had greater positive attitude changes after their conversation with GPT-3,” says Chen. “I won’t say that they started fully acknowledging human-caused climate change or that they suddenly support Black Lives Matter, but when we repeated our survey questions on these topics after their very short conversations, there was a significant change: more positive attitudes toward the majority views on climate change or BLM.”

GPT-3 offered different response styles between the two topics, including increased justification of human-caused climate change.

“It was interesting. When people expressed some disagreement with climate change, GPT-3 was likely to tell them they were wrong and provide supporting evidence,” Chen said. “GPT-3’s response to people who said they didn’t really support BLM was more like, ‘I don’t think it would be a good idea to talk about this. As much as I like to help you, this is a question we really don’t agree on.’”

That’s not a bad thing, Chen says. Fairness and understanding take different forms to bridge different gaps. Ultimately, that is the hope for the chatbot research. Next steps include exploring the finer differences between chatbot users, but effective dialogue between divided people is Chen’s goal.

“We don’t always want to make users happy. We wanted them to learn something, even if it wouldn’t change their attitude,” says Chen. “What we can learn from interacting with a chatbot about the importance of understanding perspectives, values and cultures is important for understanding how we can open dialogue between people, the kind of dialogues that are important to society.”

More information:

Kaiping Chen et al, Conversational AI and fairness by evaluating GPT-3’s communication with diverse social groups on controversial topics, Scientific Reports (2024). DOI: 10.1038/s41598-024-51969-w

Provided by University of Wisconsin-Madison

Citation: Discussions with AI change attitudes on climate change, Black Lives Matter (January 25, 2024) retrieved January 25, 2024 from
